CS455 Selected Lecture Notes

This is one big WEB page, used for printing

These are not intended to be complete lecture notes.

Complicated figures or tables or formulas are included here

in case they were not clear or not copied correctly in class.

Computer commands, directory names and file names are included.

Specific help may be included here yet not presented in class.

Source code may be included in line or by a link.

Lecture numbers correspond to the syllabus numbering.

Lecture 1, Introduction

Lecture 2, Rocket Science

Lecture 3, Simultaneous Equations

Lecture 3a, Case Study, Matrix Inversion

Lecture 3b, multiprocessors, MPI, threads and tasks

Lecture br, Boundary reduction of equations

Lecture 4, Least Square Fit

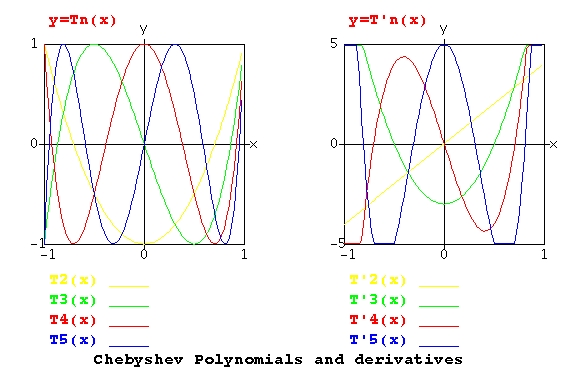

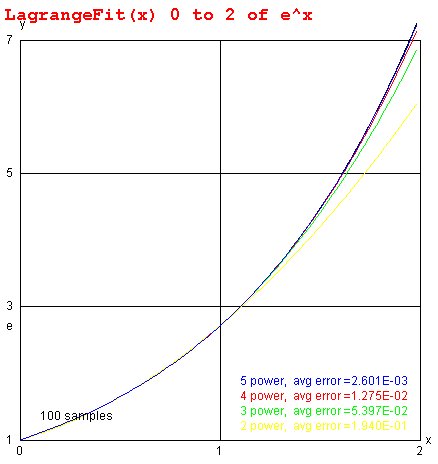

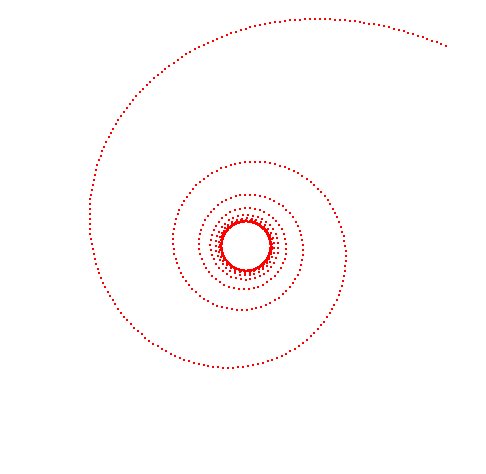

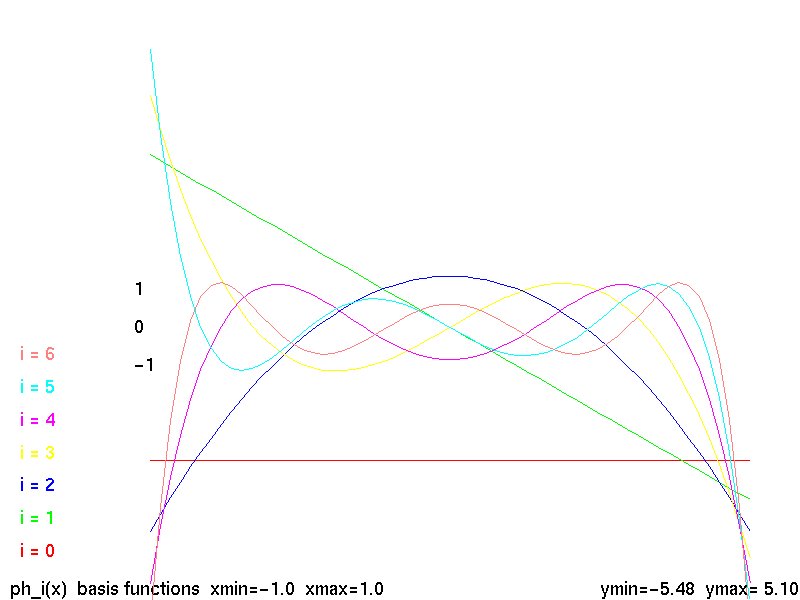

Lecture 5, Polynomials

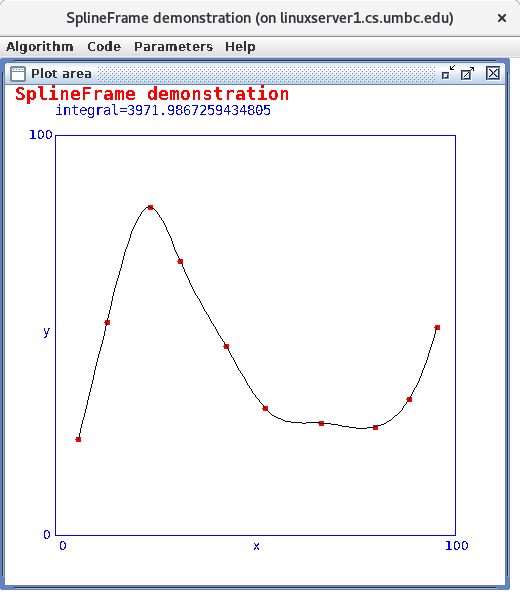

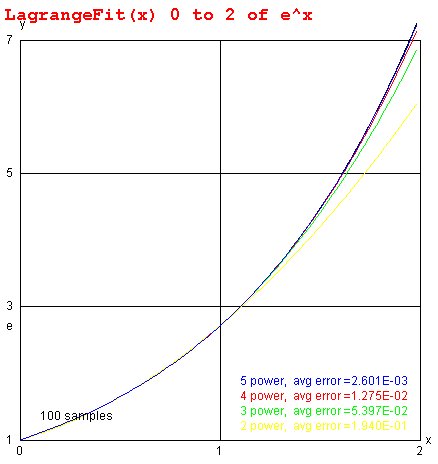

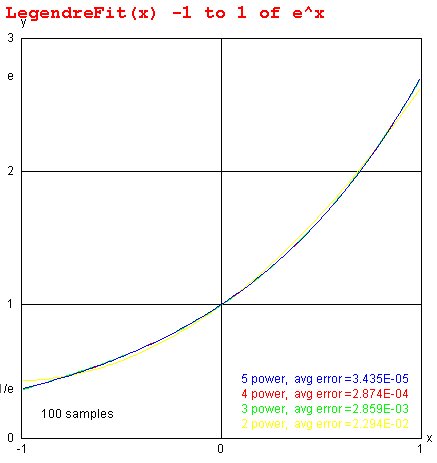

Lecture 6, Curve Fitting

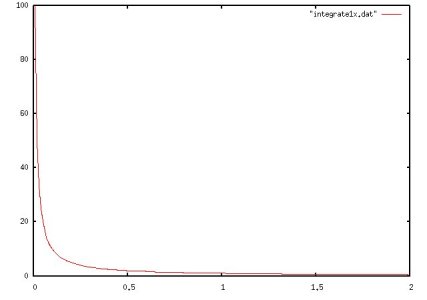

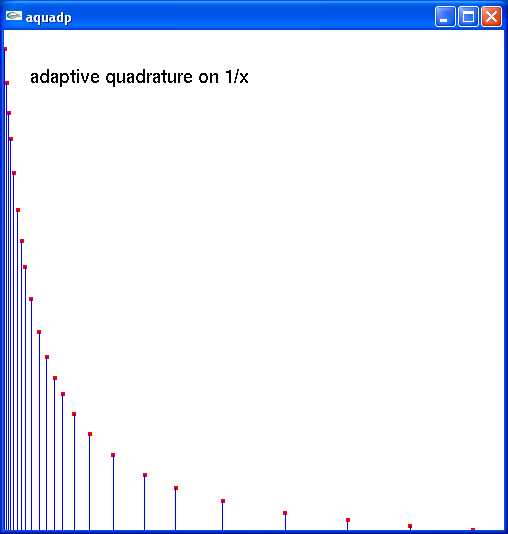

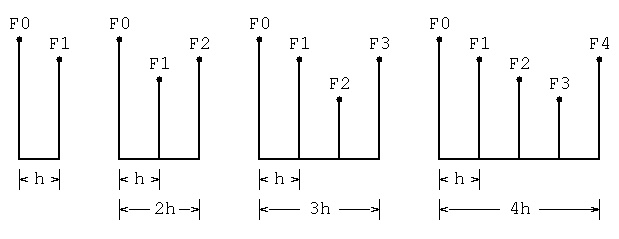

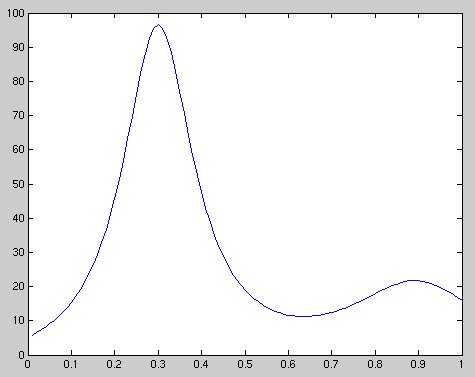

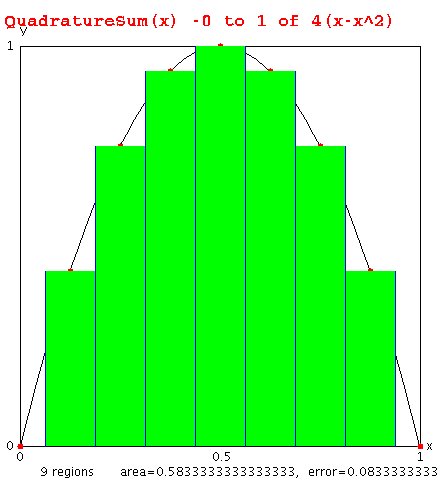

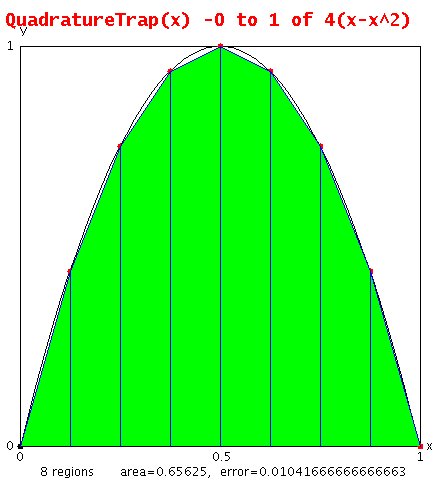

Lecture 7, Numerical Integration

Lecture 8, Numerical Integration 2

Lecture 9, Review 1

Lecture 10, Quiz 1

Lecture 11, Complex Arithmetic

Lecture 11, More Complex Arithmetic

Lecture 12, Complex Functions

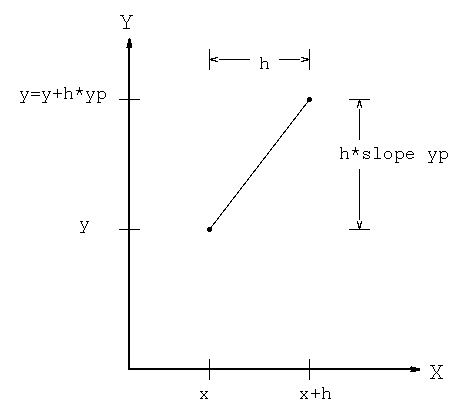

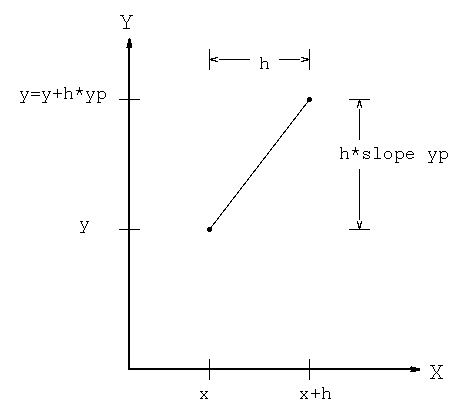

Lecture 13, Eigenvalues of a Complex Matrix

Lecture 14, LAPACK

Lecture 15, Multiple precision, bignum

Lecture 16, Finding Roots and Nonlinear Equations

Lecture 17, Optimization, finding minima

Lecture 18, FFT, Fast Fourier Transform

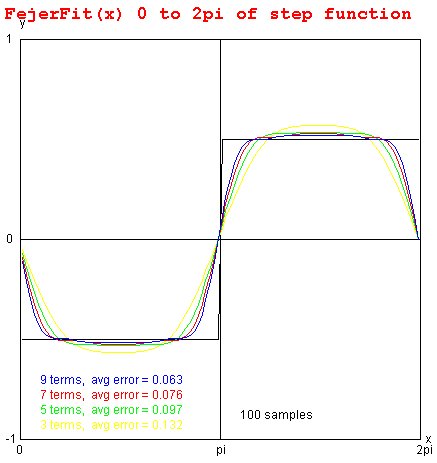

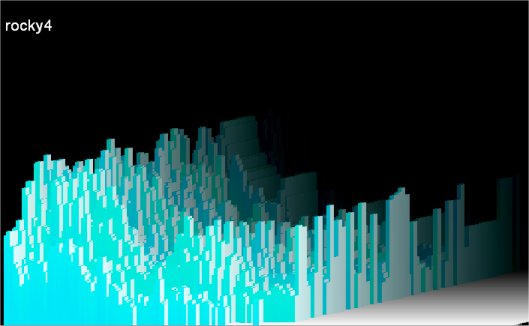

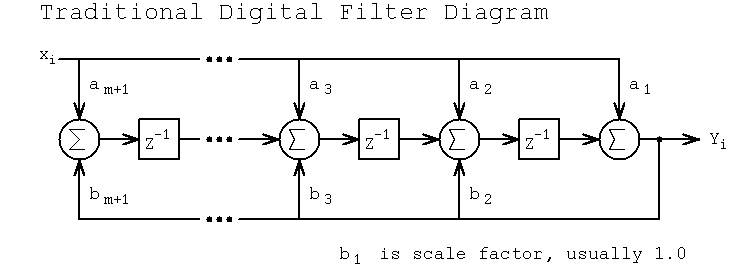

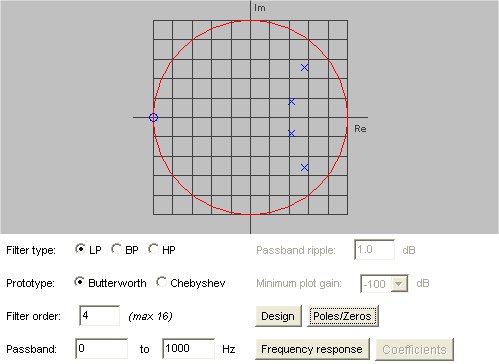

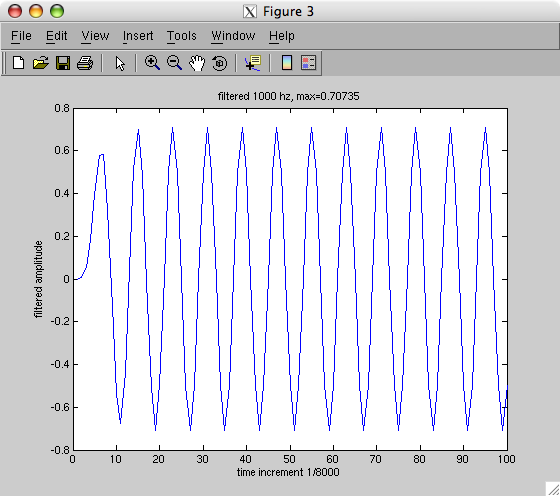

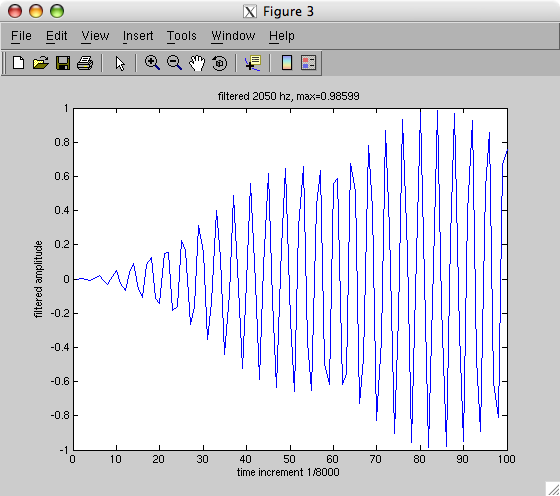

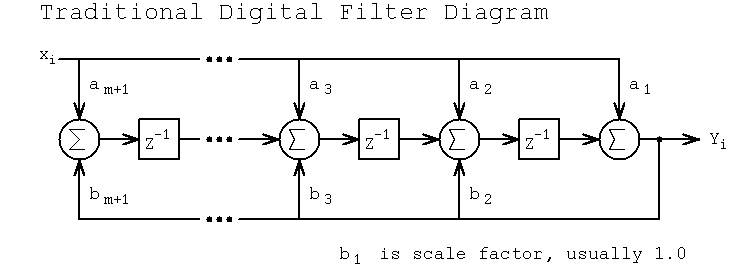

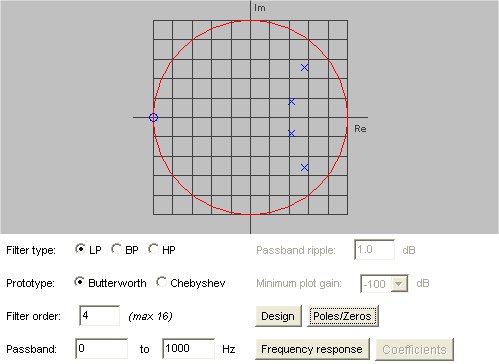

Lecture 18a, Digital Filtering

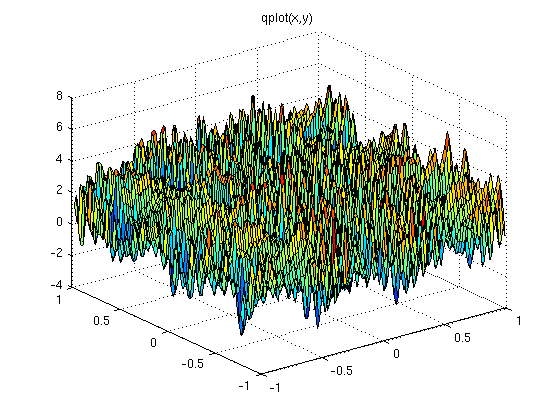

Lecture 18b, Molecular frequency response

Lecture 19, Review 2

Lecture 20, Quiz 2

Lecture 21, Benchmarks, time and size

Lecture 22, Project Discussion

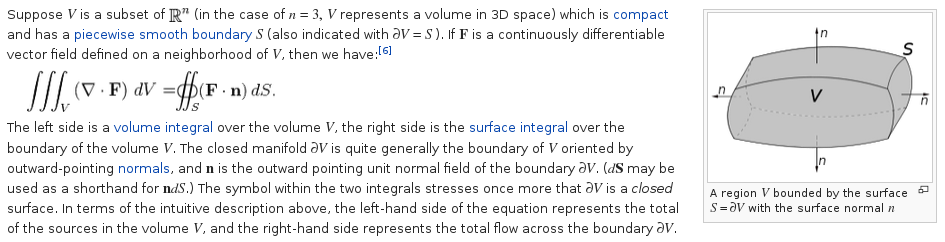

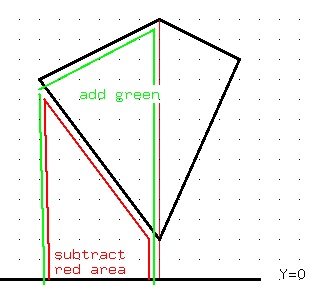

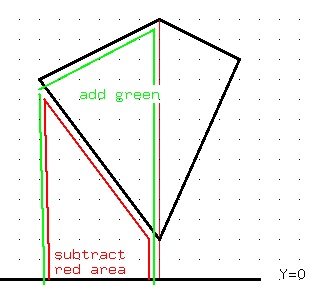

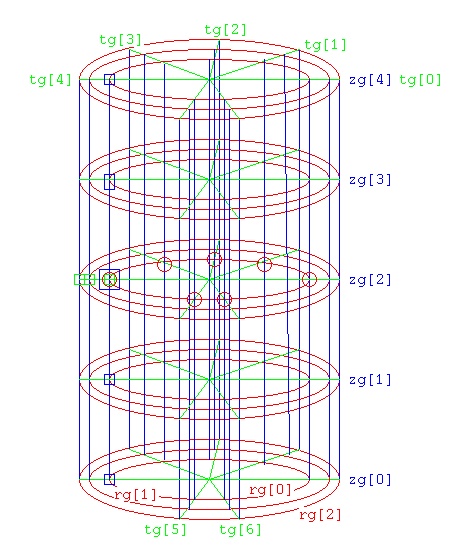

Lecture 23, Computing Volume and Area

Lecture 24, Numerical Differentiation

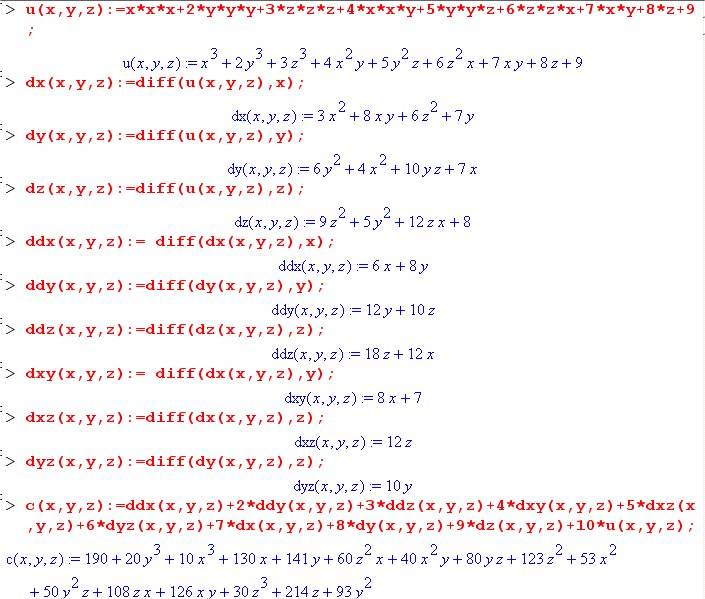

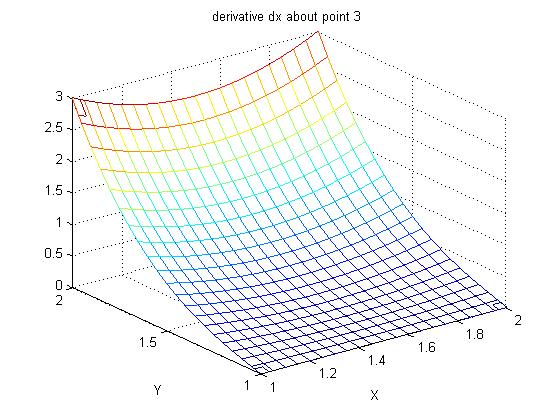

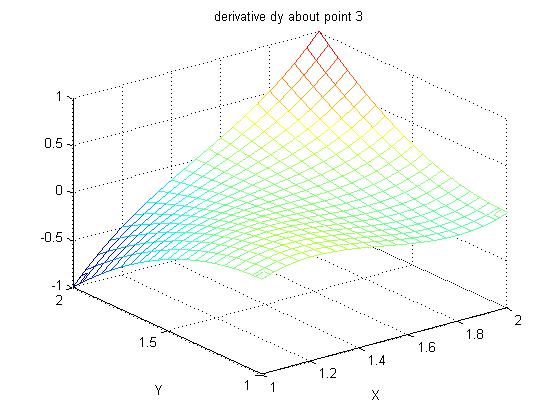

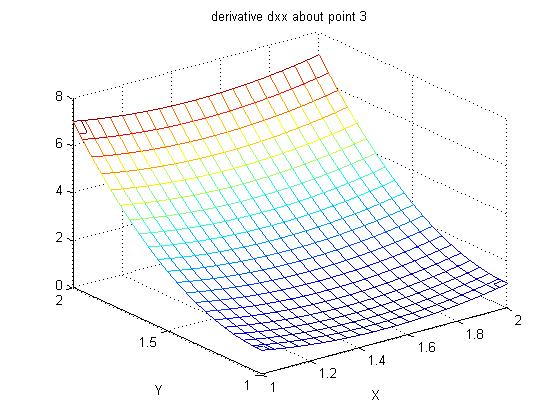

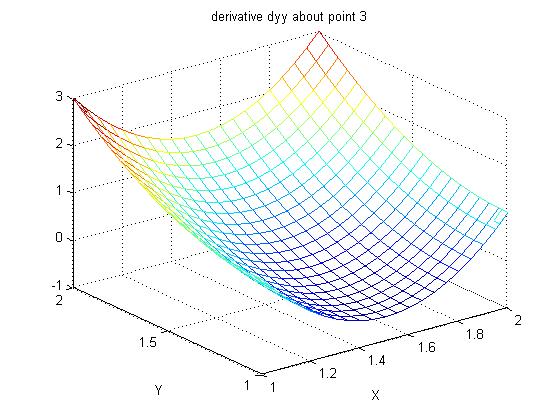

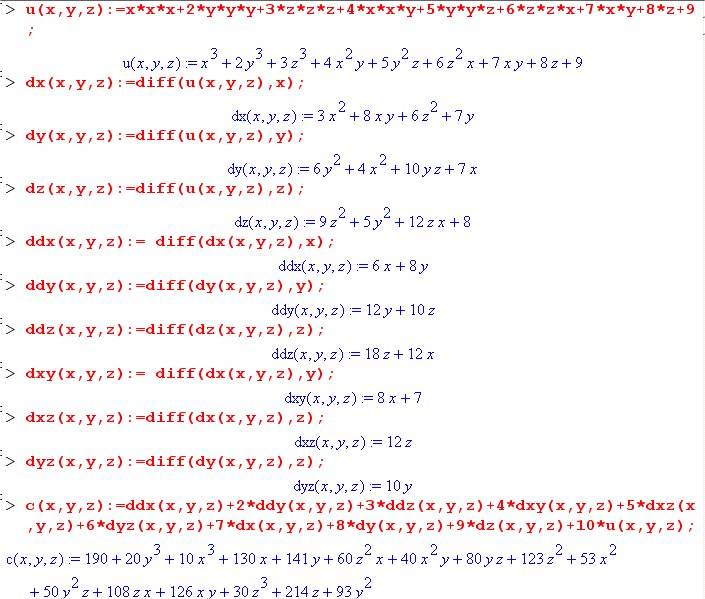

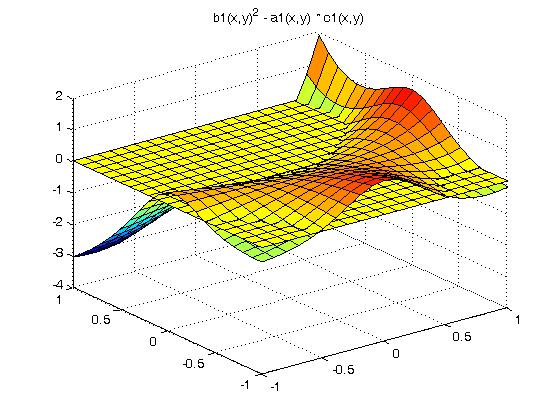

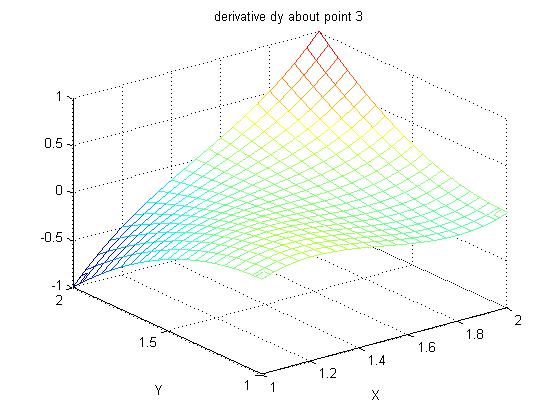

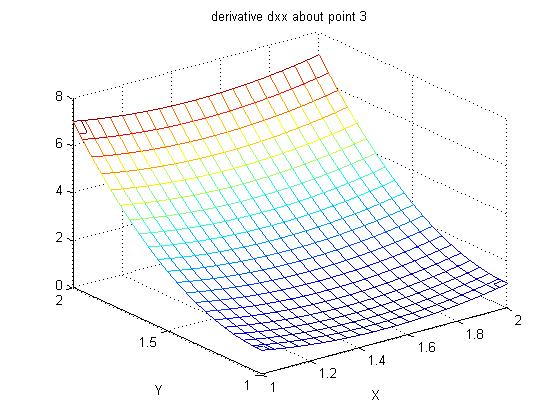

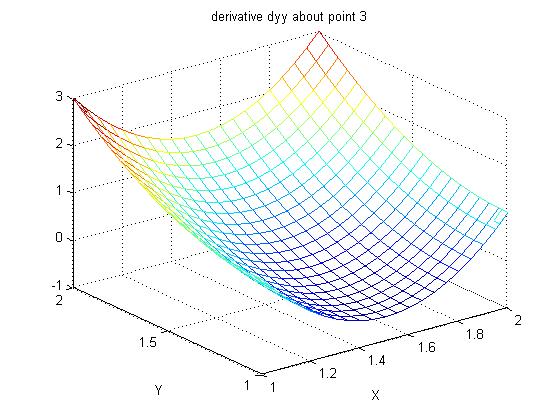

Lecture 24a, Computing partial derivatives

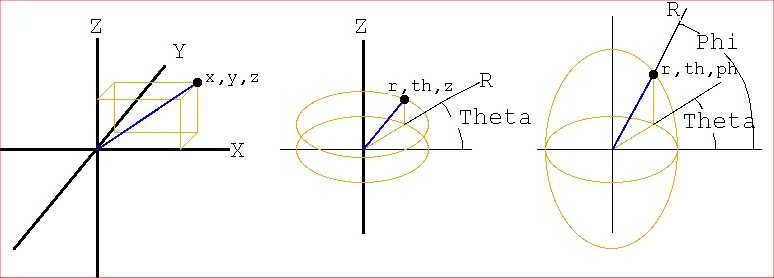

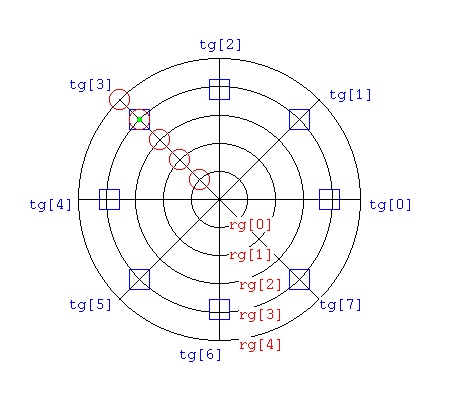

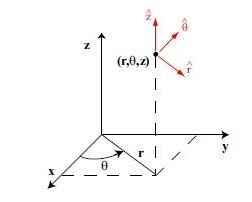

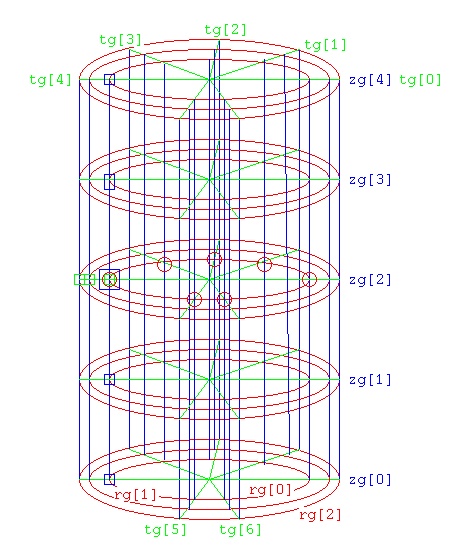

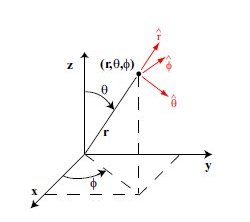

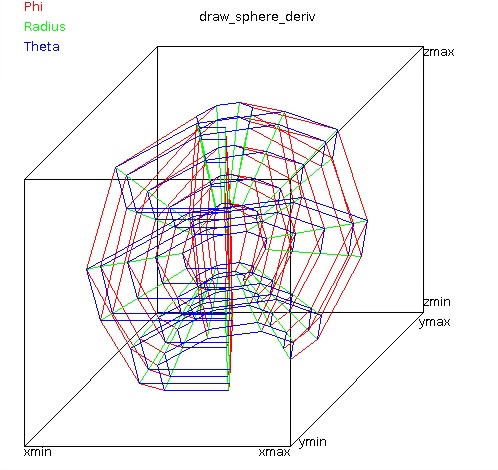

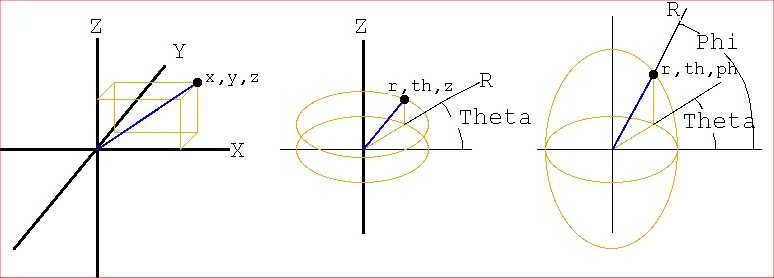

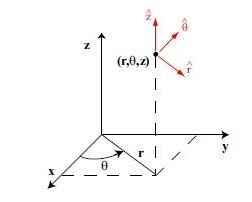

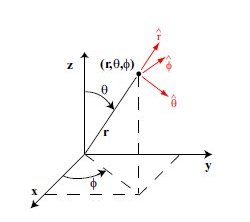

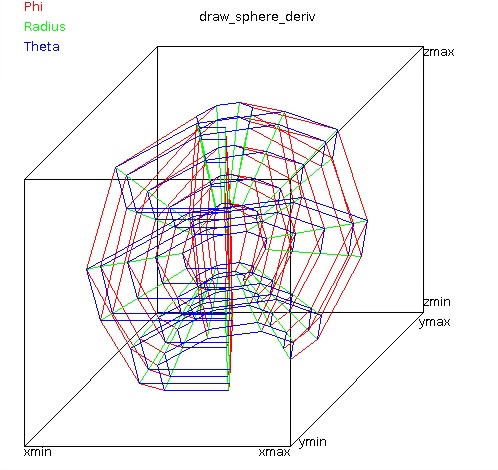

Lecture 24b, Computing partial derivatives in polar, cylindrical, spherical

Lecture 24b4, toward del^4 in spherical coordinates

Lecture 25, Ordinary Differential Equations

Lecture 26, Ordinary Differential Equations

Lecture 27, Partial Differential Equations

Lecture 27a, Differential Equation Definitions

Lecture ODE PDE Overview

Lecture 28, Partial Differential Equations

Lecture 28a, Additional Differential Equations

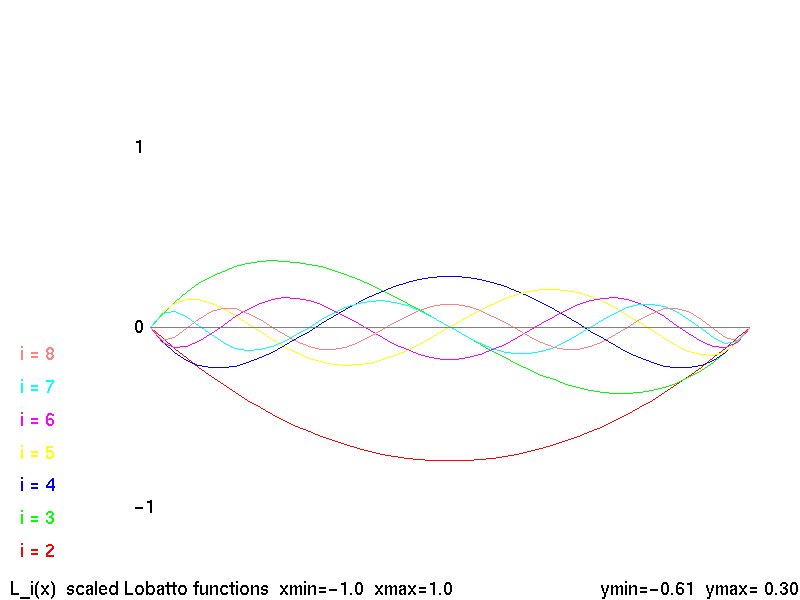

Lecture 28d, Biharmonic PDE using higher order

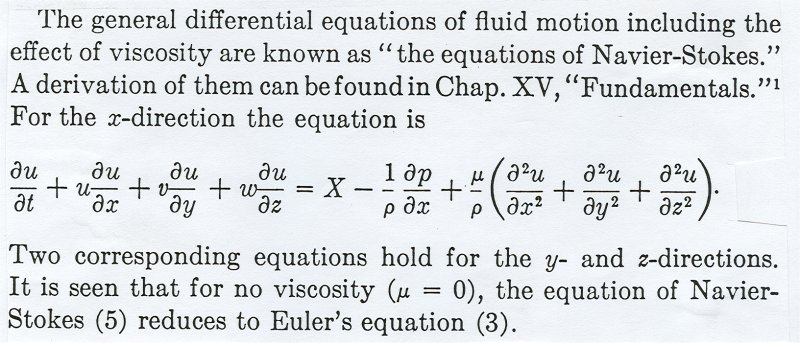

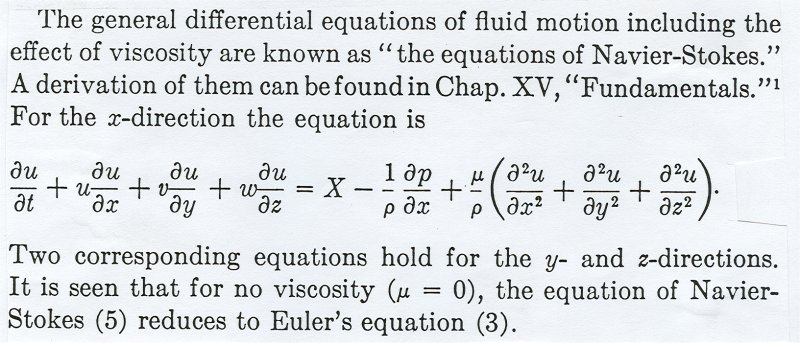

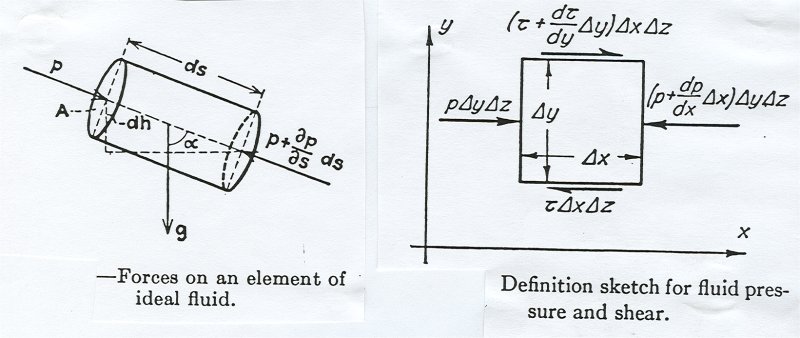

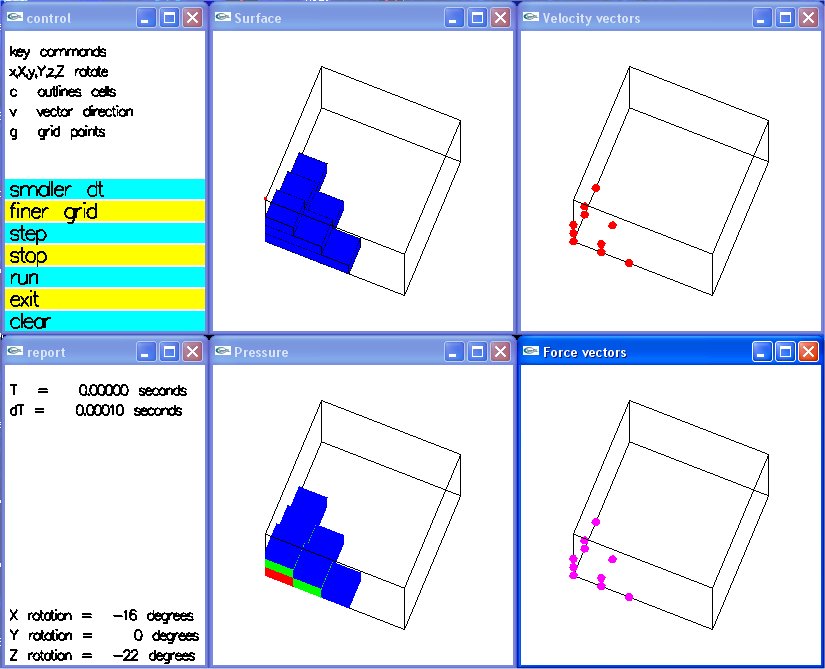

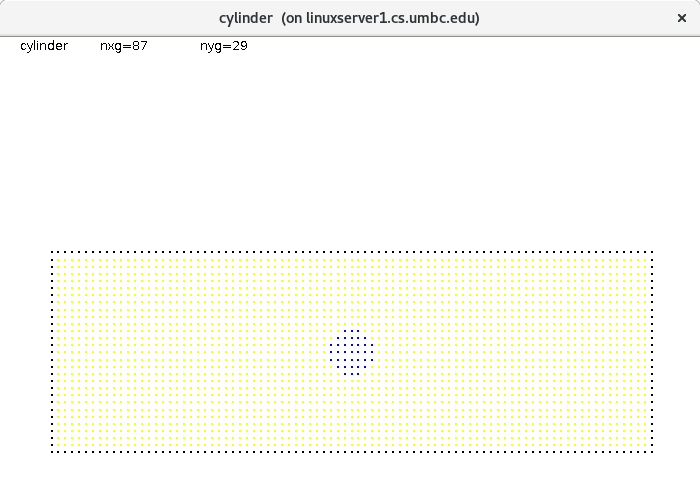

Lecture 28b, Navier Stokes case study

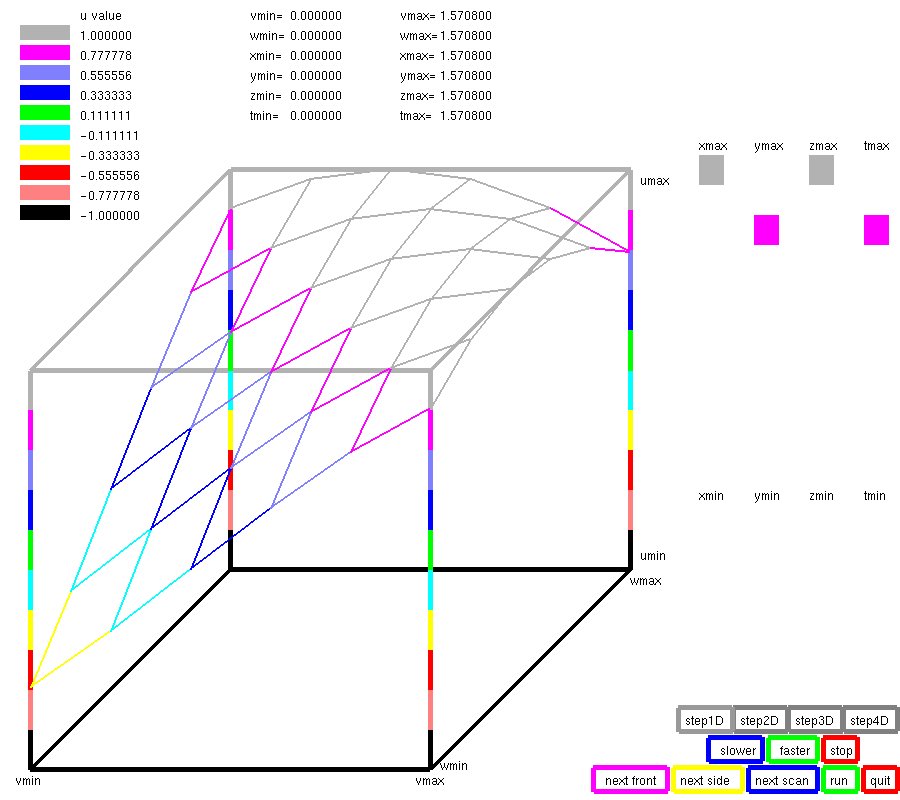

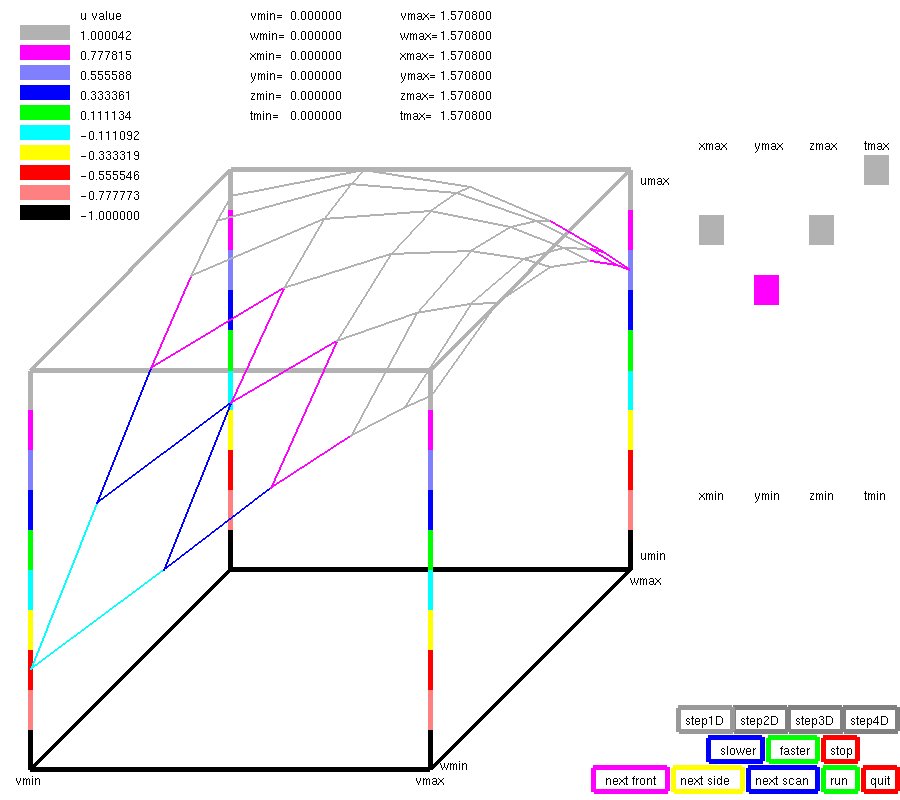

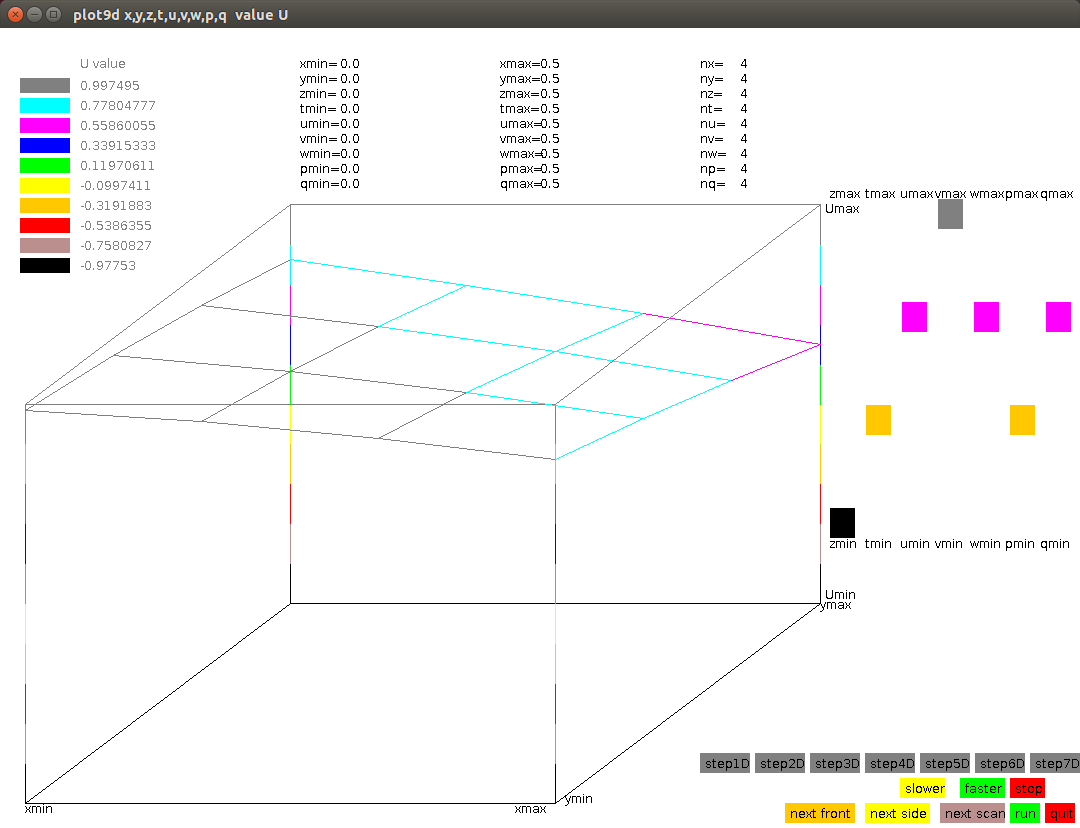

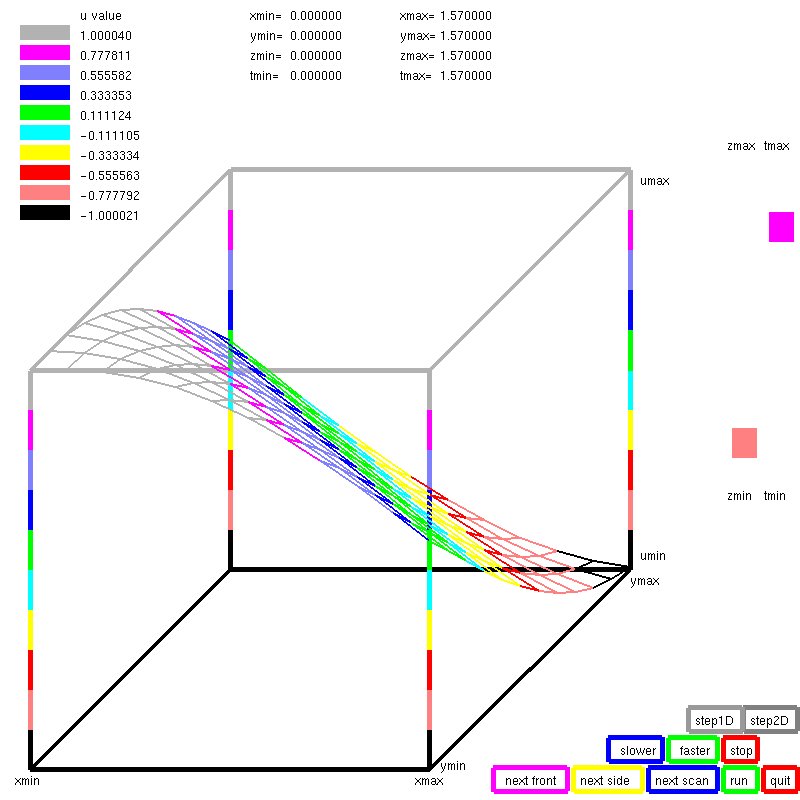

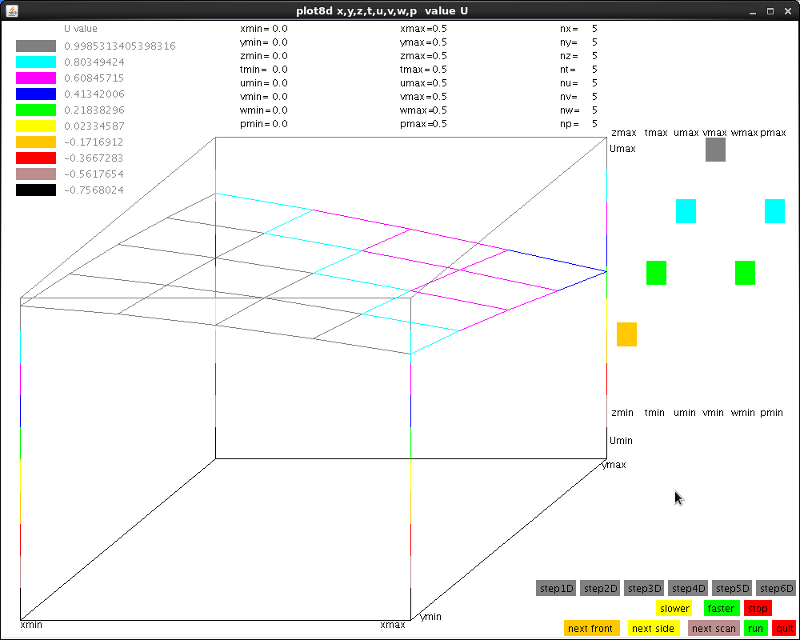

Lecture 28e, 5D five dimensions, independent variables

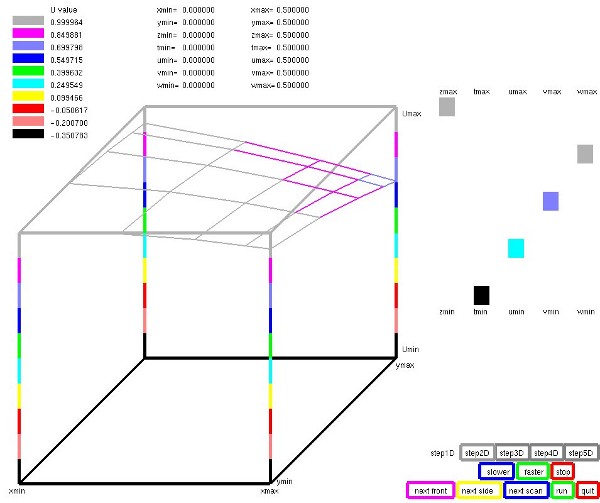

Lecture 28f, 6D six dimensions, Biharmonic

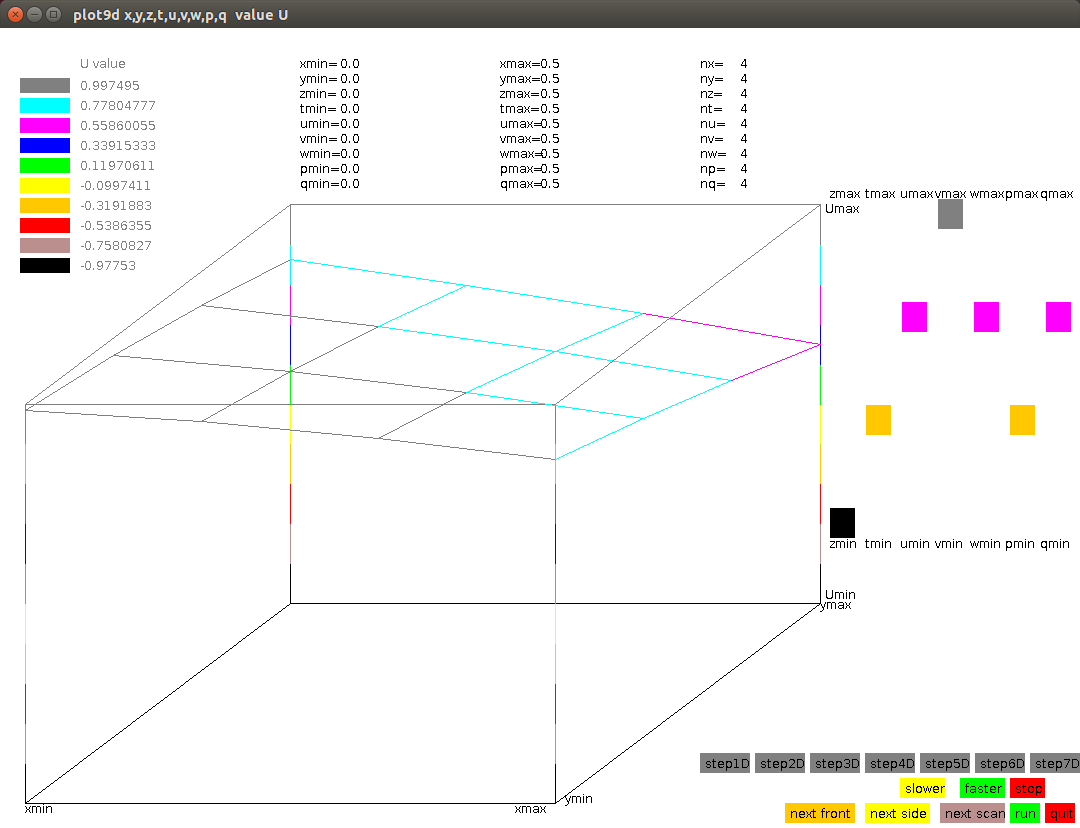

Lecture 28g, extended to 7 dimensions

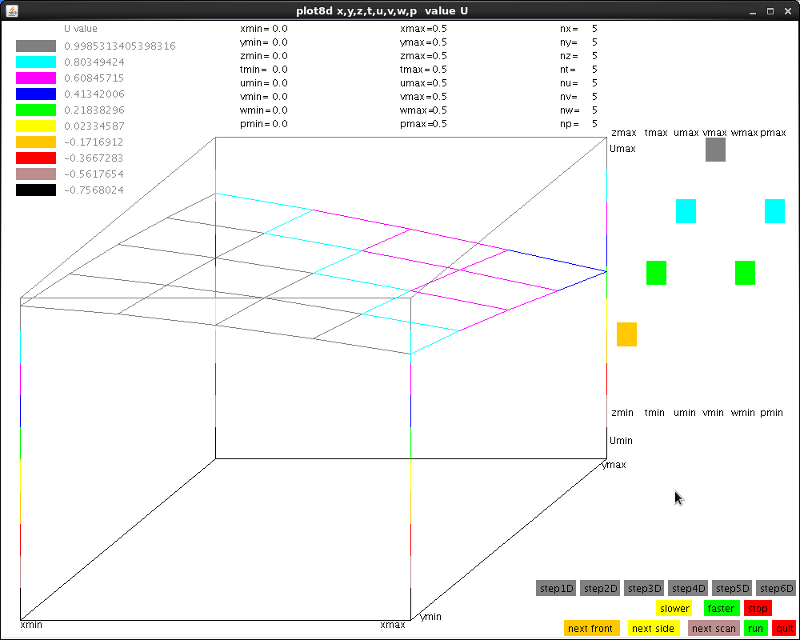

Lecture 28k, extended to 8 dimensions

Lecture 28m, extended to 9 dimensions

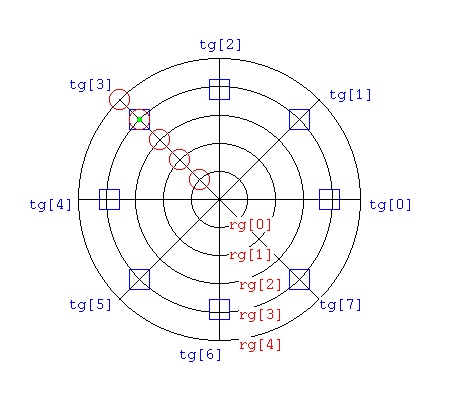

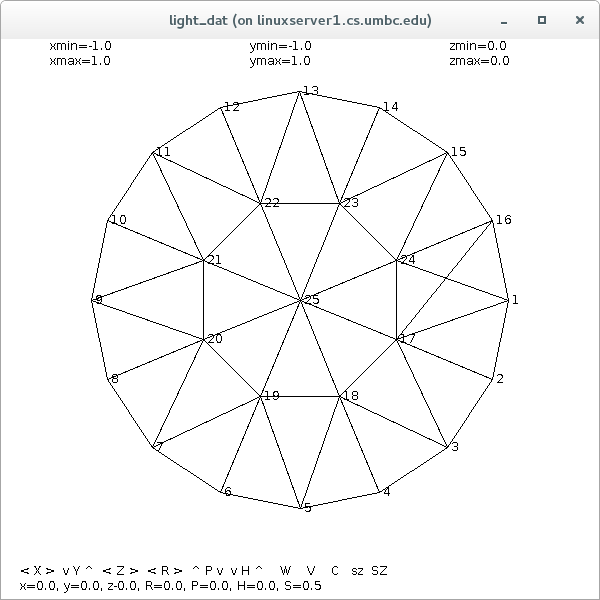

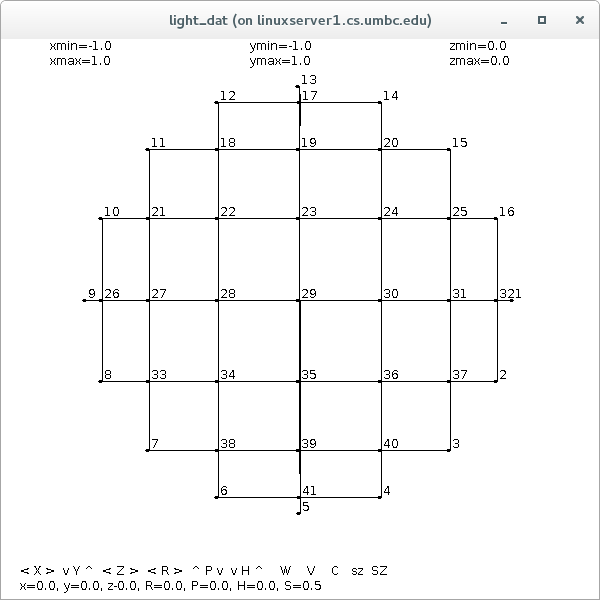

Lecture 28h, PDE polar, cylindrical, spherical

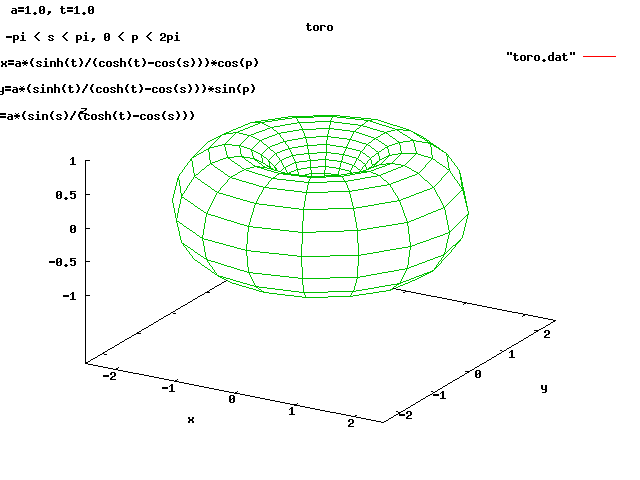

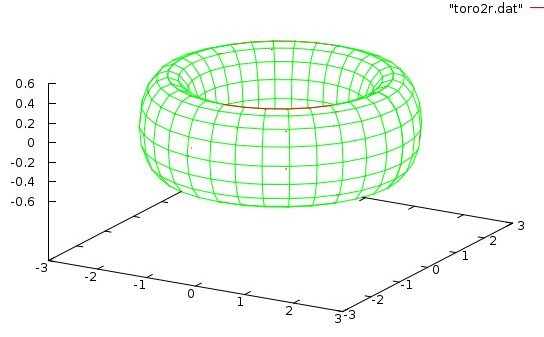

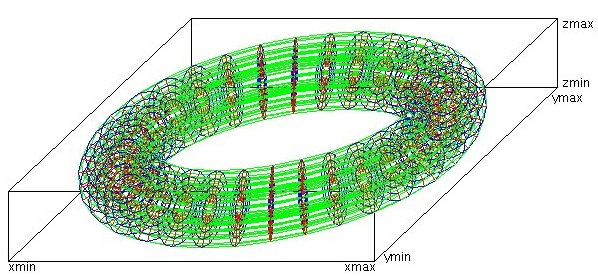

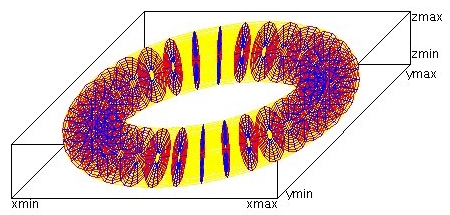

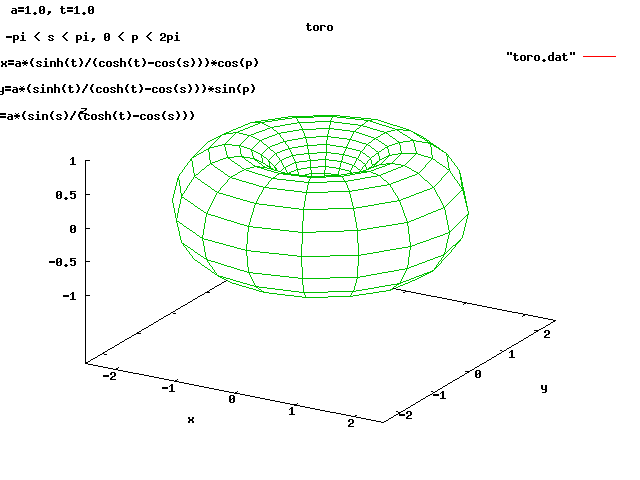

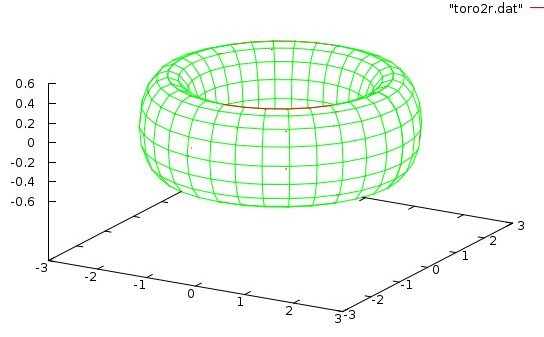

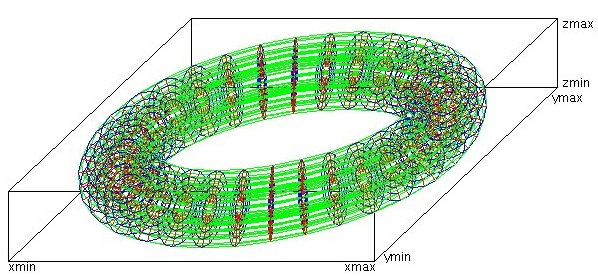

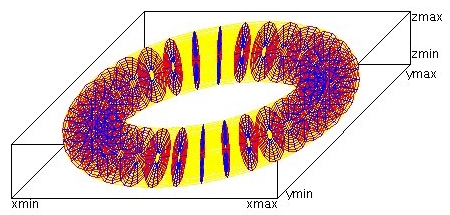

Lecture 28j, PDE toroid geometry

Lecture 29, Review

Lecture 30, Final Exam

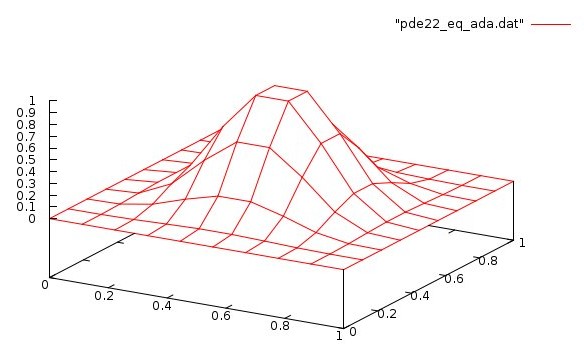

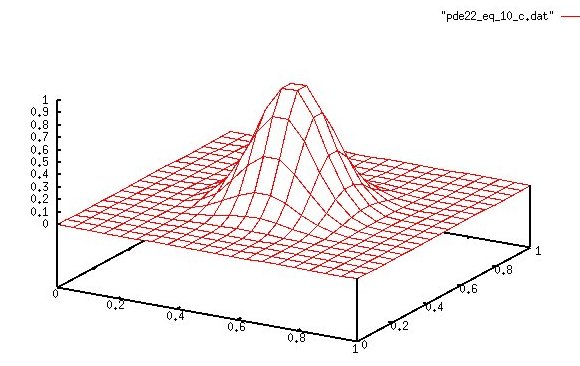

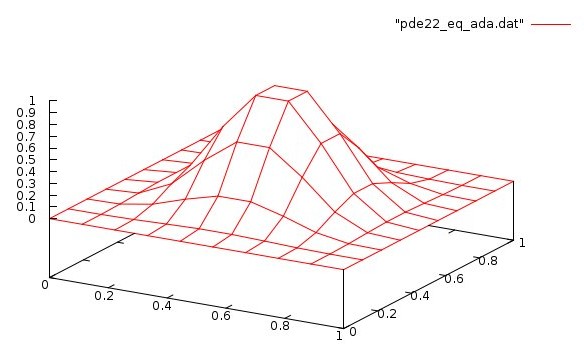

Supplemental L31, Creating PDE Test Cases

Supplemental L31a, sparse solution of PDE

Supplemental L31b, Nonlinear PDE

Supplemental L31c, Parallel PDE

Supplemental L31d, Parallel Multiple Precision PDE

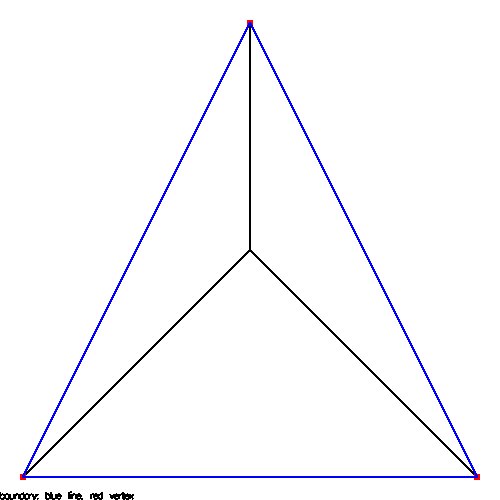

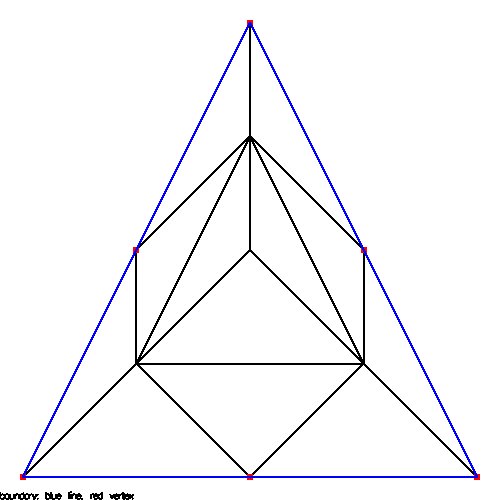

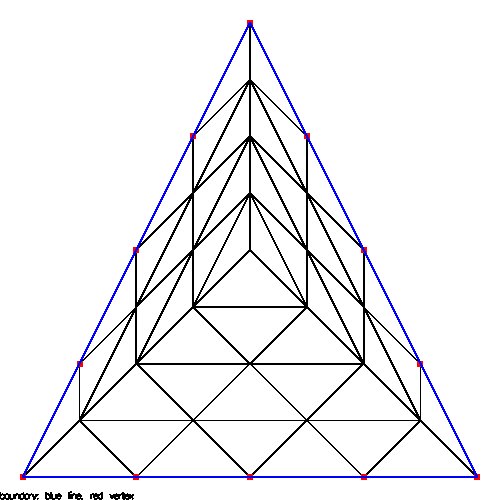

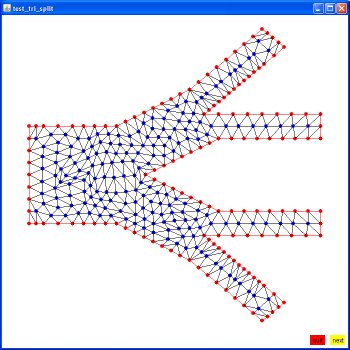

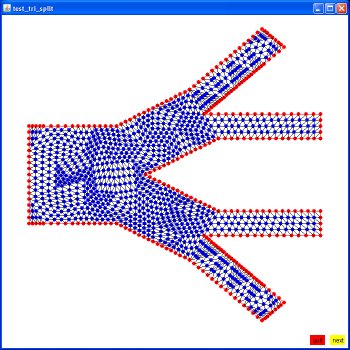

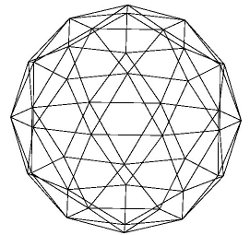

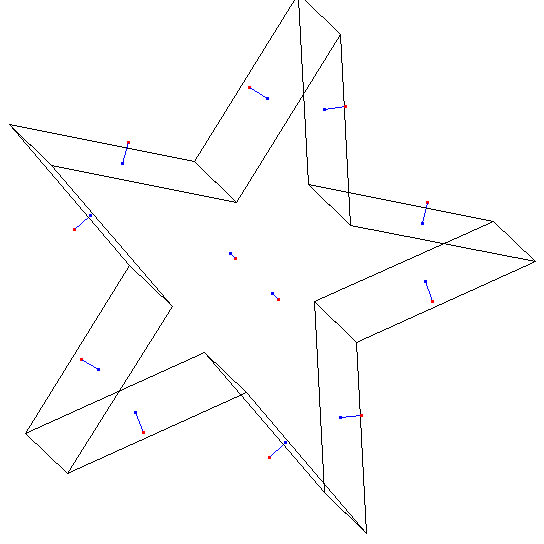

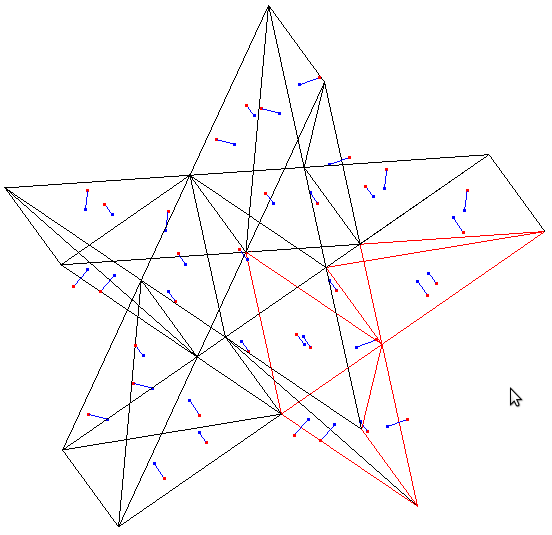

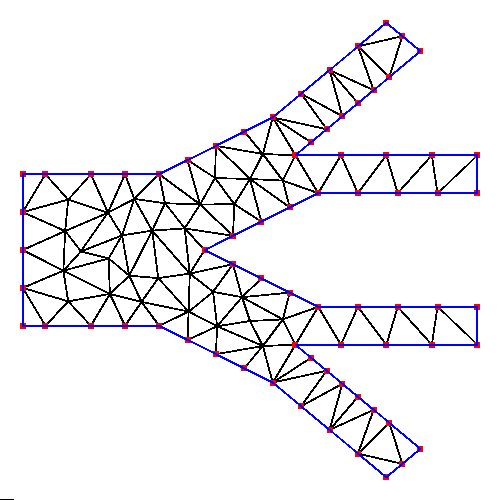

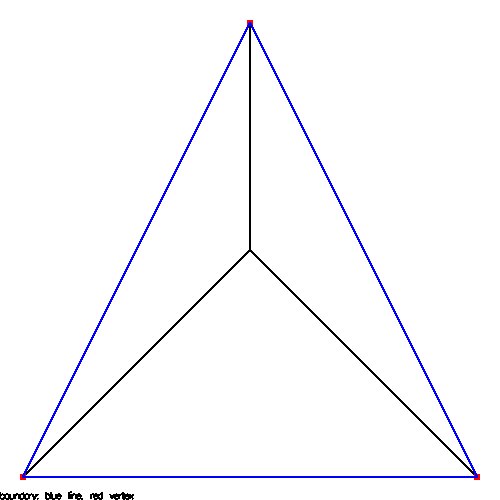

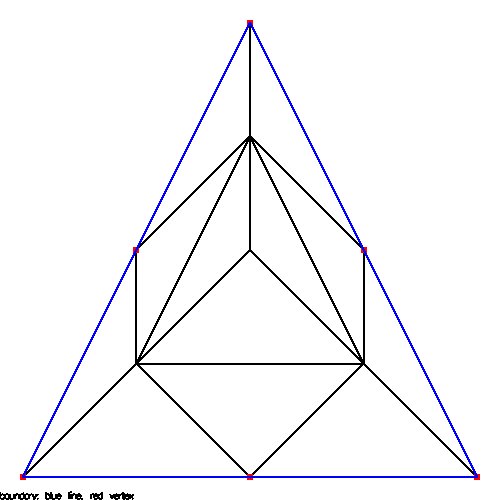

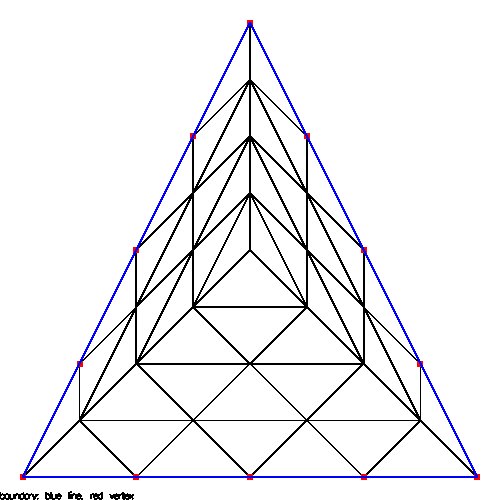

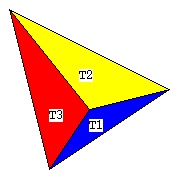

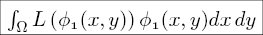

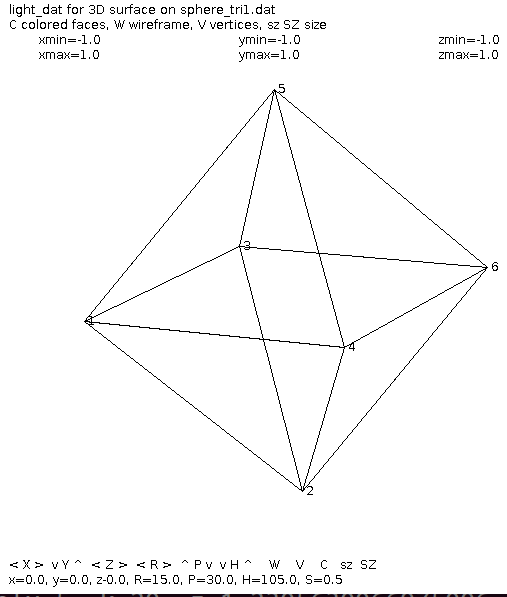

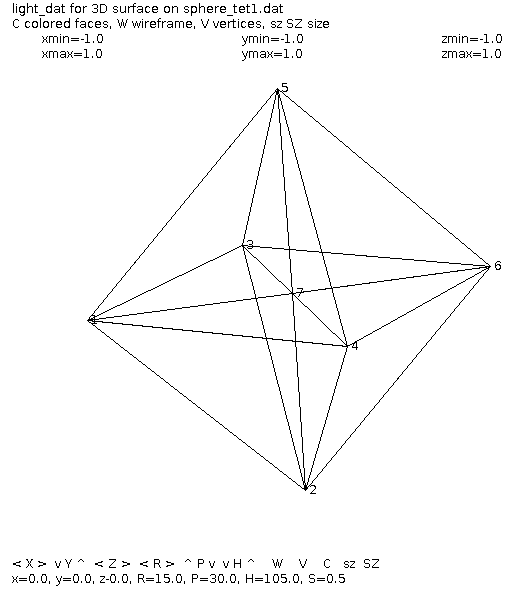

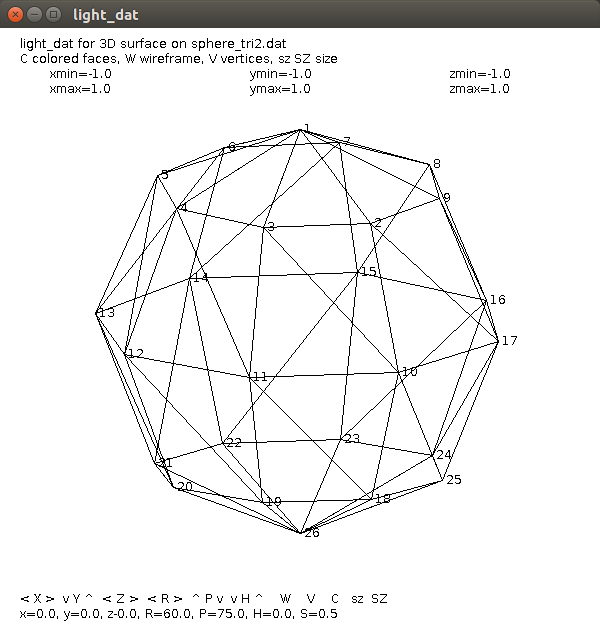

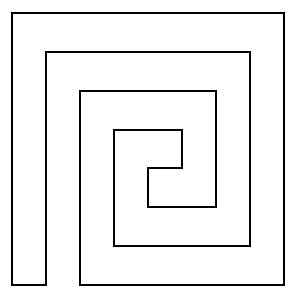

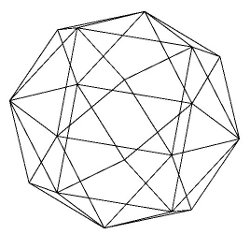

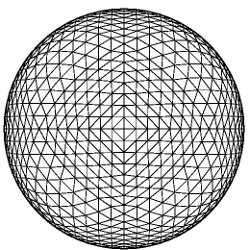

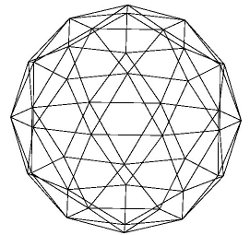

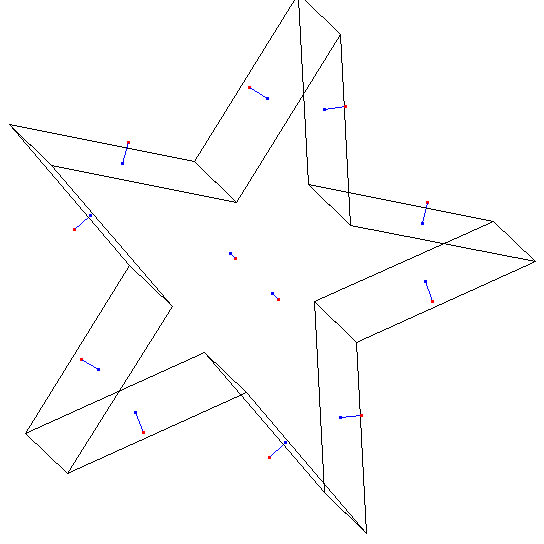

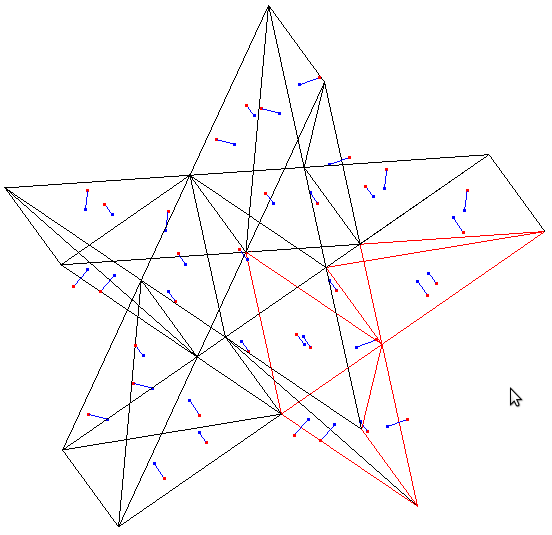

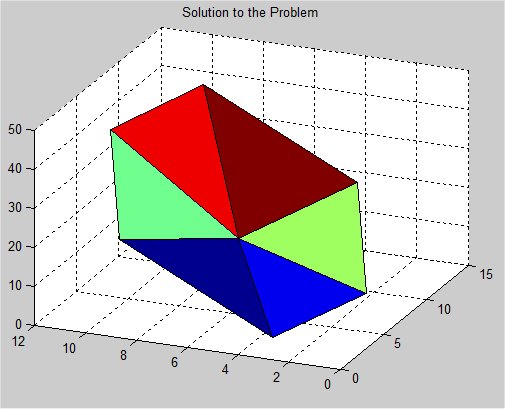

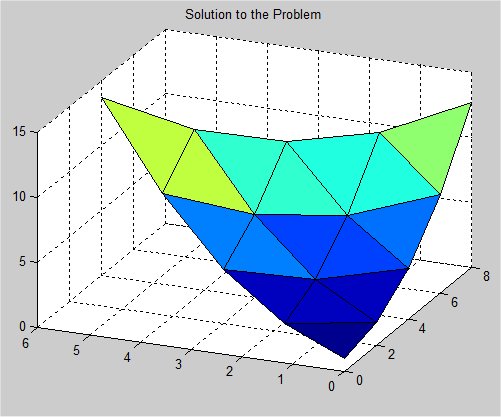

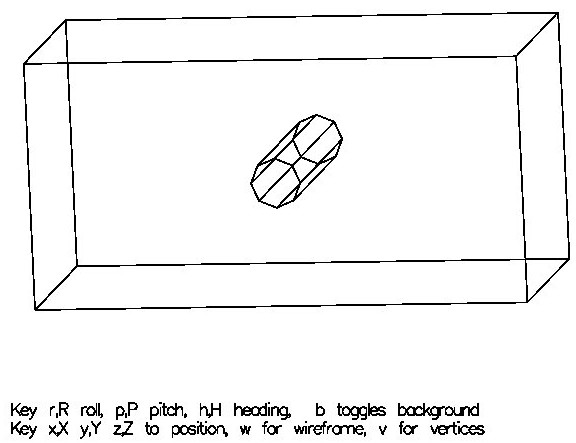

Supplemental L32, Finite Element Method

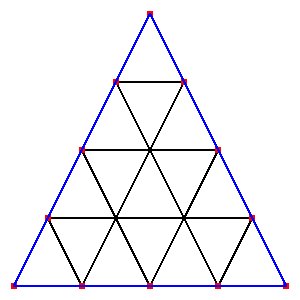

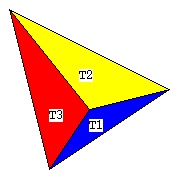

Supplemental L33, Finite Element Method, triangle

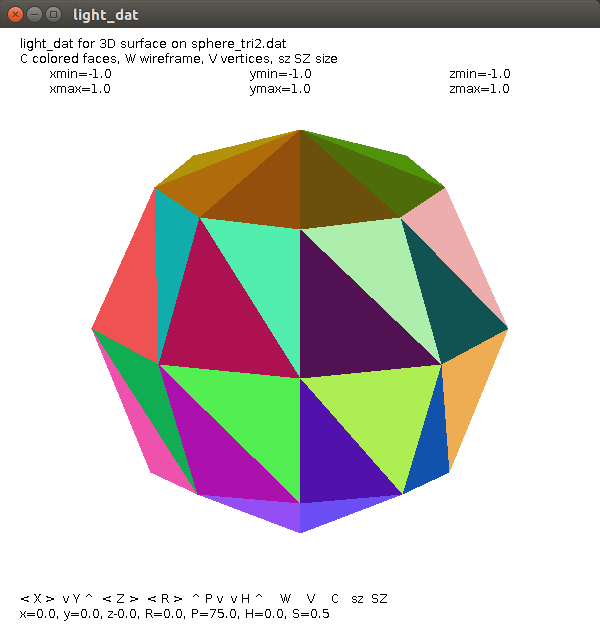

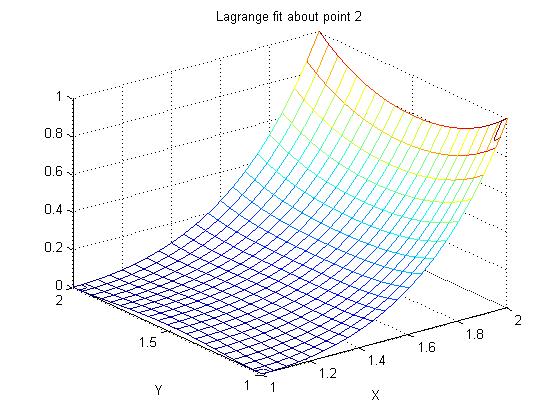

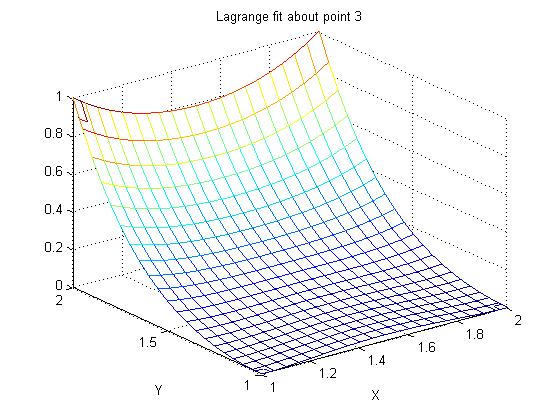

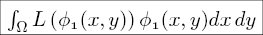

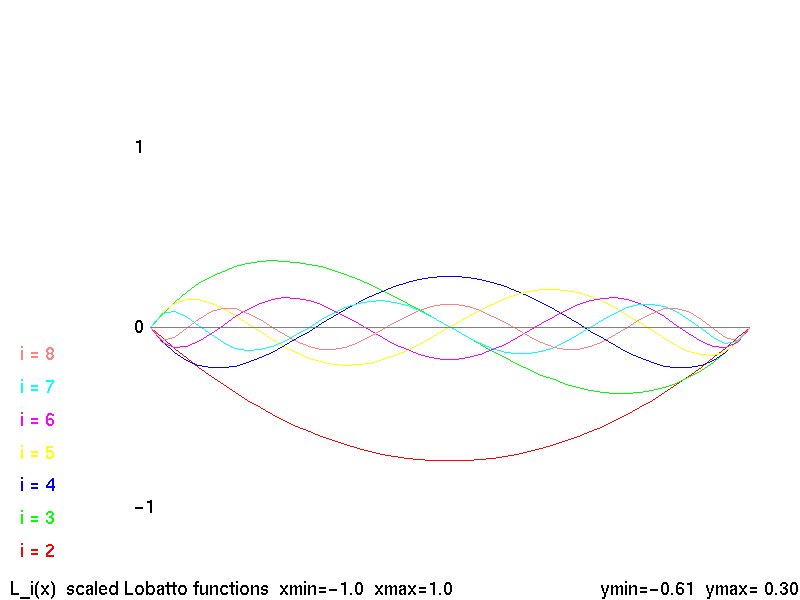

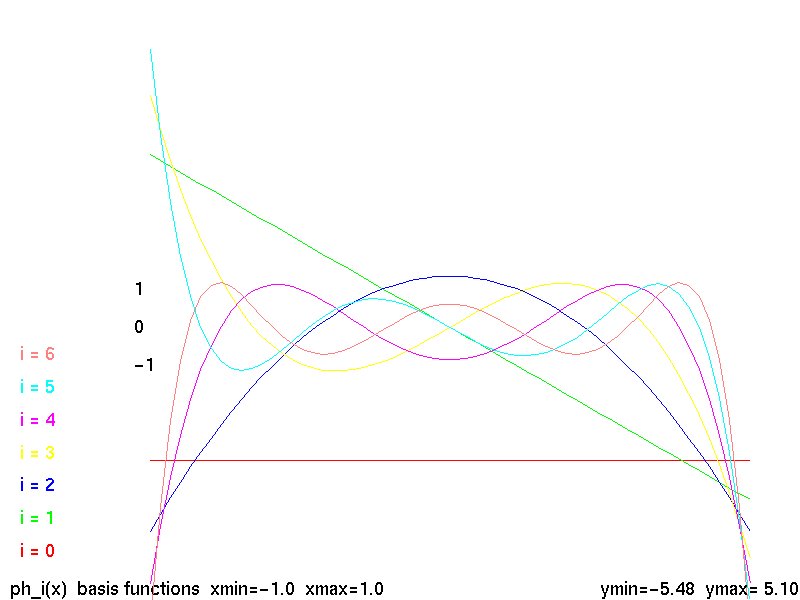

Supplemental L33a, Lagrange fit triangle

Supplemental L34, Formats, reading

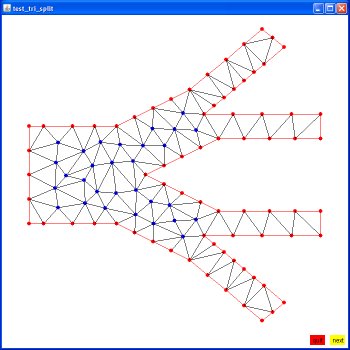

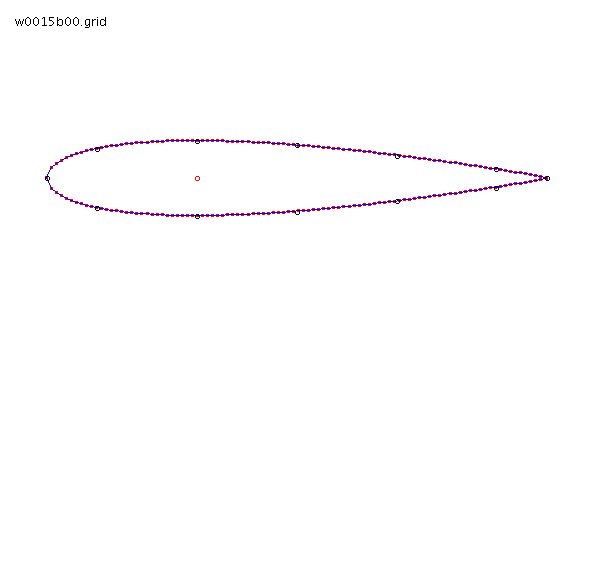

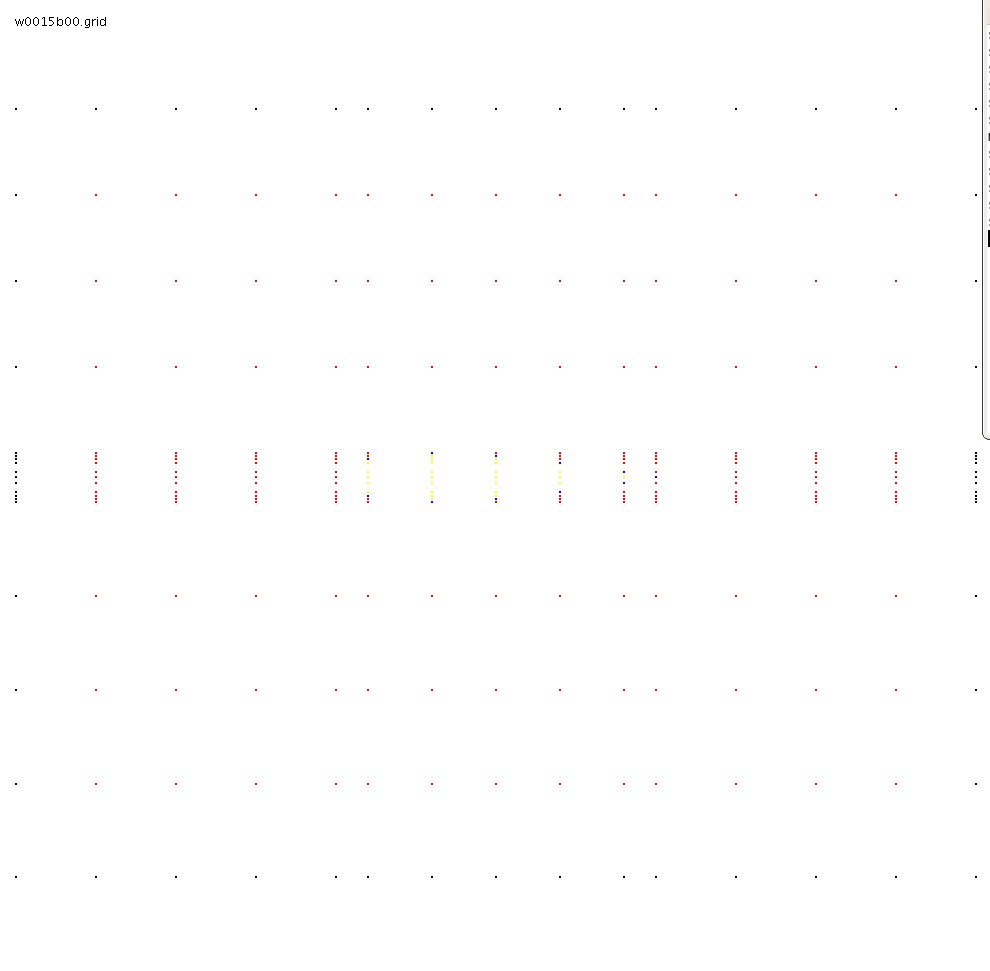

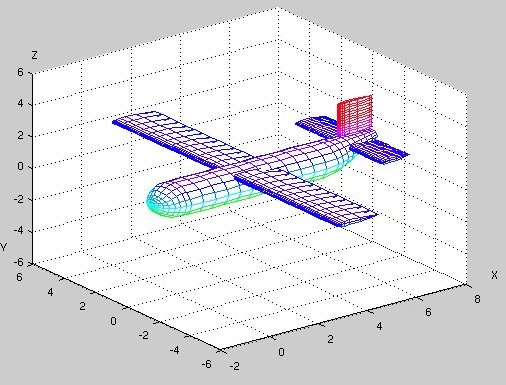

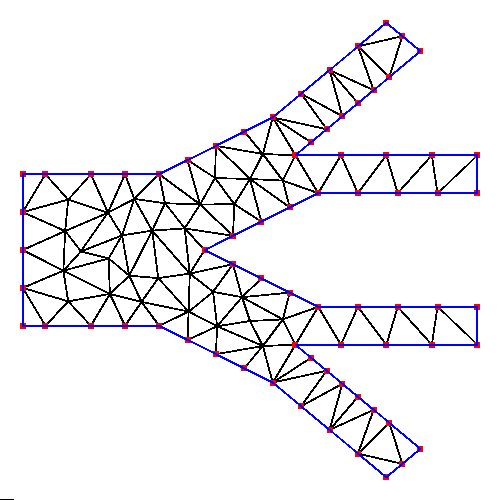

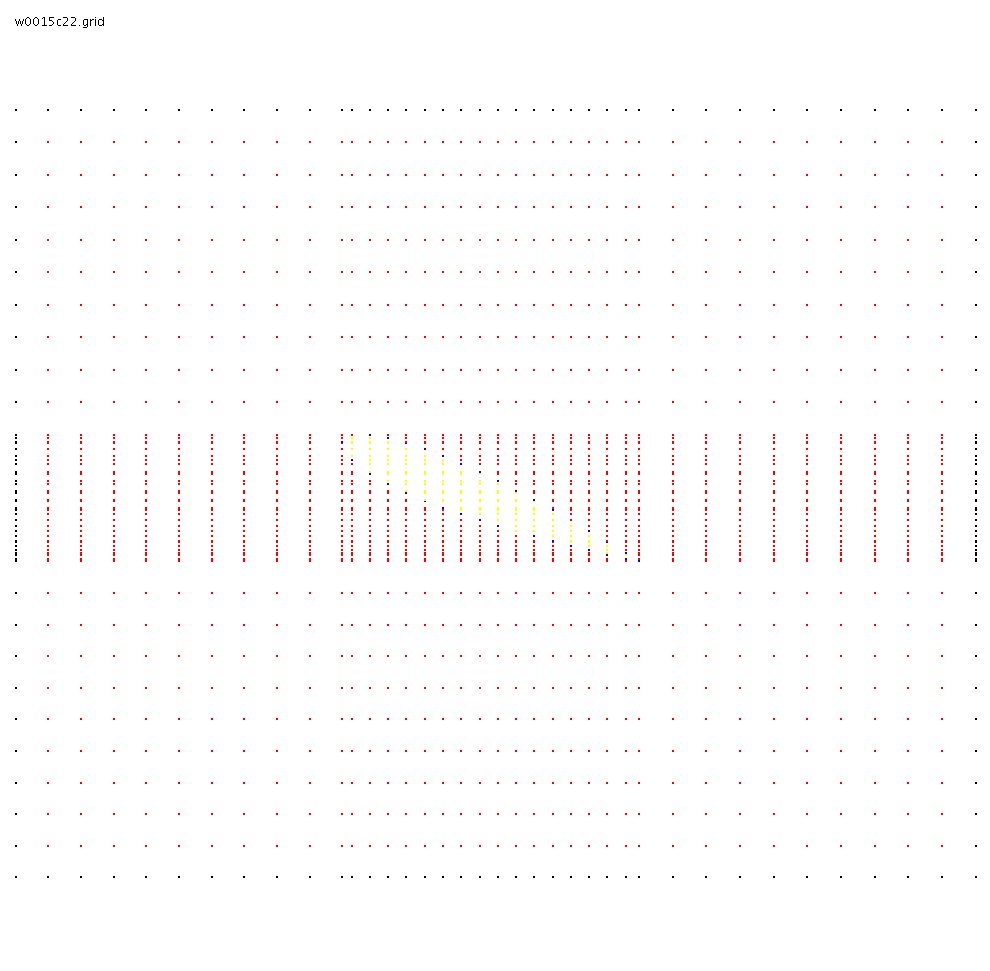

Lecture 28c, fem_50 case study

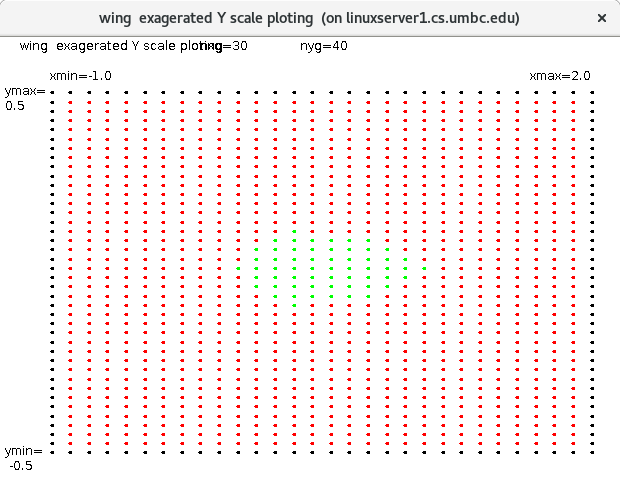

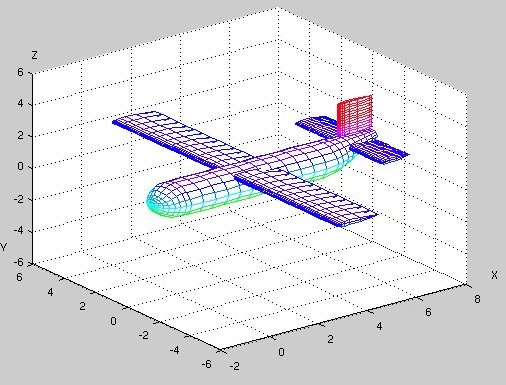

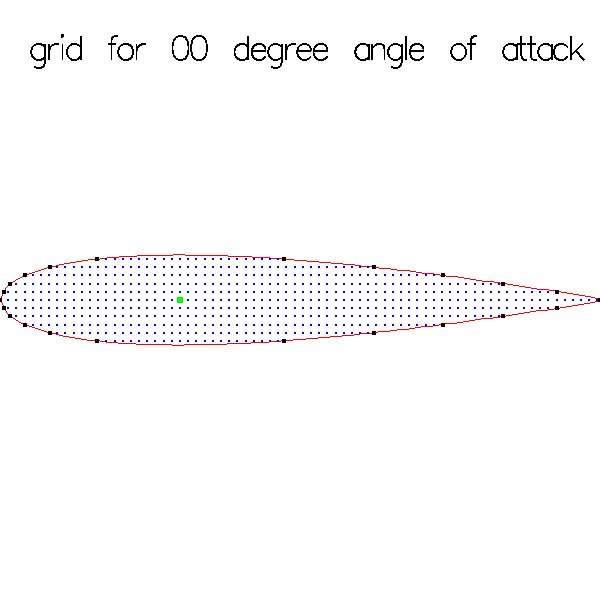

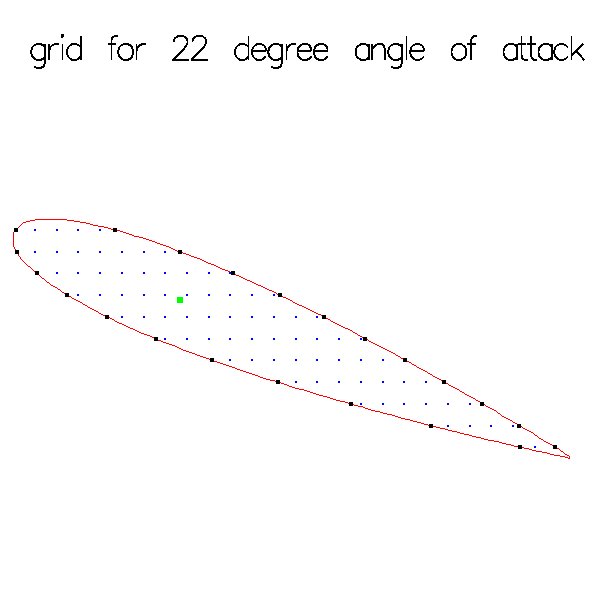

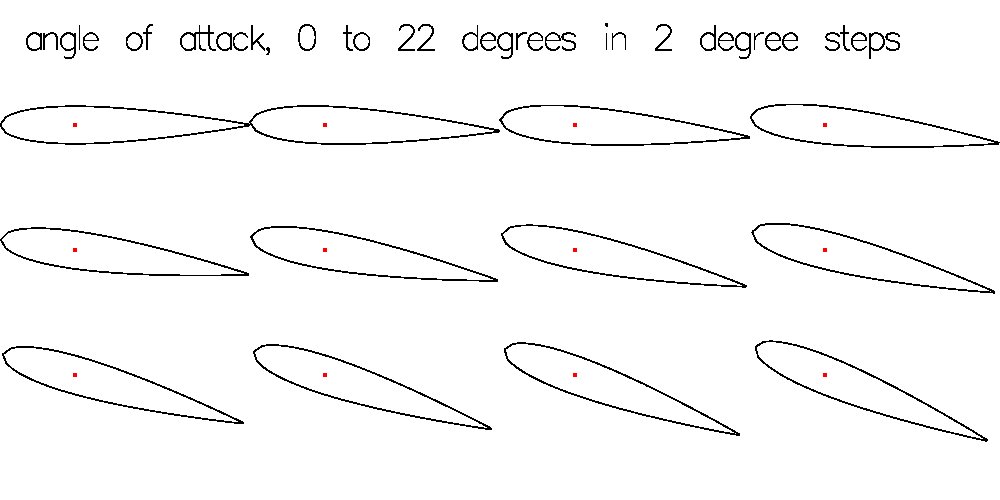

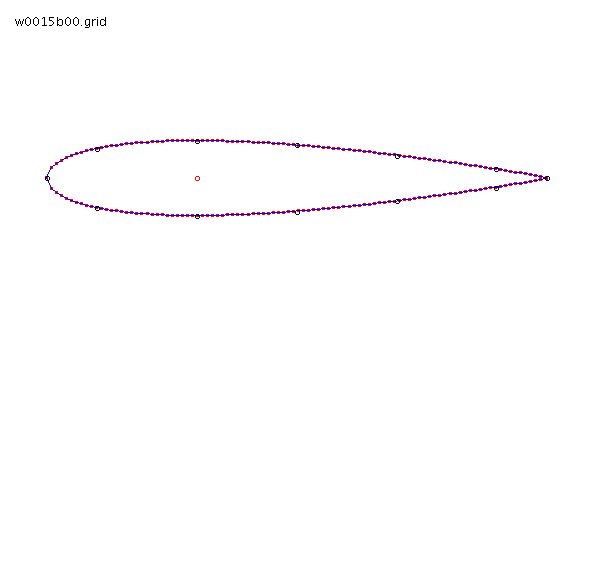

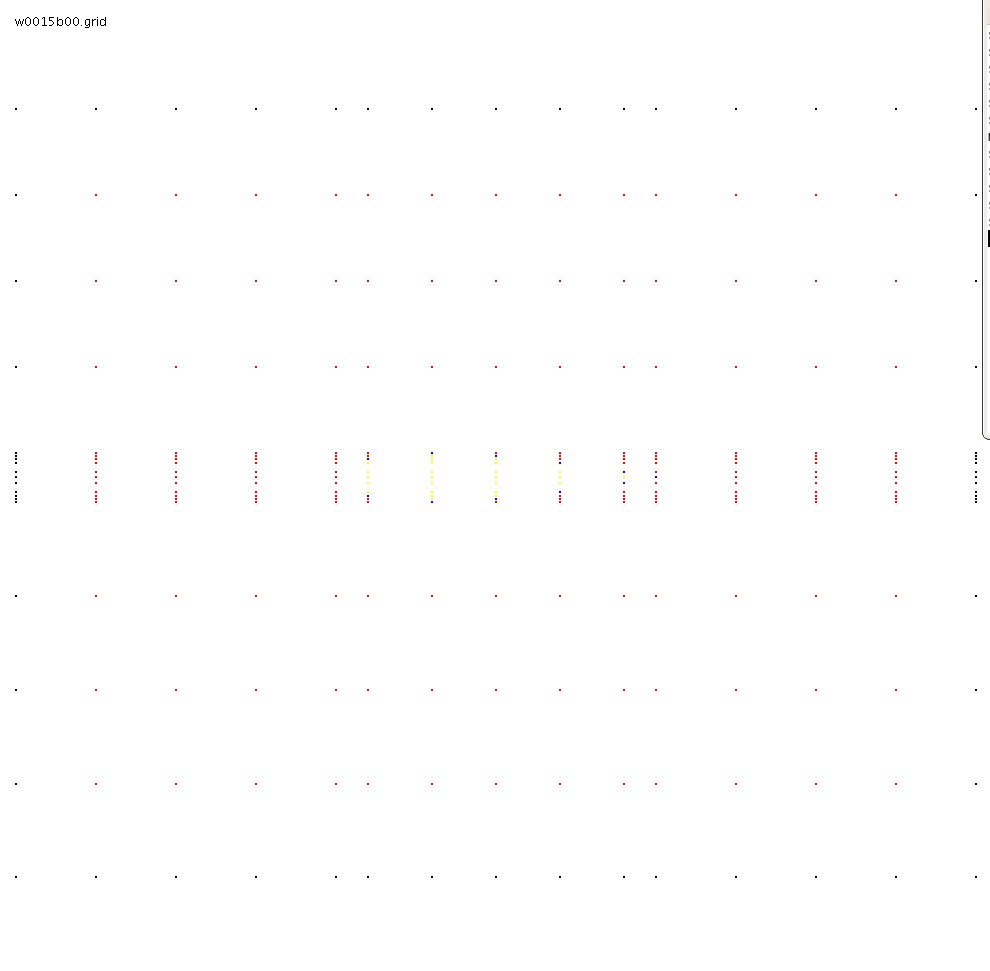

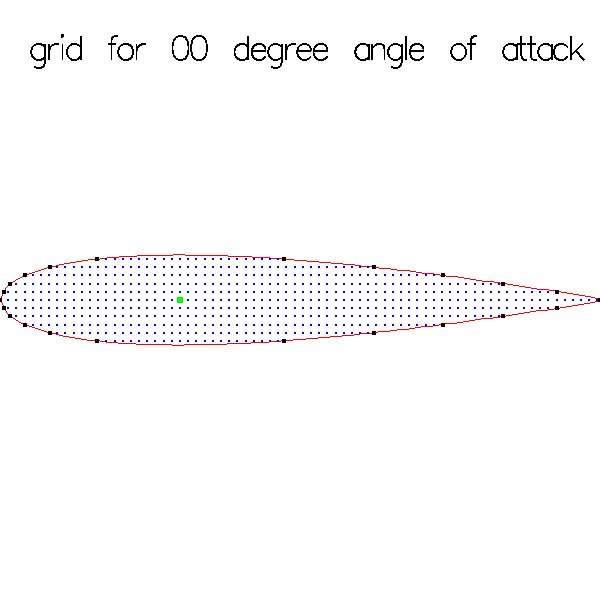

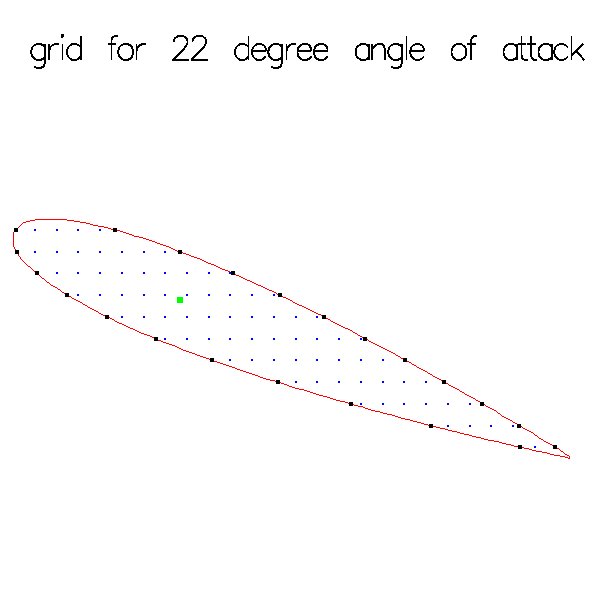

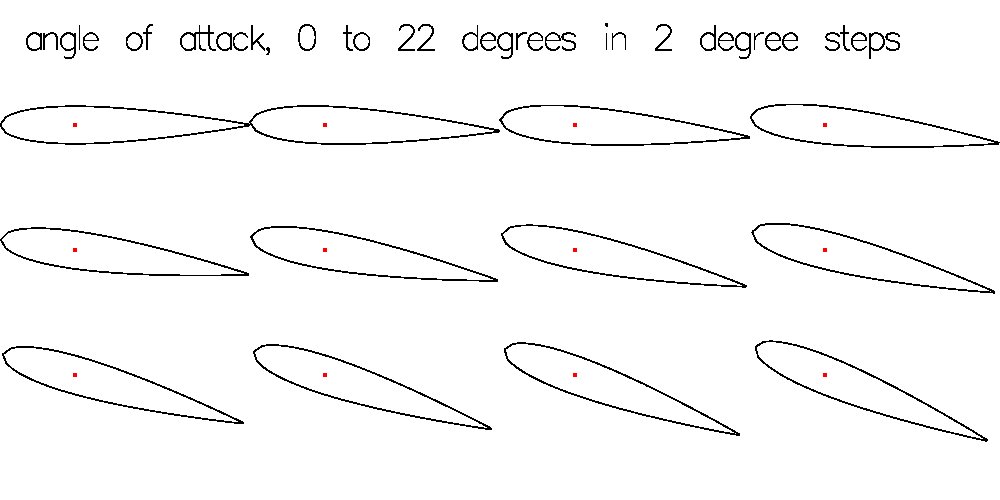

Supplemental L35, Navier Stokes Airfoil Simulation

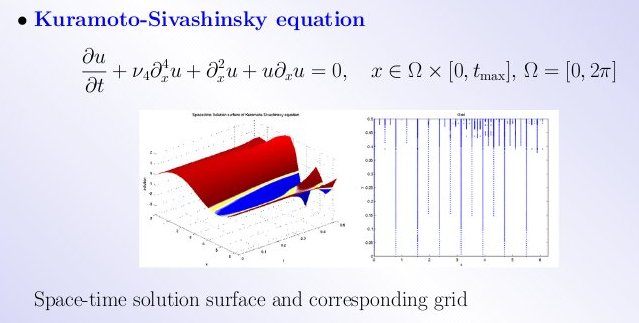

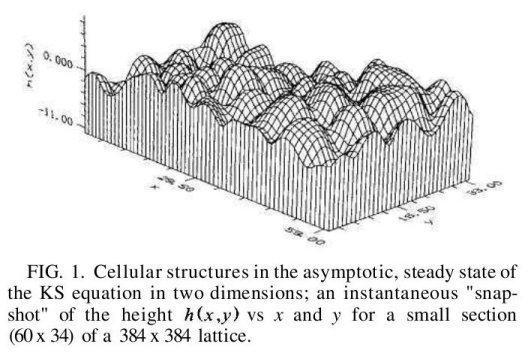

Supplemental L36, Some special PDE's

Supplemental L36a, Special discretization, non uniform

Supplemental L37, Vaious utility

Supplemental L38, Open Tutorial on LaTeX

Supplemental L39, Tutorial on Numerical Solution of Differential Equations

Supplemental L40, Unique Numerical Solution of Differential Equations

Supplemental L41, Numerical solving AC circuits

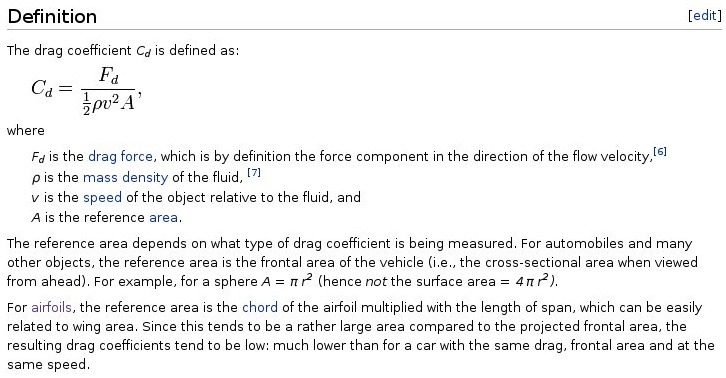

Supplemental Airfoil lift and drag coefficients

Supplemental Continuum Hypothesis

openMP parallel computing

Supplemental Functional programming

Other Links

Introduction:

Hello, my name is Jon Squire and I have been using computers

to solve numerical problems since 1959. I have about 1 million

lines of source code, in more than 15 languages,

written over more than 50 years.

How can that be? Check the numerical computation:

1,000,000/50 years is 20,000 lines per year.

20,000/200 working days per year is 100 lines per working day.

Link to file type, file count, line count.

With a lot of reuse, cut-and-paste, same programs and

data files including scripts for many languages on many

operating systems, easy.

On a job, 20,000/(50 weeks*5 days per week) is 80 lines per day.

80/8 hours is 10 lines per hour. You can do that.

You may not save every line you type. sad.

Overview:

You will be writing 6 small programs and a project in a language

of your choice.

Full details and sample code in a few lnguages will be provided.

You will always have a weekend between a homework assignment

and the due date.

You may use whatever language or languages you like and you

may experiment with other languages. Examples will be provided

in C, Java, Python, Ruby, Matlab and others. You will see many

languages including Fortran, Ada, Lisp, Pascal, Delphi, Scheme...

The point is that the "syntactic sugar" of any language does

not mean much. You may sometime convert code from some language

into a language you like better.

I have found some programs that I wrote can run faster in

Java, Python, Ada, Fortran, Matlab than they run in optimized C.

Well, the Python and Matlab use efficient library routines

that are written in Fortran. We will cover threads and

multiprocessor HPC concepts. There is a full course, CMSC 483

Parallel and Distributed processing where you get to program

a multiprocessor.

You will be exposed to toolkits. Very valuable to help you

produce more and better software with less effort. Over time

you may develop a toolkit to help others. Toolkits are

available for numerical computation, graphics, AI, robotics,

any many other areas.

Read the syllabus.

In this course there may be no exactly correct answer.

This lecture will provide the definitions and the intuition for

absolute error

relative error

round off error

truncation error

Then how to call intrinsic function and elementary functions.

Some terms will be used without comment in the rest of the course.

Learn them now. You should run the sample code to increase your

understanding and belief.

"Absolute error" A positive number, the difference between the

exact (analytic) answer and the computed, approximate, answer.

"Relative error" A positive number, the Absolute Error divided

by the exact answer. (Not defined if the exact answer is zero.)

Most numerical software is first tested with one or more

known solutions. Then, the software may be used on

problems where the solution is not known.

Given the exact answer is 1000 and the computed answer is 1001:

The absolute error is 1

The relative error is 1/1000 = 0.001

For a set of computed numbers there are three common absolute errors:

"Maximum Error" the largest error in the set.

"Average Error" the sum of the absolute errors divided by the number

in the set.

"RMS Error" the root mean square of the absolute errors.

sqrt(sum_over_set(absolute_error^2)/number_in_set)

Given the exact answer is 100 answers of 1.0 and we

computed 99 answers of 1.0 and one answer of 101.0:

The maximum error is 100.0 101.0 - 1.0

The average error is 1.0 100.0/100

The RMS error is 10.0 sqrt(100.0*100.0/100)

In some problems the main concern is the maximum error, yet

the RMS error is often the best intuitive measure of error.

Generally much better intuitive measure than average error.

Almost all Numerical Computation arithmetic is performed using

IEEE 754-1985 Standard for Binary Floating-Point Arithmetic.

The two formats that we deal with in practice are the 32 bit and

64 bit formats. You need to know how to get the format you desire

in the language you are programming. Complex numbers use two values.

older

C Java Fortran 95 Fortran Ada 95 MATLAB Python R

------ ------ ---------------- ---------- ------------ -------- --------

32 bit float float real real float N/A float

64 bit double double double precision real*8 long_float 'default' 'default'

complex

32 bit 'none' 'none' complex complex complex N/A N/A

64 bit 'none' 'none' double complex complex*16 long_complex 'default' 'default'

'none' means not provided by the language (may be available as a library)

N/A means not available, you get the default.

IEEE Floating-Point numbers are stored as follows:

The single format 32 bit has

1 bit for sign, 8 bits for exponent, 23 bits for fraction

about 6 to 7 decimal digits

The double format 64 bit has

1 bit for sign, 11 bits for exponent, 52 bits for fraction

about 15 to 16 decimal digits

Some example numbers and their bit patterns:

decimal

stored hexadecimal sign exponent fraction significand

The "1" is not stored |

|

31 30....23 22....................0 |

1.0

3F 80 00 00 0 01111111 00000000000000000000000 1.0 * 2^(127-127)

0.5

3F 00 00 00 0 01111110 00000000000000000000000 1.0 * 2^(126-127)

0.75

3F 40 00 00 0 01111110 10000000000000000000000 1.1 * 2^(126-127)

0.9999995

3F 7F FF FF 0 01111110 11111111111111111111111 1.1111* 2^(126-127)

0.1

3D CC CC CD 0 01111011 10011001100110011001101 1.1001* 2^(123-127)

63 62...... 52 51 ..... 0

1.0

3F F0 00 00 00 00 00 00 0 01111111111 000 ... 000 1.0 * 2^(1023-1023)

0.5

3F E0 00 00 00 00 00 00 0 01111111110 000 ... 000 1.0 * 2^(1022-1023)

0.75

3F E8 00 00 00 00 00 00 0 01111111110 100 ... 000 1.1 * 2^(1022-1023)

0.9999999999999995

3F EF FF FF FF FF FF FF 0 01111111110 111 ... 1.11111* 2^(1022-1023)

0.1

3F B9 99 99 99 99 99 9A 0 01111111011 10011..1010 1.10011* 2^(1019-1023)

|

sign exponent fraction |

before storing subtract bias

Note that an integer in the range 0 to 2^23 -1 may be represented exactly.

Any power of two in the range -126 to +127 times such an integer may also

be represented exactly. Numbers such as 0.1, 0.3, 1.0/5.0, 1.0/9.0 are

represented approximately. 0.75 is 3/4 which is exact.

Some languages are careful to represent approximated numbers

accurate to plus or minus the least significant bit.

Other languages may be less accurate.

Now for some experiments for you to run an the computer of your choice

in the language of your choice.

In single precision floating point print:

10^10 * sum( 1.0, 10^-7, -1.0) answer should be 1,000

10^10 * sum( 1.0, 0.5*10^-7, -1.0) answer should be 500

expression 10^10 * ( 1.0 + 10^-7 -1.0) answer should be 1,000

10000000000.0*(1.0+10.0E-7-1.0)

The order of addition is important.

Adding a small number to 1.0 may not change the value.

This small number is less that what we call "epsilon".

error_demo1.adb Ada source code

error_demo1_ada.out output

error_demo1.c C source code

error_demo1_c.out output

epsilon.c C double and float source code

epsilon_c.out double and float output

error_demo1.f90 Fortran source code

error_demo1_f90.out output

error_demo1.java Java source code

error_demo1_java.out output

error_demo1.py3 Python source code

error_demo1_py3.out output

error_demo1.m Matlab source code

error_demo1_m.out output

error_demo1.r R source code

error_demo1_r.out output

Remember 10^-7 is 0.0000001, not a power of 2.

Thus, can not be stored exactly.

1.0e-16 = 10^-16 not exact because not a power of 2

Also, floating point arithmetic is performed in registers with

more bits than can be stored. Thus, as shown below, you may

get more precision than you expect. Do not count on it.

epsilon.c showing more precision source code

epsilon.out showing forced store output

We will cover, in the course, methods of reducing error in areas:

statistics sum x^2 - (sum x)^2 vs sum(x-mean)

polynomial definition, evaluation Horner's rule vs x^N

approximation, e.g. sin(x) truncation error vs roundoff error

derivatives

indefinite integral, definite integral, area

partial differential equations

and show sample code in many languages:

Ada 95, C, Fortran 95, Java, Python, MatLab and R

Error accumulation when computing standard deviation.

Subtracting large numbers loses significant digits.

123456 - 123455 = 1.00000 only 1 significant digit

With only 6 digits representable

123456.00 - 123455.99 = 1.00000 yet should be 0.010000

Two ways of computing the standard deviation are shown,

with the errors indicated for various sets of data:

Cases:

stddev(1, 2,..., 100)

stddev(10, 20,..., 1,000) should just scale

stddev(100, 200,..., 10,000)

stddev(1,000, 2,000,..., 100,000)

stddev(10,000, 20,000,..., 1,000,000)

stddev(10,001, 10,002,..., 10,100) should be same as first

See computed values in .out files:

sigma_error.c

sigma_error_c.out

sigma_error.f90

sigma_error_f90.out

sigma_error.adb

sigma_error_ada.out

sigma_error.java float

sigma_error_java.out

sigma_errord.java double

sigma_errord_java.out

sigma_error.py

sigma_error_py.out

sigma_error.m

sigma_error_m.out

Using different algorithms, expect slightly different results.

Using different types, float or double, may get very different results.

Iteration needing uniform step size should use multiplication,

not addition as shown in:

bad_roundoff.c

bad_roundoff_c.out

The elementary functions are sin, cos, tan, exp, sqrt

and inverse forms asin, acos, atan, log, power

and reciprocal forms cosecant, secant, cotangent,

and hyperbolic forms sinh, cosh, tanh,

and inverse hyperbolic forms asinh, acosh, atanh, ...

The intrinsic functions are built into the language, e.g. abs (sometimes)

Note that Fortran 95 and Java need no extra information to get the

real valued elementary functions. "C" needs #include <math.h>

while Ada 95 needs 'with' and 'use' Ada.Numerics.Elementary_functions .

Note that Ada 95 overloads the function names and provides

single precision as 'float' and double precision as 'long_float'.

ef_ada.adb Ada 95, float and long float

Note that Fortran 95, java, python, overload the function names and provides

the same function name for single and double precision.

Fortran 95 names single precision as 'real' and

double precision as 'double precision'.

ef_f95.f90 Fortran 95, real and double

Note that Java provides double precision as 'double' for variables and constants.

ef_java.java Java, only double

Hyper.java create your own hyperbolic

Note that C provides double precision only as 'double' and constants.

ef_c.c C, only double is available

ef_c.out nan means not a number, bad input

Note that MATLAB has the most functions and all functions are

automatically double or double complex as needed.

ef_matlab.m MATLAB everything

ef_matlab.out automatic complex

Note that Python needs import math and can list available functions

test_math.py Python many, automatic conversion

test_math_py.out Python output

test_math.py3 Python many, automatic conversion

test_math_py3.out Python output

Just for fun: Power of 2 "tree"

A small program that prints epsilon, 'eps' and the largest and

smallest floating point numbers, run on one machine, shows:

float_sig.c running, may die

type float has about six significant digits

eps is the smallest positive number added to 1.0f that changes 1.0f

type float eps= 5.960464478E-08

type float small= 1.401298464E-45

this could be about 1.0E-38, above shows un-normalized IEEE floating point

type float large= 1.701411835E+38

type double has about fifteen significant digits

type double eps= 1.11022302462515654E-16

type double small=4.940656458E-324

this could be about 1.0E-304, above shows un-normalized IEEE floating point

type double large=8.988465674E+307

The program is float_sig.c

Using 2^n = 10^k/3.32 and n bits has a largest number 2^n -1 and

k digits has a largest number 10^k -1. 24 bits would be 7 digits.

53 bits would be 16 digits, the more optimistic significant

digits for IEEE floating point.

Notes: I have chosen to keep most WEB pages plain text so that

they may be read by all browsers. Some referenced pages may

be .pdf, .jpg, .gif, .ps or other file types.

Many math books use many Greek letters. You may want to refer

to various alphabets.

If you want to stretch your concept of numbers,

check out the Continuum Hypothesis

Beware of easy methods of printing, related to epsilon

eps.py Python2 "print" Python3 "print( )"

eps.f90 Fortran "print"

You may use any language you want. I do not run your code.

Turn in paper in class or submit your source code and output for grading.

It is OK to ask for an example in a language I have not

provided. For various "C", I just provide .h and .c.

You may add to .h files for C++

#ifdef __cplusplus

extern "C" {

#endif

// header file function prototypes

#ifdef __cplusplus

}

#endif

You may use Fortran and C object files in many

languages, including Java, Python, Matlab, etc.

Last updated 12/13/2021

Some physical problems are easy to solve numerically using just

the basic equations of physics. Other problems may be very difficult.

Consider a specific model rocket with a specific engine.

Given all the data we can find, compute the maximum altitude

the rocket can obtain. Yes, this is rocket science.

Most physics computation is performed with metric units.

units and equations

Estes Alpha III

Length 12.25 inches = 0.311 meters

Diameter 0.95 inches = 0.0241 meters

Body area 0.785 square inches = 0.506E-3 square meters cross section

Cd of body 0.45 dimensionless

Fins area 7.69 square inches = 0.00496 square meters total for 3 fins

Cd of fins 0.01 dimensionless

Weight/mass 1.2 ounce = 0.0340 kilogram without engine

Engine 0.85 ounce = 0.0242 kilogram initial engine mass

Engine 0.33 ounce = 0.0094 kilogram final engine mass

Estes Alpha III

Length 12.25 inches = 0.311 meters

Diameter 0.95 inches = 0.0241 meters

Body area 0.785 square inches = 0.506E-3 square meters cross section

Cd of body 0.45 dimensionless

Fins area 7.69 square inches = 0.00496 square meters total for 3 fins

Cd of fins 0.01 dimensionless

Weight/mass 1.2 ounce = 0.0340 kilogram without engine

Engine 0.85 ounce = 0.0242 kilogram initial engine mass

Engine 0.33 ounce = 0.0094 kilogram final engine mass

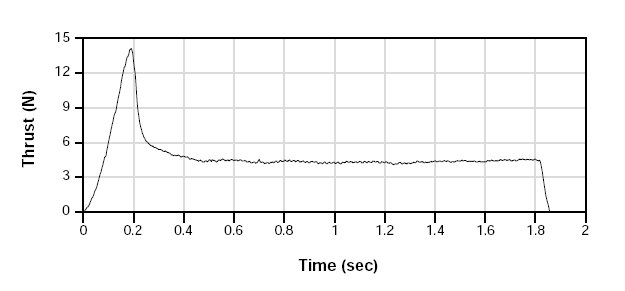

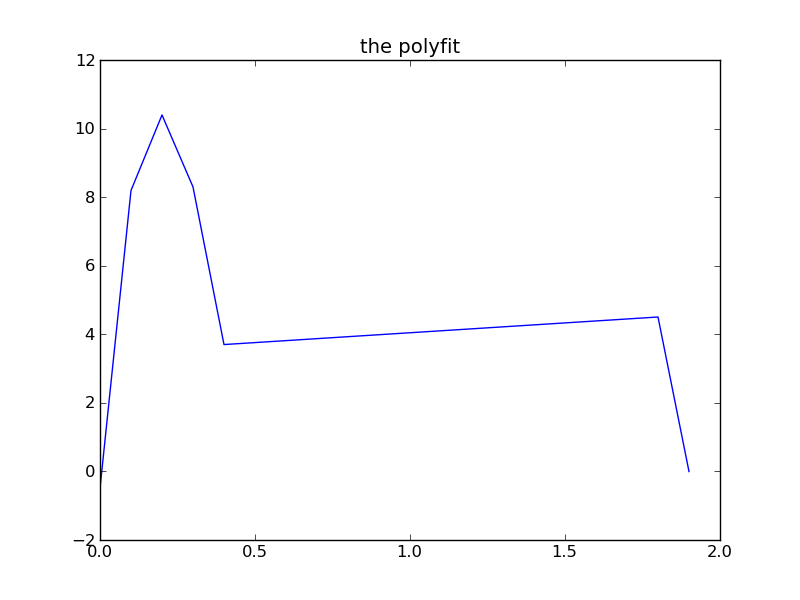

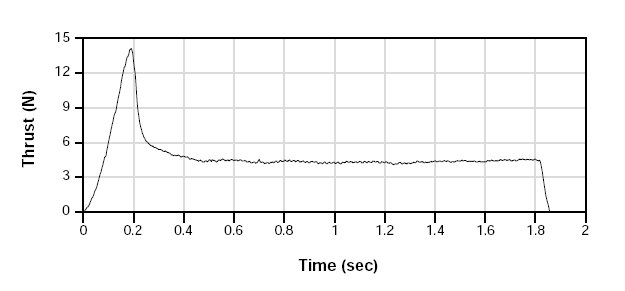

Thrust curve

Total impulse 8.82 newton seconds (area under curve)

Peak thrust 14.09 newton

Average thrust 4.74 newton

Burn time 1.86 second

Initial conditions:

t = 0 time

s = 0 height

v = 0 velocity

a = 0 acceleration

F = 0 total force not including gravity

m = 0.0340 + 0.0242 mass

i = 1 start with some thrust

Basic physics: (the order is needed for hw2)

(in while loop, start with time=0.1 thrust=6)

Fd = Cd*Rho*A*v^2 /2 two equations, body and fins

Fd is force of drag in newtons in opposite direction of velocity

Cd is coefficient of drag, dimensionless (depends on shape)

Rho is density of air, use 1.293 kilograms per meter cubed

A is total surface area in square meters

v is velocity in meters per second (v^2 is velocity squared)

Fg = m*g Fg is force of gravity toward center of Earth

m is mass in kilograms

g is acceleration due to gravity, 9.80665 meters per second squared

Ft = value from thrust curve array at this time, you enter this data.

index i, test i>18 and set Ft = 0.0 (time > 1.8 seconds)

Do not copy! This is part of modeling and simulation.

start with first non zero thrust.

F = Ft - (Fd body + Fd fins + Fg) resolve forces

a = F/m a is acceleration we will compute from knowing

F, total force in newtons and

m is mass in kilograms of body plus engine mass that changes

dv = a*dt dv is velocity change in meters per second in time dt

a is acceleration in meters per second squared

dt is delta time in seconds

v = v+dv v is new velocity after the dt time step

(v is positive upward, stop when v goes negative)

v+ is previous velocity prior to the dt time step

dv is velocity change in meters per second in time dt

ds = v*dt ds is distance in meters moved in time dt

v is velocity in meters per second

dt is delta time in seconds

s = s+ds s is new position after the dt time step

s+ is previous position prior to the dt time step

ds is distance in meters moved in time dt

m = m -0.0001644*Ft apply each time step

print t, s, v, a, m

t = t + dt time advances

i = i + 1

if v < 0 quit, else loop

Ft is zero at and beyond 1.9 seconds, rocket speed decreases

Homework Problem 1:

Write a small program to compute the maximum height when

the rocket is fired straight up. Assume no wind.

In order to get reasonable consistency of answers, use dt = 0.1 second

Every student will have a different answer.

Some where near 350 meters that is printed on the box. +/- 30%

Any two answers that are the same, get a zero.

Suggestion: Check the values you get from the thrust curve by

simple summation. Using zero thrust at t=0 and t=1.9 seconds, sampling

at 0.1 second intervals, you should get a sum of about 90 . Adjust

values to make it this value in order to get reasonable consistency of

answers.

The mass changes as the engine burns fuel and expels mass

at high velocity. Assume the engine mass decreases from 0.0242 kilograms

to 0.0094 grams proportional to thrust. Thus the engine mass is

decreased each 0.1 second by the thrust value at that time times

(0.0242-0.0094)/90.0 = 0.0001644 . mass=mass-0.0001644*thrust at this time.

"thrust" = 0.0 at time t=0.0 seconds

"thrust" = 6.0 at time t=0.1 seconds.

"thrust" = 0.0 at and after 1.9 seconds. Important, rocket is still climbing.

Check that the mass is correct at the end of the flight. 0.0340+0.0094

Published data estimates a height of 1100 feet, 335 meters to

1150 feet, 350 meters.

Your height will vary.

Your homework is to write a program that prints every 0.1 seconds:

the time in seconds

height in meters

velocity in meters per second

acceleration in meters per second squared

force in newtons

mass in kilograms (just numbers, all on one line)

and stop when the maximum height is reached.

Think about what you know. It should become clear that at each

time step you compute the body mass + engine mass, the three

forces combined into Ft-Fd_body-Fd_fins-Fg, the acceleration, the velocity

and finally the height. Obviously stop without printing if

the velocity goes negative (the rocket is coming down).

The program has performed numerical double integration. You might

ask "How accurate is the computation?"

Well, the data in the problem statement is plus or minus 5%.

We will see later that the computation contributed less error.

A small breeze would deflect the rocket from vertical and

easily cause a 30% error. We should say that:

"the program computed the approximate maximum height."

Additional cases you may wish to explore.

What is the approximate maximum height without any drag,

set Rho to 0.0 for a vacuum.

What is the approximate maximum height using dt = 0.05 seconds.

What is the approximate maximum height if launched at 45 degrees

rather than vertical, resolve forces in horizontal and vertical.

For this type of rocket to have stable flight the center of gravity

of the rocket must be ahead of the center of pressure. The center

of pressure is the centroid of the area looking at the side of

the rocket. This is why the fins extend out the back and the

engine mass is up in the rocket.

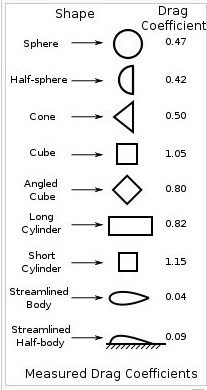

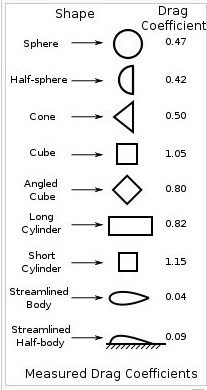

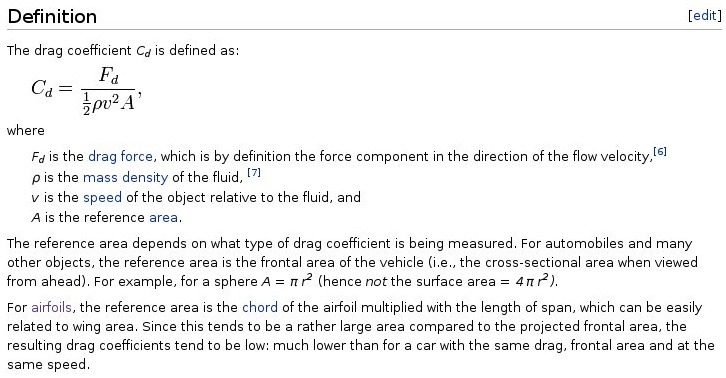

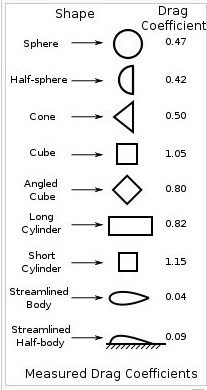

The shape of a moving object determines its drag coefficient, Cd.

The drag coefficient combined with velocity and area of the

moving object determine the drag force.

Fd = 1/2 * Cd * density * area * velocity^2

A few shapes and their respective drag coefficients are:

Thrust curve

Total impulse 8.82 newton seconds (area under curve)

Peak thrust 14.09 newton

Average thrust 4.74 newton

Burn time 1.86 second

Initial conditions:

t = 0 time

s = 0 height

v = 0 velocity

a = 0 acceleration

F = 0 total force not including gravity

m = 0.0340 + 0.0242 mass

i = 1 start with some thrust

Basic physics: (the order is needed for hw2)

(in while loop, start with time=0.1 thrust=6)

Fd = Cd*Rho*A*v^2 /2 two equations, body and fins

Fd is force of drag in newtons in opposite direction of velocity

Cd is coefficient of drag, dimensionless (depends on shape)

Rho is density of air, use 1.293 kilograms per meter cubed

A is total surface area in square meters

v is velocity in meters per second (v^2 is velocity squared)

Fg = m*g Fg is force of gravity toward center of Earth

m is mass in kilograms

g is acceleration due to gravity, 9.80665 meters per second squared

Ft = value from thrust curve array at this time, you enter this data.

index i, test i>18 and set Ft = 0.0 (time > 1.8 seconds)

Do not copy! This is part of modeling and simulation.

start with first non zero thrust.

F = Ft - (Fd body + Fd fins + Fg) resolve forces

a = F/m a is acceleration we will compute from knowing

F, total force in newtons and

m is mass in kilograms of body plus engine mass that changes

dv = a*dt dv is velocity change in meters per second in time dt

a is acceleration in meters per second squared

dt is delta time in seconds

v = v+dv v is new velocity after the dt time step

(v is positive upward, stop when v goes negative)

v+ is previous velocity prior to the dt time step

dv is velocity change in meters per second in time dt

ds = v*dt ds is distance in meters moved in time dt

v is velocity in meters per second

dt is delta time in seconds

s = s+ds s is new position after the dt time step

s+ is previous position prior to the dt time step

ds is distance in meters moved in time dt

m = m -0.0001644*Ft apply each time step

print t, s, v, a, m

t = t + dt time advances

i = i + 1

if v < 0 quit, else loop

Ft is zero at and beyond 1.9 seconds, rocket speed decreases

Homework Problem 1:

Write a small program to compute the maximum height when

the rocket is fired straight up. Assume no wind.

In order to get reasonable consistency of answers, use dt = 0.1 second

Every student will have a different answer.

Some where near 350 meters that is printed on the box. +/- 30%

Any two answers that are the same, get a zero.

Suggestion: Check the values you get from the thrust curve by

simple summation. Using zero thrust at t=0 and t=1.9 seconds, sampling

at 0.1 second intervals, you should get a sum of about 90 . Adjust

values to make it this value in order to get reasonable consistency of

answers.

The mass changes as the engine burns fuel and expels mass

at high velocity. Assume the engine mass decreases from 0.0242 kilograms

to 0.0094 grams proportional to thrust. Thus the engine mass is

decreased each 0.1 second by the thrust value at that time times

(0.0242-0.0094)/90.0 = 0.0001644 . mass=mass-0.0001644*thrust at this time.

"thrust" = 0.0 at time t=0.0 seconds

"thrust" = 6.0 at time t=0.1 seconds.

"thrust" = 0.0 at and after 1.9 seconds. Important, rocket is still climbing.

Check that the mass is correct at the end of the flight. 0.0340+0.0094

Published data estimates a height of 1100 feet, 335 meters to

1150 feet, 350 meters.

Your height will vary.

Your homework is to write a program that prints every 0.1 seconds:

the time in seconds

height in meters

velocity in meters per second

acceleration in meters per second squared

force in newtons

mass in kilograms (just numbers, all on one line)

and stop when the maximum height is reached.

Think about what you know. It should become clear that at each

time step you compute the body mass + engine mass, the three

forces combined into Ft-Fd_body-Fd_fins-Fg, the acceleration, the velocity

and finally the height. Obviously stop without printing if

the velocity goes negative (the rocket is coming down).

The program has performed numerical double integration. You might

ask "How accurate is the computation?"

Well, the data in the problem statement is plus or minus 5%.

We will see later that the computation contributed less error.

A small breeze would deflect the rocket from vertical and

easily cause a 30% error. We should say that:

"the program computed the approximate maximum height."

Additional cases you may wish to explore.

What is the approximate maximum height without any drag,

set Rho to 0.0 for a vacuum.

What is the approximate maximum height using dt = 0.05 seconds.

What is the approximate maximum height if launched at 45 degrees

rather than vertical, resolve forces in horizontal and vertical.

For this type of rocket to have stable flight the center of gravity

of the rocket must be ahead of the center of pressure. The center

of pressure is the centroid of the area looking at the side of

the rocket. This is why the fins extend out the back and the

engine mass is up in the rocket.

The shape of a moving object determines its drag coefficient, Cd.

The drag coefficient combined with velocity and area of the

moving object determine the drag force.

Fd = 1/2 * Cd * density * area * velocity^2

A few shapes and their respective drag coefficients are:

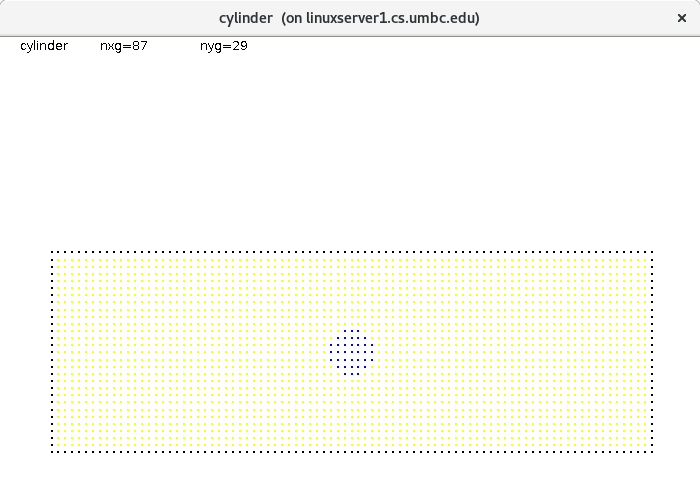

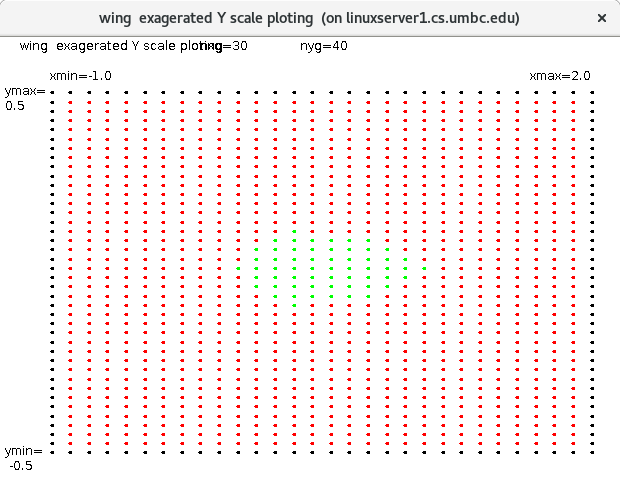

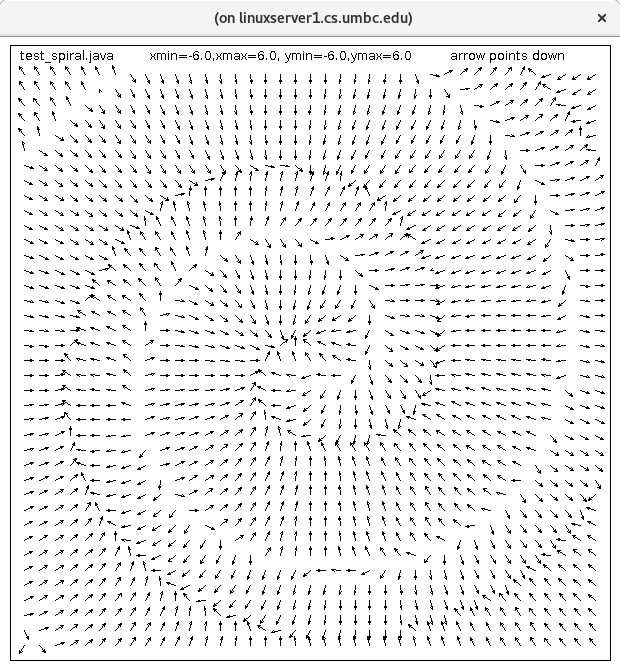

We will cover numeric computation of flow.

We will cover numeric computation of flow.

For more physics equations, units and conversion click here.

For more physics equations, center of mass, click here.

Homework 1 assignment

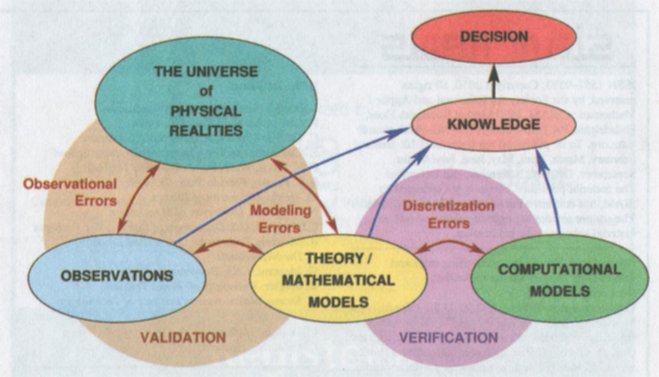

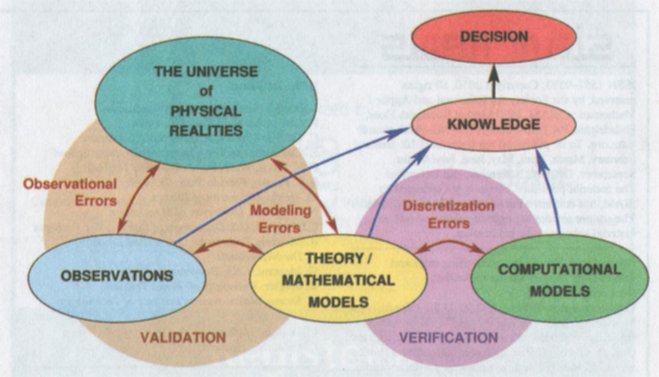

What you are doing is called "Modeling and Simulation", possibly

valuable buzz words on a job interview. Also "rocket scientist".

Observing what you are modeling may help.

For more physics equations, units and conversion click here.

For more physics equations, center of mass, click here.

Homework 1 assignment

What you are doing is called "Modeling and Simulation", possibly

valuable buzz words on a job interview. Also "rocket scientist".

Observing what you are modeling may help.

Simultaneous equations are multiple equations involving the same

variables. In general, to get a unique solution we need the

same number of equations as the number of unknown variables,

and the equations mutually linearly independent.

A sample set of three equations in three unknowns is:

eq1: 2*x + 3*y + 2*z = 13

eq2: 3*x + 2*y + 3*z = 17

eq3: 4*x - 2*y + 2*z = 12

One systematic solution method is called the Gauss-Jordan reduction.

We will reduce the three equations such that eq1: has only x,

eq2: has only y and eq3: has only z making the constant yield

the solution. Operations are always based on the latest version

of each equation. The numeric solution will perform the same

operations on a matrix.

Reduce the coefficient of x in the first equation to 1, dividing by 2

eq1: 1*x + 1.5*y + 1*z = 6.5

Eliminate the variable x from eq2: by subtracting eq2 coefficient of

x times eq1:

eq2: becomes eq2: - 3* eq1:

eq2: (3-3)*x + (2-4.5)*y + (3-3)*z = (17-19.5) then simplifying

eq2: 0*x - 2.5*y + 0*z = -2.5

Eliminate the variable x from eq3: by subtracting eq3 coefficient of

x times eq1:

eq3: becomes eq3: - 4* eq1:

eq3: (4-4)*x (-2 -6)*y + (2-4)*z = (12-26) then becomes

eq3: 0*x - 8*y - 2*z = -14

The three equations are now

eq1: 1*x + 1.5*y + 1*z = 6.5

eq2: 0*x - 2.5*y + 0*z = - 2.5

eq3: 0*x - 8.0*y - 2*z = -14.0

Reduce the coefficient of y in eq2: to 1, dividing by -2.5

eq2: 0*x + 1*y + 0*z = 1

Eliminate the variable y from eq1: by subtracting eq1 coefficient of y times eq2:

eq1: becomes eq1: -1.5* eq2:

eq1: 1*x + 0*y + 1*z = (6.5-1.5) then becomes

eq1: 1*x + 0*y + 1*z = 5

Eliminate the variable y from eq3: by subtracting eq3 coefficient of y times eq2:

eq3: becomes eq3: -8* eq2:

eq3: 0*x + 0*y - 2*z = (-14 +8) then becomes

eq3: 0*x + 0*y - 2*z = -6

The three equations are now

eq1: 1*x + 0*y + 1*z = 5

eq2: 0*x + 1*y + 0*z = 1

eq3: 0*x + 0*y - 2*z = -6

Reduce the coefficient of z in eq3: to 1, dividing by -2

eq3: 0*x + 0*y + 1*z = 3

Eliminate the variable z from eq1: by subtracting eq1 coefficient of z times eq2:

eq1: becomes eq1: -1* eq2:

eq1: 1*x + 0*y + 0*z = (5-3) then becomes

eq1: 1*x + 0*y + 0*z = 2

Eliminate the variable z from eq2: by subtracting eq2 coefficient of z times eq2:

eq2: becomes eq3: 0* eq2:

eq2: 0*x + 1*y + 0*z = 1

The three equations are now

eq1: 1*x + 0*y + 0*z = 2 or x = 2

eq2: 0*x + 1*y + 0*z = 1 or y = 1

eq3: 0*x + 0*y + 1*z = 3 or z = 3 the desired solution.

The numerical solution simply places the values in a matrix and uses the same

reductions shown above.

Given the equations: |A|*|X| = |Y| B = |AY|

eq1: 2*x + 3*y + 2*z = 13 | 2 3 2 | |x| |13|

eq2: 3*x + 2*y + 3*z = 17 | 3 2 3 |*|y|=|17|

eq3: 4*x - 2*y + 2*z = 12 | 4 -2 2 | |z| |12|

Create the matrix | 2 3 2 13 | having 3 rows and 4 columns

B = | 3 2 3 17 |

| 4 -2 2 12 |

The following code, using n=3 for these three equations, computes the

same desired solution as the manual method above.

for k=1:n

for j=k+1:n

B(k,j) = B(k,j)/B(k,k)

end

for i=1:n

if(i not k)

for j=1:n

B(i,j) = B(i,j) - B(i,k)*B(k,J)

Pivoting to avoid zero on diagonal and improve accuracy

Now we must consider the possible problem of B(k,k) being zero

for some value of k. It is rather obvious that the order of the

equations does not matter. The equations can be given in any

order and we get the same solution. Thus, simply interchange

any equation where we are about to divide by a zero B(k,k)

with some equation below that would not result in a zero B(k,k).

It turns out that we get better accuracy if we always pick

the equation that has the largest absolute value for B(k,k).

If the largest value turns out to be zero then there is no

unique solution for the set of equations.

We generally want numerical code to run efficiently and thus we

will not physically interchange the equations but rather keep

a row index array that tells us where the k th row is now.

The code for the final algorithm is given in the links below.

Note types of errors

test_simeq_small.c source code

test_simeq_small_c.out output

test_simeq_small.c results should be exactly X[0]=1.0, X[1]=-1.0

err is the error multiplying the solution, X, times matrix A*X=Y

a small change in data gives a big change in solution.

the big change is not indicated by the computed error.

decimal .835*X[0] + .667*X[1] = .168 Y[0]

decimal .333*X[0] + .266*X[1] = .067 Y[1]

flt-pt 0.835000*X[0] + 0.667000*X[1] = 0.168000

flt-pt 0.333000*X[0] + 0.266000*X[1] = 0.067000

initialization complete, solving

solution X[0]=1.0, err=0

solution X[1]=-1.0, err=0 -- expected

now perturb Y[1] from .067 to .066, .001 change, and run again

flt-pt 0.835000*X[0] + 0.667000*X[1] = 0.168000 Y[0]

flt-pt 0.333000*X[0] + 0.266000*X[1] = 0.066000 Y[1] -- only change

solution X[0]=-666.0, err=0

solution X[1]=834.0, err=2.84217e-14 -- X[1] WOW!

notice computed errors are still small,

yet, the values of X[0] and X[1] are very different.

Typical problem with people and computers:

Often called garbage in, garbage out!

test_inverse_small.c source code

test_inverse_small_c.out output

We will see later, when the norm of the coefficients of

simultaneous equations is inverted, and the norm of the inverse

is different by many orders of magnitude, the system will be

called ill-conditioned.

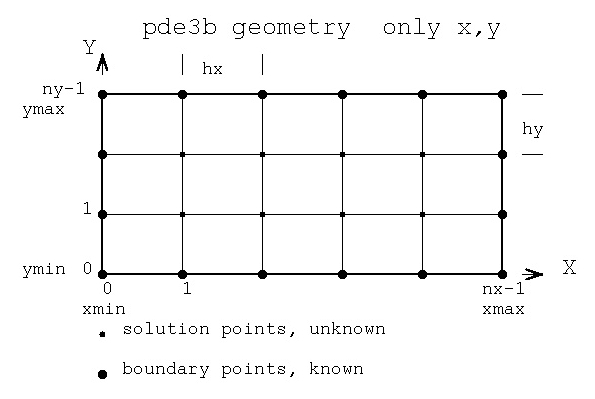

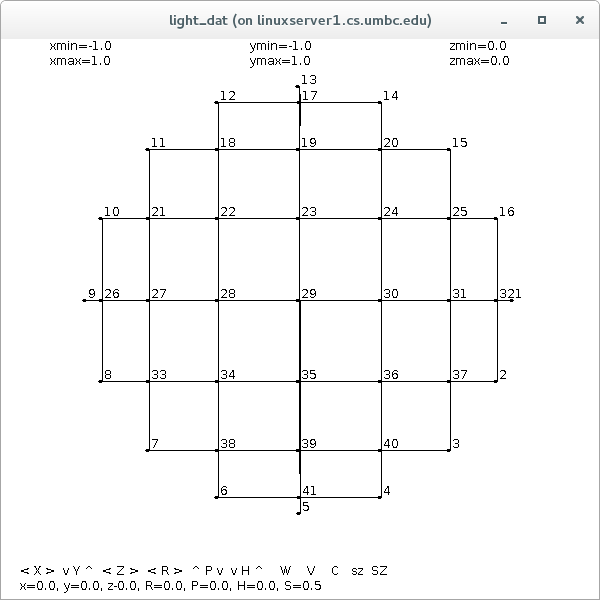

solving for function values on a grid

Solving for unknown function values on a given x,y grid:

test_eqn1 2*U(x,y) = C(x,y)

test_eqn2 2*U(x,y) + 3*V(x,y) = C(x,y)

3*U(x,y) + 3*V(x,y) = D(x,y)

test eqn3 2*U(x,y) + 3*V(x,y) + 4*W(x,y) = C(x,y)

4*U(x,y) + 2*V(x,y) + 3*W(x,y) = D(x,y)

3*U(x,y) + 4*V(x,y) + 2*W(x,y) = E(x,y)

test_eqn1.java source code

test_eqn1_java.out source code

test_eqn2.java source code

test_eqn2_java.out source code

test_eqn3.java source code

test_eqn3_java.out source code

test_eqn33.java source code

test_eqn33_java.out source code

test_eqn1.py3 source code

test_eqn1_py3.out source code

test_eqn2.py3 source code

test_eqn2_py3.out source code

test_eqn3.py3 source code

test_eqn3_py3.out source code

test_eqn33.py3 source code

test_eqn33_py3.out source code

Later lecture covers non linear equations.

Working code in many languages

The instructor understands that some students have a strong

prejudice in favor of, or against, some programming languages.

After about 50 years of programming in about 50 programming

languages, the instructor finds that the difference between

programming languages is mostly syntactic sugar. Yet, since

students may be able to read some programming languages

easier than others, these examples are presented in "C",

Fortran 95, Java and Ada 95. The intent was to do a quick

translation, keeping most of the source code the same,

for the different languages. Style was not a consideration.

Some rearranging of the order was used when convenient.

The numerical results are almost exactly the same.

The same code has been programmed in "C", Fortran 95, Java and Ada 95 etc.

as shown below with file types .c, .f90, .java and .adb .rb .py .scala:

Note the .h for C offers the user choices.

simeq.c "C" language source code

simeq.h "C" header file

time_simeq.c "C" language source code

time_simeq.out output

simeq.f90 Fortran 95 source code

time_simeq.f90 Fortran 95 source code

time_simeq_f90_cs.out output

simeq.java Java source code

time_simeq.java Java source code

time_simeq_java.out output

simeq.cpp C++ language source code

simeq.hpp C++ header file

test_simeq.cpp C++ language source code

test_simeq_cpp.out output

simeq.adb Ada 95 source code

real_arrays.ads Ada 95 source code

real_arrays.adb Ada 95 source code

Simeq.rb Ruby class Simeq

test_simeq.rb Ruby source code

test_simeq_rb.out Ruby output

simeq in matrix.pm Perl package

test_matrix.pl Perl source code test

test_matrix_pl.out Perl output

simeq.m MATLAB source code

simeq_m.out MATLAB output

With Python and downloading numpy using linalg

test_solve.py Python source code

test_solve_py.out Python output

With Python using numpy and array

test_simeq.py Python source code

test_simeq_py.out Python output

test_simeq.py3 Python source code

test_simeq_py3.out Python output

test_matmul.py3 Python source code

test_matmul_py3.out Python output

With Scala using Random, very small errors

Simeq.scala Scala source code

TestSimeq.scala Scala source code

TestSimeq_scala.out Scala output

Many methods have been devised for solving simultaneous equations.

A sample is LU decomposition and Crout method.

LU decomposition with pivoting:

lup_decomp.f90 source code

lup_decomp_f90.out output

simeq_lup.h source code

simeq_lup.c source code

time_simeq_lup.c source code

time_simeq_lup_cs.out output

simeq_lup.java source code

time_simeq_lup.java source code

time_simeq_lup_java.out output

Crout method without pivoting, uses back substitution:

crout.adb source code

crout_ada.out output

crout.f90 source code

time_crout.f90 source code

time_crout_f90.out output

crout.h source code

crout.c source code

time_crout.c source code

time_crout.c source code

time_crout_c.out output

crout1.h source code

crout1.c source code

time_crout1.c source code

time_crout1_c.out output

crout.java source code

test_crout.java accuracy test source

test_crout_java.out output

crout1.java source code

time_crout.java accuracy test source

time_crout_java.out output

Back substitution:

The difference from plain Gauss-Jordan with pivoting

and back substitution is less inner reduction,

then use back substitution. Solve A * X = Y

The initial reduction creates a matrix of the form:

| 1 a12 a13 a14 y1 |

| 0 1 a23 a24 y2 |

| 0 0 1 a34 y3 |

| 0 0 0 1 y4 |

Thus we have x4 = y4

Back substituting x3 = y3 - x4*a34

Back substituting x2 = y2 - x3*a23 - x4*a24

Back substituting x1 = y1 - x2*a12 - x3*a13 - x4*a14

simeq_back.h source code

simeq_back.c source code

test_simeq_back.c source code

test_simeq_back.out output

many methods in simeq.pdf

Tailoring

Throughout this course you will see variations of this source code,

tailored for specific applications. The packaging will change with

"C" files having code inside with 'static void', Fortran 95 code using

modules and, Java and Ada code using packages. Python etc. code.

It should be noted that the algorithm is exactly the same for sets

of equations with complex values. The code change is simply

changing the type in Fortran 95, Java, and Ada 95. The Java class

'Complex' is on my WEB page. The "C" code requires a lot of changes.

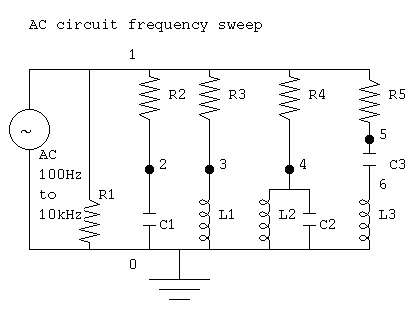

I wrote the first version of this program for the IBM 650 in assembly

language as an electrical engineering student. The program was for

complex values and solved for node voltages in alternating current

circuits. A quick and dirty version is ac_circuit.java

that needs a number of java packages:

Matrix.java

Complex.java

ComplexMatrix.java

ac_analysis.java an improved version

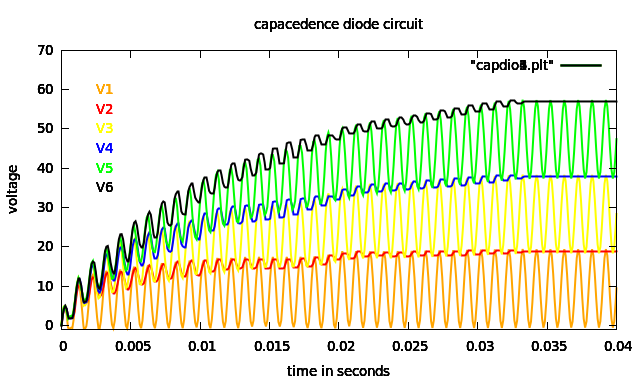

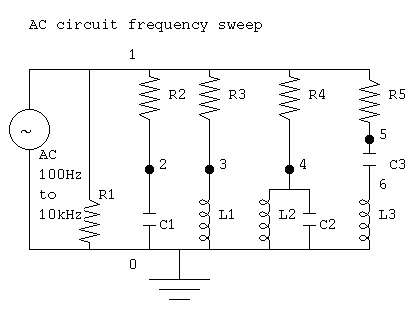

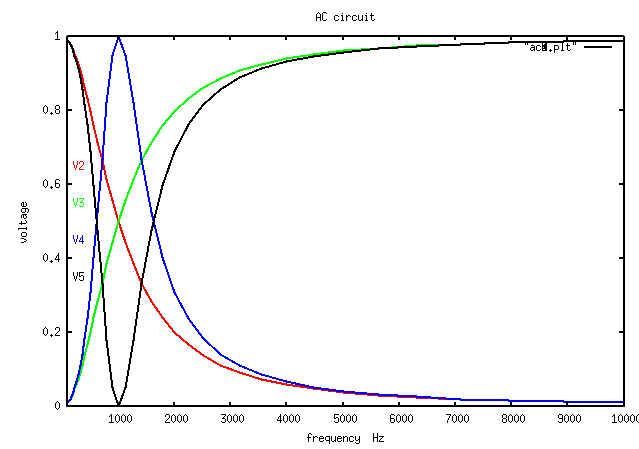

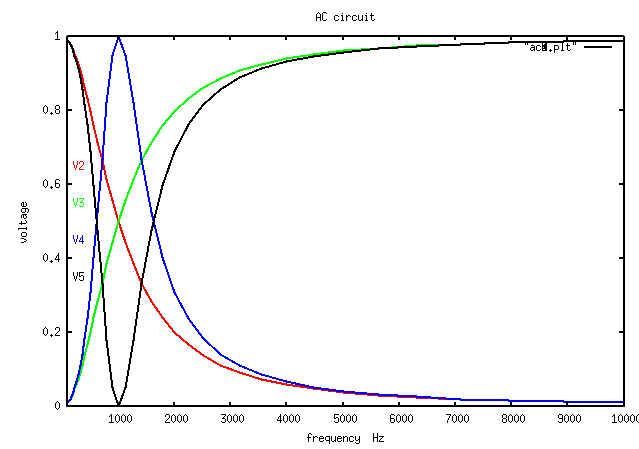

Then an even more complete version that plots up to eight node voltages.

ac_plot.java simple Java plot added

ac_plot.dat capacitor, inductor and tuned circuits

ac_plot.java simple Java plot added

ac_plot.dat capacitor, inductor and tuned circuits

Output of java myjava.ac_plot.java < ac_plot.dat

There are systems of equations with no solutions:

eq1: 1*x + 0*y + 0*z = 2

eq2: 2*x + 0*y + 0*z = 2

eq3: 4*x - 2*y + 3*z = 5

Some may ask: What about solving |A| * |X| = |Y| for X, given A and Y

using |X| = |Y| * |A|^-1 (inverse of matrix A) ?

The reason this is not a good numerical solution is that slightly

more total error will be in the inverse |A|^-1 and then a little

more error will come from the vector times matrix multiplication.

Output of java myjava.ac_plot.java < ac_plot.dat

There are systems of equations with no solutions:

eq1: 1*x + 0*y + 0*z = 2

eq2: 2*x + 0*y + 0*z = 2

eq3: 4*x - 2*y + 3*z = 5

Some may ask: What about solving |A| * |X| = |Y| for X, given A and Y

using |X| = |Y| * |A|^-1 (inverse of matrix A) ?

The reason this is not a good numerical solution is that slightly

more total error will be in the inverse |A|^-1 and then a little

more error will come from the vector times matrix multiplication.

Matrix Inverse

The code for matrix inverse is very similar to the code for solving

simultaneous equations. Added effort is needed to find the

maximum pivot element and there must be both row and column

interchanges. An example that shows the increasing error with the

increasing size of the matrix, on a difficult matrix, is shown below.

Note that results of a 16 by 16 matrix using 64-bit IEEE Floating

point arithmetic that is ill conditioned may become useless.

inverse.f90

test_inverse.f90

test_inverse_f90.out

Extracted form test_inverse_f90.out is

initializing big matrix, n= 2 , n*n= 4

sum of error= 1.84748050191530E-16 , avg error= 4.61870125478825E-17

initializing big matrix, n= 4 , n*n= 16

sum of error= 2.19971263426544E-12 , avg error= 1.37482039641590E-13

initializing big matrix, n= 8 , n*n= 64

sum of error= 0.00000604139304982709 , avg error= 9.43967664035483E-8

initializing big matrix, n= 16 , n*n= 256

sum of error= 83.9630735209012 , avg error= 0.327980755941020

initializing big matrix, n= 32 , n*n= 1024

sum of error= 4079.56590417946 , avg error= 3.98395107830025

initializing big matrix, n= 64 , n*n= 4096

sum of error= 53735.8765782488 , avg error= 13.1191104927365

initializing big matrix, n= 128 , n*n= 16384

sum of error= 85784.2643647822 , avg error= 5.23585597929579

initializing big matrix, n= 256 , n*n= 65536

sum of error= 1097119.16168229 , avg error= 16.7407098645368

initializing big matrix, n= 512 , n*n= 262144

sum of error= 1.36281435213093E+7 , avg error= 51.9872418262837

initializing big matrix, n= 1024 , n*n= 1048576

sum of error= 1.24247404738082E+9 , avg error= 1184.91558778841

Very similar results from the C version:

inverse.c

test_inverse.c

test_inverse.out

inverse.py

test_inverse.py

test_inverse_py.out

test_inv.py numpy version

test_inv_py.out

Extracted form test_inverse.out is

initializing big matrix, n=1024, n*n=1048576

sum of error=1.24247e+09, avg error=1184.92

Inverse.rb Ruby class Inverse

test_inverse.rb Ruby test

test_inverse_rb.out Ruby output

Multiple Precision

A case study using 32-bit IEEE floating point and 50, 100, and 200

digit multiple precision are shown in Lecture 3a

Reducing the number of equation when some values are known

Later, when we study partial differential equations, we will need

cs455_l3c.shtml a process for reducing the number

of equations when we know the value of one or more elements

of the unknown vector.

Nonlinear equations and systems of nonlinear equations

are covered in Lecture 16

Really difficult, are systems of nonlinear equations that need

a solution. The following examples have many comments describing

one or more possible methods of solution:

(Later versions have fewer bugs)

simeq_newton.adb with debug printout

simeq_newton_ada.out

simeq_newton2.adb

simeq_newton2_ada.out

simeq_newton5.adb

test_simeq_newton5.adb

test_simeq_newton5_ada.out

real_arrays.ads used by above

real_arrays.adb used by above

inverse.adb used by above

equation_nl.adb with debug printout

equation_nl_ada.out

simeq_newton.f90 with debug printout

simeq_newton_f90.out

simeq_newton2.f90

simeq_newton2_f90.out

inverse.f90 used by above

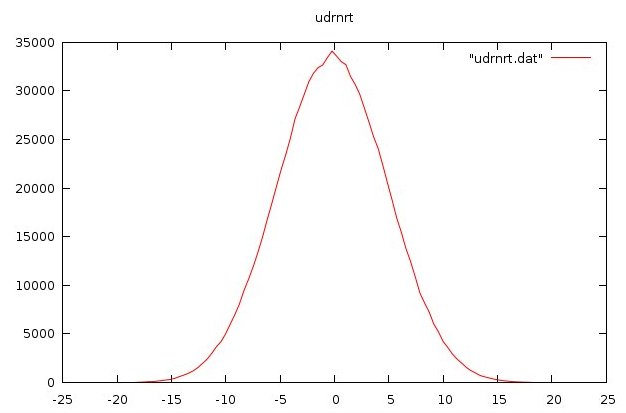

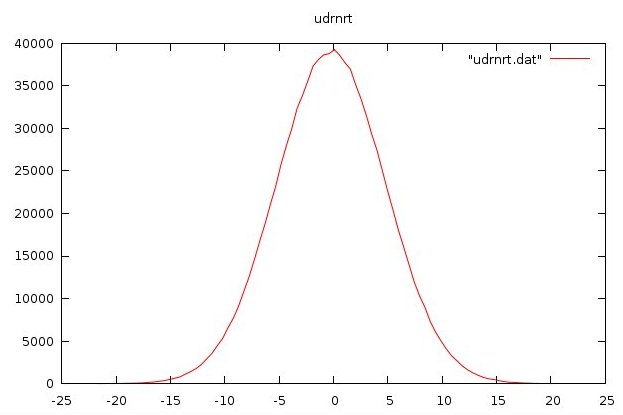

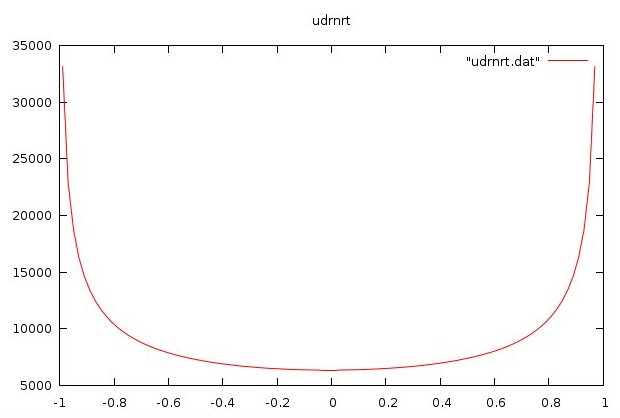

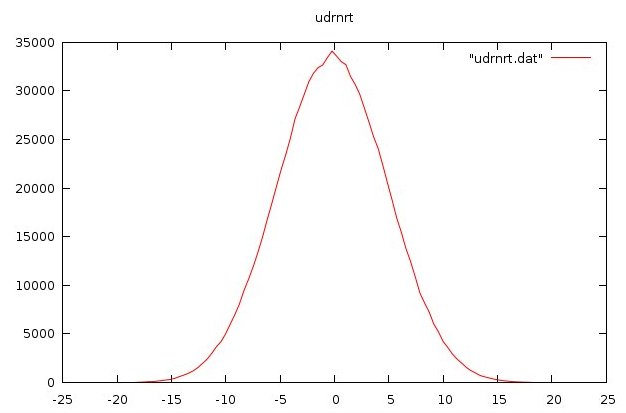

udrnrt.f90 used by above

equation_nl.f90 with debug printout

equation_nl_f90.out

simeq_newton.c with debug printout

simeq_newton.out

simeq_newton2.c

simeq_newton2.out

simeq_newton3.h

simeq_newton3.c

test_simeq_newton3.c

test_simeq_newton3_c.out

simeq_newton4.h

simeq_newton4.c

test_simeq_newton4.c

test_simeq_newton4_c.out

invert.h used by above

invert.c used by above

udrnrt.h used by above

udrnrt.c used by above

equation_nl.c with debug printout

equation_nl_c.out

simeq_newton.java with debug printout

simeq_newton2.java

test_simeq_newton2.java

test_simeq_newton2_java.out

simeq_newton3.java

test_simeq_newton3.java

test_simeq_newton3.java

simeq_newton5.java

test_simeq_newton5.java

test_simeq_newton5.java

test_pde_nl.java

test_pde_nl_java.out

test_46_nl.java simple demo

test_46_nl_java.out simple demo

simeq_newton5.py3

test_pde_nl.py3 simple test

test_pde_nl_py3.out simple test

simeq_newton5.java

test_simeq_newton5.java

test_simeq_newton5_java.out

invert.java used by above

equation_nl.java with debug printout

equation_nl_java.out

inverse.java used by above

Accuracy does degrade as the relative size of solution and

matrix elements gets large. Expect similar results with any method.

This program tests 0, 1, 2, 5, ... 1E9, 2E9, 5E9, 1E10 for various n.

simeq_accuracy.c big range test

simeq_accuracy_c.out big range results

Accuracy of all methods degrades with size of matrix on random data.

Not much error for 1024 by 1024 matrix.

Errors increase at 2048 by 2048 and 4096 by 4096.

Over 10K by 10K starts to need multiple precision floating point,

see next lectures.

For your information, modern manufacturing of automobiles:

www.youtube.com/embed/8_lfxPI5ObM?rel=0

The code for matrix inverse is very similar to the code for solving

simultaneous equations. Added effort is needed to find the

maximum pivot element and there must be both row and column

interchanges. An example that shows the increasing error with the

increasing size of the matrix, on a difficult matrix, is shown below.

Note that results of a 16 by 16 matrix using 64-bit IEEE Floating

point arithmetic that is ill conditioned may become useless.

Given a matrix A, computing the inverse AI, then checking that

|A| * |AI| = |II| is approximately the identity matrix |I|

is useful and possibly very important.

The check used for this case study was to sum the absolute values

of |II| - |I| and print the sum and also print the sum divided

by n*n, the number of elements in the matrix.

This case study uses the classic, difficult to invert,

variation of the Hilbert Matrix, in floating point format,

shown here as rational numbers:

| 1/2 1/3 1/4 1/5 | using i for the column index and

| 1/3 1/4 1/5 1/6 | j for the row index,

| 1/4 1/5 1/6 1/7 | the (i,j) element is 1/(i+j)

| 1/5 1/6 1/7 1/8 | as a floating point number

A few solutions are (A followed by AI):

| 1/2 | n=1 | 2 |

| 1/2 1/3 | n=2 | 18 -24 |

| 1/3 1/4 | | -24 36 |

| 1/2 1/3 1/4 1/5 | n=4 | 200 -1200 2100 -1120 |

| 1/3 1/4 1/5 1/6 | | -1200 8100 -15120 8400 |

| 1/4 1/5 1/6 1/7 | | 2100 -15120 29400 -16800 |

| 1/5 1/6 1/7 1/8 | | -1120 8400 -16800 9800 |

| 1/2 1/3 1/4 1/5 1/6 1/7 1/8 1/9 |

| 1/3 1/4 1/5 1/6 1/7 1/8 1/9 1/10 |

| 1/4 1/5 1/6 1/7 1/8 1/9 1/10 1/11 |

| 1/5 1/6 1/7 1/8 1/9 1/10 1/11 1/12 |

| 1/6 1/7 1/8 1/9 1/10 1/11 1/12 1/13 |

| 1/7 1/8 1/9 1/10 1/11 1/12 1/13 1/14 |

| 1/8 1/9 1/10 1/11 1/12 1/13 1/14 1/15 |

| 1/9 1/10 1/11 1/12 1/13 1/14 1/15 1/16 |

| 2592 -60480 498960 -1995840 4324320 -5189184 3243240 -823680 |

| -60480 1587600 -13970880 58212000 -129729600 158918760 -100900800 25945920 |

| 498960 -13970880 128066400 -548856000 1248647400 -1553872320 998917920 -259459200 |

| -1995840 58212000 -548856000 2401245000 -5549544000 6992425440 -454053600 118918800 |

| 4324320 -12972960 1248647400 5549544000 12985932960 -16527551040 10821610800 -2854051200 |

| -5189184 158918760 -1553872320 6992425440 -16527551040 21210357168 -13984850880 3710266560 |

| 3243240 -100900800 998917920 -4540536000 10821610800 -13984850880 9275666400 -2473511040 |

| -823679 25945920 -259459200 1189188000 -2854051200 3710266560 -2473511040 662547600 |

Note the exponential growth of the size of numbers in the inverse.

Now see increasing errors with size of simultaneous equations:

simeq2.c

simeq2.h

simeq2p.h

test_simeq_hilbert.c

test_simeq_hilbert_c.out

Much better accuracy with random matrices

test_simeq_random.c

test_simeq_random_c.out

One measure of the difficulty of inverting a matrix is the size

of the largest diagonal during each step of the inversion process.

The magnitude of the largest element of the inverse will be

approximately the order of the reciprocal of the smallest

of the largest diagonal.

The smallest of the largest diagonal for a few cases are:

n = 2 .277E-1

n = 4 .340E-4

n = 8 .770E-10

n = 16 .560E-22

n = 32 .358E-46

n = 64 .645E-95

n = 128 .178E-192

n = 256 failed with 200 digit multiple precision

The average error, as computed for various precisions, is

7-digit 15-digit 166-bit 332-bit 664-bit MatLab

IEEE IEEE mpf mpf mpf IEEE

n 32-bit 64-bit 50-digit 100-digit 200-digit 64-bit

2 1.29E-7 4.61E-17 1.99E-59 1.36E-107 6.37E-204 0.00

4 7.84E-5 1.37E-13 1.91E-58 3.05E-106 6.12E-203 2.39E-13

8 3.83E0 9.43E-8 4.44E-50 1.81E-98 3.24E-194 7.31E-7

16 1.23E1 3.27E-1 8.13E-39 2.77E-87 8.56E-183 2.37E2

32 5.20E0 3.98E0 4.72E-14 4.45E-62 1.19E-158 1.46E6

64 6.13E0 1.31E1 1.01E8 2.20E-13 1.20E-109 2.55E8

128 8.39E0 5.23E0 7.79E7 3.46E8 3.83E-12 2.27E10

256 1.14E3 1.67E1 1.76E8 8.51E7 1.05E8 8.38E11

The errors bigger than E-5 are very deceiving

in the first two columns. They indicate failure to invert.

MatLab does indicate failure for n=16 and larger, other codes had

the matrix singular error suppressed.

A reasonable conclusion, for this matrix, is that an n by n matrix

needs more than n bits of floating point precision in order to

get a reliable inverse. Twice the number of bits as n to get

good results.

Before you panic, notice the results for the same test in MatLab

for this hard to invert matrix verses a pseudo random matrix.

n=2, avgerr=0 , rnderr=4.16334e-017

n=4, avgerr=2.39808e-013 , rnderr=1.04246e-016

n=8, avgerr=7.31534e-007 , rnderr=7.86263e-016

n=16, avgerr=237.479 , rnderr=3.57e-016

n=32, avgerr=1.46377e+006 , rnderr=7.5899e-016

n=64, avgerr=2.55034e+008 , rnderr=2.34115e-015

n=128, avgerr=2.2773e+010 , rnderr=6.70795e-015

n=256, avgerr=8.38903e+011 , rnderr=1.9137e-014

Well conditioned matrices may be inverted for n in the range

10,000 to 20,000 with IEEE 64-bit floating point.

Many large matrices are sparse, having many zero elements,

and may have only bands of non zero elements. Unfortunately

the inverse of sparse matrices are not sparse, thus

sparse matrix storage techniques may actually be slower.

The MatLab code is, of course, the shortest (stripped here):

n=1;

for r=1:8

n=2*n;

A=zeros(n,n);

B=rand(n,n);

for i=1:n

for j=1:n

A(i,j)=1.0/(i+j);

end

end

avgerr=sum(sum(abs(A*inv(A)-eye(n,n))))/(n*n)

rnderr=sum(sum(abs(B*inv(B)-eye(n,n))))/(n*n)

end

The detailed code and results are:

invrnd.m actual MatLab code

invrnd_m.out file output

invrnd_mm.out partial screen output

inverse.f90 basic inverse

test_inverse.f90 test program

test_inverse_f90.out output

inverse.py basic inverse

test_inverse.fpy test program

test_inverse_py.out output

Simeq.scala has inverse

TestSimeq.scala test has inverse

TestSimeq_scala.out output has inverse

Extracted form test_inverse_f90.out is

initializing big matrix, n= 2 , n*n= 4

sum of error= 1.84748050191530E-16 , avg error= 4.61870125478825E-17

initializing big matrix, n= 4 , n*n= 16

sum of error= 2.19971263426544E-12 , avg error= 1.37482039641590E-13

initializing big matrix, n= 8 , n*n= 64

sum of error= 0.00000604139304982709 , avg error= 9.43967664035483E-8

initializing big matrix, n= 16 , n*n= 256

sum of error= 83.9630735209012 , avg error= 0.327980755941020

initializing big matrix, n= 32 , n*n= 1024

sum of error= 4079.56590417946 , avg error= 3.98395107830025

initializing big matrix, n= 64 , n*n= 4096

sum of error= 53735.8765782488 , avg error= 13.1191104927365

initializing big matrix, n= 128 , n*n= 16384

sum of error= 85784.2643647822 , avg error= 5.23585597929579

initializing big matrix, n= 256 , n*n= 65536

sum of error= 1097119.16168229 , avg error= 16.7407098645368

initializing big matrix, n= 512 , n*n= 262144

sum of error= 1.36281435213093E+7 , avg error= 51.9872418262837

initializing big matrix, n= 1024 , n*n= 1048576

sum of error= 1.24247404738082E+9 , avg error= 1184.91558778841

Very similar results from the C version:

inverse.c

inverse.h

test_inverse.c

test_inverse.out

Similar results from float rather than double in the C version:

inversef.c

inversef.h

test_inversef.c

test_inversef.out

Exploring results from 50 digit multiple precision arithmetic version:

mpf_inverse.c

mpf_inverse.h

test_mpf_inverse.c

test_mpf50_inverse.out

Changing 'digits' to 100 digit multiple precision arithmetic version:

test_mpf100_inverse.out

Changing 'digits' to 200 digit multiple precision arithmetic version:

test_mpf200_inverse.out

The 200 digit run with more output:

test_mpf_inverse.out

Java version, double

inverse.java

test_inverse.java

test_inverse_java.out

Java version, BigDecimal 300 bits or more

Big_inverse.java

test_Big_inverse.java

test_Big_inverse_java.out

Big_simeq.java

test_Big_simeq.java

test_Big_simeq_java.out

time_Big_simeq.java

time_Big_simeq_java.out

Big_nuderiv.java

test_Big_nuderiv

test_Big_nuderiv_java.out

Ada version, double

hilbert_inverse.adb

hilbert_inverse_ada.out

Ada version 50, 100 and 200 digits

digits_hilbert_inverse.adb

digits_hilbert_inverse_ada_50.out

digits_hilbert_inverse_ada_100.out

digits_hilbert_inverse_ada_200.out

Basic gcc stack storage limitation prevented getting all output.

Even happens on 64-bit computer with 8GB or RAM.

We have a number of clusters at UMBC, I happen to have used

our Bluegrit, Bluewave, Tara and Maya and Taki clusters and the

MPI examples are from these multi processor machines.

For multi core machines, there are Java Threads and

"C" pthreads and Ada tasks. I have a 12 core desktop

and Intel has a many core computer.

Examples are presented below.

At the end are a few multi core benchmarks for you to run.

We can use our biggest super computer on campus, Taki.

NOTE:

MPI is running on a distributed memory system.

Each process may be considered to have local memory and

in general, there is no common shared memory.

Multicore machines are described here as shared memory systems.

All memory is available to all threads and tasks.

Also know technically as a single address space.

Some parallel programming techniques apply to both

distributes and shared memory, some techniques do not

apply to both memory systems.

MPI

MPI stands for Message Passing Interface and is

available on many multiprocessors. MPI may be installed

as the open source version MPICH. There are other

software libraries and languages for multiprocessors,

yet, this lecture only covers MPI.

The WEB page here at UMBC is

www.csee.umbc.edu/help/MPI

Programming in MPI is the SPMD Single Program Multiple Data style of

programming. One program runs on all CPU's in the multiprocessor.

Each CPU has a number, called a rank in MPI, called myid in my code and

called node or node number in comments.

"if-then-else" code may be based on node number is used to have

unique computation on specific nodes. There is a master node,

typically the node with rank zero in MPI. The node number may

also be used in index expressions and other computation. Many

MPI programs use the master as a number cruncher along with the

other nodes in addition to the master serving as overall control

and synchronization.

Examples below are given first in "C" and then a few in Fortran.

Other languages may interface with the MPI library.

These just show a simple MPI use, these are combined later for

solving simultaneous equations on a multiprocessor.

Just check that a message can be sent and received from each

node, processor, CPU, etc. numbered as "rank".

roll_call.c

roll_call.out

Just scatter unique data from the "master" to all nodes.

Then gather the unique results from all nodes.

scat.c

scat.out

Here is the Makefile I used.

Makefile for C on Bluegrit cluster

Repeating the "roll_call" just changing the language to Fortran.

roll_call.F

roll_call_F.out

Repeating scatter/gather just changing the language to Fortran.

scat.F

scat_F.out

The Fortran version of the Makefile with additional files I used.

Makefile for Fortran on Bluegrit cluster

my_mpif.h only needed if not on cluster

nodes only needed if default machinefile not used

MPI Simultaneous Equations

Now, the purpose of this lecture, solve huge simultaneous

equations on a highly parallel multiprocessor.

Well, start small when programming a multiprocessor and print out

every step to be sure the indexing and communication is exactly

correct.

This is hard to read, yet it was a necessary step.

psimeq_debug.c

psimeq_debug.out

Then, some clean up and removing or commenting out most debug print:

psimeq1.c

psimeq1.out

The input data was created so that the exact answers were 1, 2, 3 ...

It is interesting to note: because the data in double precision floating

point was from the set of integers, the answers are exact for

8192 equations in 8192 unknowns.

psimeq1.out8192

|A| * |X| = |Y| given matrix |A| and vector |Y| find vector |X|

| 1 2 3 4 5 | |5| | 35| for 5 equations in 5 unknowns

| 2 2 3 4 5 | |4| | 40| the solved problem is this

| 3 3 3 4 5 |*|3|=| 49|

| 4 4 4 4 5 | |2| | 61|

| 5 5 5 5 5 | |1| | 75|

A series of timing runs were made, changing the number of equations.

The results were expected to increase in time as order n^3 over the

number of processors being used. Reasonable agreement was measured.

Using 16 processors:

Number of Time computing Cube root of

equations solution (sec) 16 times Time (should approximately double

1024 3.7 3.9 as number of equations double)

2048 17.2 6.5

4096 83.5 11.0

8192 471.9 19.6

More work may be performed to minimize the amount of

data send and received in "rbuf".

C pthreads Simultaneous Equations

Basic primitive barrier in C pthreads

run_thread.c

run_thread_c.out

Simultaneous equation solution using AMD 12 core

tsimeq.h

tsimeq.c

time_tsimeqb.c

time_tsimeqb.out

More examples of pthreads with debug printout

thread_loop.c with comments

thread_loop_c.out

Java Simultaneous Equations

Java threads are demonstrated by the following example.

RunThread.java

When run, there are four windows, each showing a dot as that thread runs.

RunThread.out

Note that CPU and Wall time are measured and printed. (on some Java versions)

The basic structure of threads needed for my code:

(I still have not figured out why I need the dumb "sleep" in 2)

Barrier2.java

Barrier2_java.out

(OK, several versions later)

CyclicBarrier4.java

CyclicBarrier4_java.out

Simultaneous equation solution with multiple processors in

a shared memory configuration is accomplished with:

psimeq.java

test_psimeq.java test driver

test_psimeq_java.out output

psimeq_dbg.java with lots of debug print

test_simeq_dbg.java with debug

test_psimeq_dbg_java.out output with debug

A better version making better use of threads and cyclic barrier:

simeq_thread.java

test_simeq_thread.java test driver

test_simeq_thread_java.out output

And, test results for "diff" the non threaded version

test_simeq_java.out output

Some crude timing tests:

time_simeq.java test driver

time_pimeq_java.out output

time_psimeq.java test driver

time_psimeq_java.out output yuk!

time_simeq_thread.java test driver

time_pimeq_thread_java.out output quad core

Ada Simultaneous Equations

Simultaneous equation solution with multiple processors in

a shared memory configuration is accomplished with:

psimeq.adb

test_psimeq.adb test driver

test_psimeq_ada.out output

psimeq_dbg.adb with lots of debug print

test_simeq_dbg.adb with debug

test_psimeq_dbg_ada.out output with debug

time_psimeq.adb test driver

time_psimeq_ada.out outputs

Then using Barriers

bsimeq_2.adb

time_bsimeq.adb test driver

time_bsimeq_ada.out outputs

Another tutorial type example

task_loop.adb with comments

task_loop_ada.out

Python Simultaneous Equations

The basic structure of threads needed for my code (python2):

barrier2.py

barrier2_py.out

Multiprocessor Benchmarks

"C" pthreads are demonstrated by an example that measures the

efficiency of two cores, four cores or eight cores.

time_mp2.c

time_mp2.out

The ratio of Wall time to CPU time indicates degree of parallelism.

time_mp4.c 4 core shared memory

time_mp4.out

time_mp8.c 8 core shared memory

time_mp8_c.out

My AMD 12-core desktop computer July 2010

time_mp12.c 12 core shared memory

time_mp12_c.out

time_mp4.java 4 core shared memory

time_mp4_java.out

time_mp8.java 8 core shared memory

time_mp8_java.out

pthreads using mutex.c and mutex.h

mutex.c encapsulate pthreads

mutex. encapsulate pthreads

thread4m.c main plus 4 threads

thread4m_c.out output

thread11m.c main plus 11 threads

thread11m_c.out output

for comparison, using just basic pthreads

thread4.c main plus 4 threads

thread4_c.out output

Comparison using Java threads, C pthreads on big matrix multiply

c[1000][1000] = a[1000][1000] * b[1000][1000]

for comparison, one thread time and four thread time:

Java example

matmul_thread4.java source code

matmul_thread4_java.out output

matmul_thread1.java source code no threads

matmul_thread1_java.out output

C pthread example

matmul_pthread4.c source code

matmul_pthread4.out output

matmul.c source code no threads

matmul_c.out output

Python3 threading and multitasking

thread3.py3 source code

thread3_py3.out output

multi.py3 source code

multi_py3.out output

C OpenMP example 1000 caused segfault, cut to 510

omp_matmul.c source code

omp_matmul.out output

Given numeric data points, find an equation that approximates the data

with a least square fit. This is one of many techniques for getting

an analytical approximation to numeric data.

The problem is stated as follows :

Given measured data for values of Y based on values of X1,X2 and X3. e.g.

Y_actual X1 X2 X3 observation, i

-------- ----- ----- -----

32.5 1.0 2.5 3.7 1

7.2 2.0 2.5 3.6 2

6.9 3.0 2.7 3.5 3

22.4 2.2 2.1 3.1 4

10.4 1.5 2.0 2.6 5

11.3 1.6 2.0 3.1 6

Find a, b and c such that Y_approximate = a * X1 + b * X2 + c * X3

and such that the sum of (Y_actual - Y_approximate) squared is minimized.

(We are minimizing RMS error.)

The method for determining the coefficients a, b and c follows directly

form the problem definition and mathematical analysis given below.

Set up and solve the system of linear equations:

(Each SUM is for i=1 thru 6 per table above, note symmetry)

| SUM(X1*X1) SUM(X1*X2) SUM(X1*X3) | | a | | SUM(X1*Y) |

| SUM(X2*X1) SUM(X2*X2) SUM(X2*X3) | x | b | = | SUM(X2*Y) |

| SUM(X3*X1) SUM(X3*X2) SUM(X3*X3) | | c | | SUM(X3*Y) |

Easy to program, not good data:

lsfit_lect.c

lsfit_lect_c.out

lsfit_lect.java

lsfit_lect_java.out

lsfit_lect.py

lsfit_lect_py.out

lsfit_lect.f90

lsfit_lect_f90.out

lsfit_lect.m similar to c

lsfit_lect_m.out

test_lsfit.py3 Python3 two methods

test_lsfit_py3.out output

test_lsfit2.py3 Python3 two methods

test_lsfit2_py3.out output better

Now, suppose you wanted a constant term to make the fit:

Y_approximate = Y0 + a * X1 + b * X2 + c * X3

Then the linear equations would be:

| SUM( 1* 1) SUM( 1*X1) SUM( 1*X2) SUM( 1*X3) | | Y0 | | SUM( 1*Y) |

| SUM(X1* 1) SUM(X1*X1) SUM(X1*X2) SUM(X1*X3) | | a | | SUM(X1*Y) |

| SUM(X2* 1) SUM(X2*X1) SUM(X2*X2) SUM(X2*X3) | x | b | = | SUM(X2*Y) |

| SUM(X3* 1) SUM(X3*X1) SUM(X3*X2) SUM(X3*X3) | | c | | SUM(X3*Y) |

Note the symmetry! Easy to program.

Note the simultaneous equations, from Lecture 3: |A| x |X| = |Y|

|A| and |Y| easily computable, solve for |X| to get Y0, a, b and c

We now have a simple equation to compute Y approximately from a reasonable

range of X1, X2, and X3.

Y is called the dependent variable and X1 .. Xn the independent variables.

The procedures below implement a few special cases and the general case.

The number of independent variables can vary, e.g. 2D, 3D, etc. .

The approximation equation may use powers of the independent variables

The user may create additional independent variables e.g. X2 = SIN(X1)

with the restriction that the independent variables are linearly

independent. e.g. Xi not equal p Xj + q for all i,j,p,q

Mathematical derivation

The mathematical derivation of the least square fit is as follows :

Given data for the independent variable Y in terms of the dependent

variables S,T,U and V consider that there exists a function F

such that Y = F(S,T,U,V)

The problem is to find coefficients a,b,c and d such that

Y_approximate = a * S + b * T + c * U + d * V

and such that the sum of ( Y - Y_approximate ) squared is minimized.

Note: a, b, c, d are scalars. S, T, U, V, Y, Y_approximate are vectors.

To find the minimum of SUM((Y - Y_approximate)^2)

the derivatives must be taken with respect to a,b,c and d and

all must equal zero simultaneously. The steps follow :

SUM((Y - Y_approximate)^2) = SUM((Y - a*S - b*T - c*U - d*V)^2)

d/da = -2 * S * SUM( Y - a*S - b*T - c*U - d*V )

d/db = -2 * T * SUM( Y - a*S - b*T - c*U - d*V )

d/dc = -2 * U * SUM( Y - a*S - b*T - c*U - d*V )

d/dd = -2 * V * SUM( Y - a*S - b*T - c*U - d*V )

Setting each of the above equal to zero (derivative minimum at zero)

and putting constant term on left, the -2 is factored out,

the independent variable is moved inside the summation

SUM( a*S*S + b*S*T + c*S*U + d*S*V = S*Y )

SUM( a*T*S + b*T*T + c*T*U + d*T*V = T*Y )

SUM( a*U*S + b*U*T + c*U*U + d*U*V = U*Y )

SUM( a*V*S + b*V*T + c*V*U + d*V*V = V*Y )

Distributing the SUM inside yields

a * SUM(S*S) + b * SUM(S*T) + c * SUM(S*U) + d * SUM(S*V) = SUM(S*Y)

a * SUM(T*S) + b * SUM(T*T) + c * SUM(T*U) + d * SUM(T*V) = SUM(T*Y)

a * SUM(U*S) + b * SUM(U*T) + c * SUM(U*U) + d * SUM(U*V) = SUM(U*Y)

a * SUM(V*S) + b * SUM(V*T) + c * SUM(V*U) + d * SUM(V*V) = SUM(V*Y)

To find the coefficients a,b,c and d solve the linear system of equations

| SUM(S*S) SUM(S*T) SUM(S*U) SUM(S*V) | | a | | SUM(S*Y) |

| SUM(T*S) SUM(T*T) SUM(T*U) SUM(T*V) | x | b | = | SUM(T*Y) |

| SUM(U*S) SUM(U*T) SUM(U*U) SUM(U*V) | | c | | SUM(U*Y) |

| SUM(V*S) SUM(V*T) SUM(V*U) SUM(V*V) | | d | | SUM(V*Y) |

Some observations :

S,T,U and V must be linearly independent.

There must be more data sets (Y, S, T, U, V) than variables.

The analysis did not depend on the number of independent variables

A polynomial fit results from the substitutions S=1, T=x, U=x^2, V=x^3

The general case for any order polynomial of any number of variables

may be used with a substitution, for example, S=1, T=x, U=y, V=x^2,

W=x*y, X= y^2, etc to terms such as exp(x), log(x), sin(x), cos(x).

Any number of terms may be used. The "1" is for the constant term.

Using the S,T,U,V notation above, fitting Y_approx to find a, b, c, d

Y_approx = a*S + b*T + c*U + d*V + ...

Choose S = 1.0 thus a is the constant term

Choose T = log(x) thus b is the coefficient of log(x)

Choose U = log(x*x) thus c is the coefficient of log(x*x)

Choose V = sin(x) thus d is the coefficient of sin(x)

see 4 additional terms in lsfit_log.c

Thus our x data, n = 21 samples in code, is fit to

Y_approx = a + b*log(x) + c*log(x*x) + d*sin(x) + ...

By putting the terms in a vector, simple indexing builds the matrix:

A[i][j] = A[i][j] + term_i * term_j summing over n terms using k

lsfit_log.c fitting log and other terms

lsfit_log_c.out

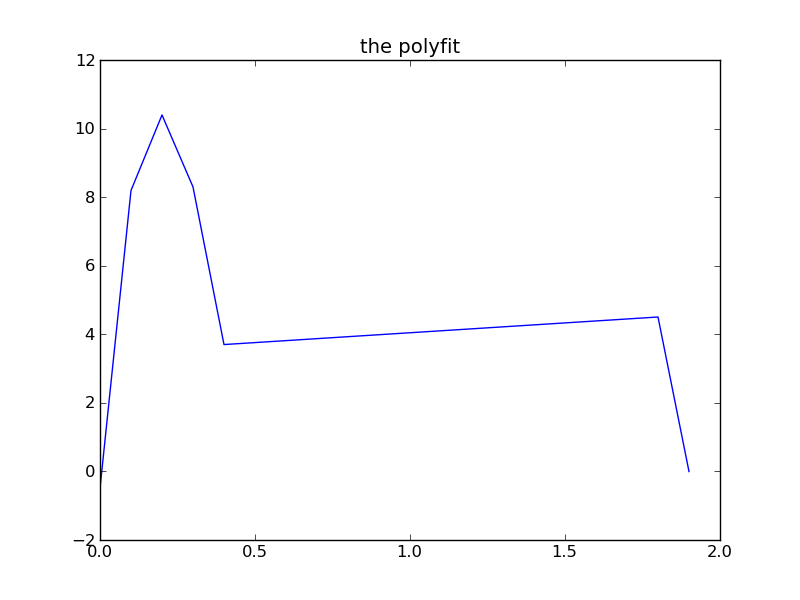

fitting a simple polynomial, 1D

Now, suppose you wanted to fit a simple polynomial:

Given the value of Y for at least four values of X,

Y_approximate = C0 + C1 * X + C2 * X^2 + C3 * X^3

Then the linear equations would be A*X=Y:

| SUM(1 *1) SUM(1 *X) SUM(1 *X^2) SUM(1 *X^3) | | C0 | | SUM(1 *Y) |

| SUM(X *1) SUM(X *X) SUM(X *X^2) SUM(X *X^3) | | C1 | | SUM(X *Y) |

| SUM(X^2*1) SUM(X^2*X) SUM(X^2*X^2) SUM(X^2*X^3) |x| C2 | = | SUM(X^2*Y) |

| SUM(X^3*1) SUM(X^3*X) SUM(X^3*X^2) SUM(X^3*X^3) | | C3 | | SUM(X^3*Y) |

Note that the (i,j) subscript in the A matrix has SUM(X^(i)*X^(j))

for i=0..3, j=0..3

In C, to build A matrix and Y vector for solving simultaneous equations:

// sample polynomial least square fit, nth power, m values of xd and yd

for(i=0; i<n+1; i++)

{

for(j=0; j<n+1; j++)

{

A[i][j] = 0.0;

}

Y[i] = 0.0;

}

for(k=0; k<m; k++)

{

y = yd[k];

x = xd[k];

pwr[0] = 1.0;

for(i=1; i<=n+1; i++) pwr[i] = pwr[i-1]*x;

for(i=0; i<n+1; i++)

{

for(j=0; j<n+1; j++)

{

A[i][j] = A[i][j] + pwr[i]*pwr[j]; // SUM

}

Y[i] = Y[i] + y*pwr[i];

}

}

Solve the simultaneous equations A*X=Y for X[0]=C0, X[1]=C1, X[2]=C2, X[3]=C3

Note that the sum is taken over all observations and the "1" is

just shown to emphasize the symmetry.

Sample code in various languages:

least_square_fit.c really old

least_square_fit_c.out

least_square.py3

least_square_py3.out

test_lsfit.py3 Python3 two methods

test_lsfit_py3.out output

lsfit.py3 just 1D version for copying

peval.py3 just 1D version for copying

least_square_fit.f90

least_square_fit_f90.out

Least_square.rb Ruby class new

test_least_square.rb test

test_least_square_rb.out test output

least_square_fit.java

least_square_fit_java.out

least_square_fit_3d.java

least_square_fit_3d_java.out

least_square_fit_4d.java

least_square_fit_4d_java.out

A specialized version for use later with PDE's

lsfit.java

test_lsfit.java

test_lsfit_java.out

test_lsfit2.java

test_lsfit2_java.out

test_lsfit3.java

test_lsfit3_java.out

test_lsfit4.java

test_lsfit4_java.out

test_lsfit5.java

test_lsfit5_java.out

test_lsfit6.java

test_lsfit6_java.out

test_lsfit7.java

test_lsfit7_java.out

uses simeq.java

The Makefile entry that makes test_lsfit_java.out

test_lsfit_java.out: test_lsfit.java lsfit.java simeq.java

javac -cp . simeq.java

javac -cp . lsfit.java

javac -cp . test_lsfit.java

java -cp . test_lsfit > test_lsfit_java.out

rm -f *.class

least_square_fit.adb

least_square_fit_ada.out

least_square_fit_2d.adb

least_square_fit_2d_ada.out

least_square_fit_3d.adb

least_square_fit_3d_ada.out

least_square_fit_4d.adb

least_square_fit_4d_ada.out