These are not intended to be complete lecture notes. Complicated figures or tables or formulas are included here in case they were not clear or not copied correctly in class. Computer commands, directory names and file names are included. Specific help may be included here yet not presented in class. Source code may be included in line or by a link. Lecture numbers correspond to the syllabus numbering.

Introduction: Hello, my name is Jon Squire and I have been programming computers since 1959. I have served my time in corporate management for 25 years. This course covers a little history of computer architecture through some of the latest advances and practical information you may use in buying, upgrading or building your own computer. After this course, you can say that you have performed "modeling and simulation" possibly a valuable asset in finding a job. You will be skilled in converting graphical and schematic information to textual information and the reverse. Some Brief History: The ISA card slots were replaced by PCI card slots that are replaced by external USB devices. The serial port for RS232 devices is replaced by the USB port. Floppy disk are disappearing along with that connector on the motherboard. RAM still uses DIMM's and the slots have grown to handle 4, 8 and 16 gigabytes of memory. ATA hard drives are replaced by SATA hard drives, 5TB and more available. Some rotating hard drives are being replaced by SSD, solid state drives. The printer port will be going as will the AGP graphics connector. HDMI and now DP. That expensive graphics card you bought will probably not work in your new computer. I have been saving architecture news. Overview: This course will present detailed information on the internal working of the CPU, cache, memory, busses and peripheral devices such as disk drives and DVD's. The course five part project will have each student simulate a small computer using the VHDL digital simulation language. Either Cadence VHDL or free GHDL. Read the syllabus. All of the lectures are covered in these WEB pages. Lecture notes are often updated. (You may ask questions.) And, sometimes corrected after questions. Some information is still presented on the blackboard/whiteboard. Check UMBC "Blackboard" for announcements and grades. The Top 500 Multiprocessor systems are evaluated about every six months. These are not your typical home computers. The Top 10 are shown www.top500.org/lists/2020/06 As many as 10 million cores! (How many in your computer?) More Lecture 1, pdf format The free market system and resulting competition, provide better and more economical products to consumers. Expect flip-flop between vendors for best or most economical products. A standard engineering statement is: Fast, Cheap, Reliable - pick any two. Monopolies: Ford Motor Company, Standard Oil of New Jersey, IBM, AT&T, ... Microsoft. Computer Architecture Development: System Architecture

Logic Design

Circuit Design

Device Physics For the inverter above, a chip cross section is:

N type and P type impurities are diffused into the silicon substrate through a mask, typically in a high temperature vacuum process. Oh! Oh! It is now predicted that Moore's Law: The gate width of transistors will halve every 18 months, will end in 2021. Prior estimates ended in 2028. Never fear, monolithic 3D is here. Mask Making and Processing

The black would be a metalization mask, here showing the transistor input connection. Other masks are for P+, N+, N well and via (the etch through the SiO2 to allow electrical connection to metal.)

The large round wafer, after processing with all the masks, is broke up into many rectangular dies. Each die is placed in a package and the input and output pads on the die are connected to the pins on the package. The die in the package is called a chip or IC chip or Integrated Circuit Chip. "Feature size" is the smallest dimension of metal width, gate width, metal spacing, etc. coming 12 nanometers is 0.000 000 012 meter or less than 1 millionth of an inch.

This gets smaller every year or so.

BYOC

Build your own computer

I have built several computers buying a case, motherboard, cpu, ram, drives, video, audio. My older desktop, AMD FX 8-core is Cybertron G1244A 16 GB ram, 1/2 TB SDD. (Replacing my old 12 core AMD that is acting up.) Now new Dell Precision 7920 Tower with 16 cores. You want DDR3, SATA3, SSD we will cover these in future lectures. Look at Homework 1, it is assigned today.

The best method of measuring a computers performance is to use benchmarks. Some suggestions from my personal experience preparing a benchmark suite and several updates and personal benchmark experience are presented in pdf format. Lecture 2 Smaller time is better, higher clock frequency is better. time = 1 / frequency T = 1/F and F = 1/T 1 picosecond = 1 / 1 THz 1 nanosecond = 1 / 1 GHz 1 microsecond = 1 / 1 MHz 1 millisecond = 1 / 1 Khz kilohertz KHz = 10^3 cycles per second clock megahertz MHz = 10^6 cycles per second clock gigahertz GHz = 10^9 cycles per second clock terahertz THz = 10^12 cycles per second clock Definitions: CPI Clocks Per Instruction MHz Megahertz, millions of cycles per second MIPS Millions of Instructions Per Second = MHz / CPI MOPS Millions of Operations Per Second MFLOPS Millions of Floating point Operations Per Second MIOPS Millions of Integer Operations Per Second Do not trust your computers clock or the software that reads and processes the time. First: Test the wall clock time against your watch. time_test.c time_test.java time_test.py time_test.f90 The program displays 0, 5, 10, 15 ... at 0 seconds, 5 seconds, 10 seconds etc.

demonstrate time_test if possible

Note the use of <time.h> and 'time()' Beware, midnight is zero seconds. Then 60 sec/min * 60 min/hr * 24 hr/day = 86,400 sec/day Just before midnight is 86,399 seconds. Running a benchmark across midnight may give a negative time. Then: Test CPU time, this should be just the time used by the program that is running. With only this program running, checking against your watch should work. time_cpu.c time_cpu.java time_cpu.py The program displays 0, 5, 10, 15 ... at 0 seconds, 5 seconds, 10 seconds etc. Note the use of <time.h> and '(double)clock()/(double)CLOCKS_PER_SEC' I have found one machine with the constant CLOCKS_PER_SECOND completely wrong and another machine with a value 64 that should have been 100. A computer used for real time applications could have a value of 1,000,000 or more. More graphs of FFT benchmarks The source code, C language, for the FFT benchmarks: Note the check run to be sure the code works. Note the non uniform data to avoid special cases. fft_time.c main program fftc.h header file FFT and inverse FFT for various numbers of complex data points The same source code was used for all benchmark measurements. These were optimized for embedded computer use where all constants were burned into rom. fft16.c ifft16.c fft32.c ifft32.c fft64.c ifft64.c fft128.c ifft128.c fft256.c ifft256.c fft512.c ifft512.c fft1024.c ifft1024.c fft2048.c ifft2048.c fft4096.c ifft4096.c Some of the result files: P1-166MHz P1-166MHz -O2 P2-266MHz P2-266MHz -O2 Celeron-500MHz P3-450MHz MS P3-450MHz Linux PPC-2.2GHz PPC-2.5GHz P4-2.53GHz XP Alpha-533MHz XP Xeon-2.8GHz Athlon-1.4GHz MS Athlon-1.4GHz XP Athlon-1.4GHz SuSe Laptop Win7 Laptop Ubuntu What if you are benchmarking a multiprocessor? For example, a two core or quad core, then use both CPU time and wall time to get average processor loading: time_mp2.c for two cores time_mp4.c for quad cores time_mp8.c for two quad cores time_mp12.c for two six cores The output from a two cores is: time_mp2_c.out for two core Xeon The output from four cores is: time_mp4_c.out for Mac quad G5 The output from eight cores is: time_mp8_c.out for AMD 12-core The output from twelve cores is: time_mp12_c.out for AMD 12-core end of time_mp12_c.out file: total CPU time is 342.970000 seconds wall time is 29.000000 seconds average number of processors used = 11.826552 time_mp12.c exiting Similar tests in Java time_test.java time_cpu.java time_mp4.java for quad cores time_mp8.java for eight cores time_mp8.java for eight and twelve cores time_mp4_java.out for quad Xeon G5 time_mp8_java.out for 8 thread Xeon G5 time_mp8_java_fx.out for 8 core AMD FX time_mp12_java.out for 8 thread Xeon G5 time_mp12_12_java.out for 12 core AMD matmul_thread4.java matmul_thread4_java.out Time_test and threads in Python time_test.py time_cpu.py parallel_matmul.py parallel_matmul_py.out OK, since these were old and I did not want to change them, they give some indications of performance on various machines with various operating systems and compiler options. To measure very short times, a higher quality, double-difference method is needed. The following program measures the time to do a double precision floating point add. This may be a time smaller than 1ns, 10^-9 seconds. A test harness is needed to calibrate the loops and make sure dead code elimination can not be used by the compiler. The the item to be tested is placed in a copy of the test harness to make the measurement. The time of the test harness is the stop minus start time in seconds. The time for the measurement is the stop minus start time in seconds. The difference, thus double difference, between the harness and measurement is the time for the item being measured. Here A = A + B with B not known to be a constant by the compiler, is reasonably expected to be a single instruction to add B to a register. If not, we have timed the full statement. The double difference time must be divided by the total number of iterations from the nested loops to get the time for the computer to execute the item once. An attempt is made to get a very stable time measurement. Doubling the number of iterations should double the time. Summary of double difference t1 saved run test harness t2 saved t3 saved run measurement, test harness with item to be timed t4 saved tdiff = (t4-t3) - (t2-t1) t_item = tdiff / number of iterations check against previous time, if not close, double iterations The source code is: time_fadd.c fadd on P4 2.53GHz fadd on Xeon 2.66GHz fadd on Mac 2.5GHz end of Mac output: time_fadd.c ... rep=16384, t measured=0.814363 rep=32768, t measured=1.62344 rep=65536, t measured=3.28666 tmeas=3.28666, t_prev=0, rep=65536 rep=65536, t measured=3.28829 tmeas=3.28829, t_prev=3.28666, rep=65536 time measured=3.28829, under minimum raw time=3.28829, fadd time=5.01629e-10, rep=65536, stable=0.000497342 Some extra information for students wanting to explore their computer: Windows OS Linux OS

What is in my computer?

start cd /proc control panel cat cpuinfo system device manager processor etc.

What processes are running in my computer?

ctrl-alt-del ps -el process top How do I easily time a program? command prompt time prog < input > output time

prog < input > output time The time available through normal software calls may be updated less than 30 times per second to more than a million times per second. A general rule of thumb is to have the time being measured be 10 seconds or more. This will give a reasonable accurate time measurement on all computers. Just repeat what is being measured if it does not run 10 seconds. Some history about computer time reporting. There were time sharing system where you bought time on the computer by the cpu second. There is the cpu time your program requires that is usually called your process time. There is also operating system cpu time. When there are multiple processes running, the operating system time slices, running each job for a short time, called a quanta. The operating system must manage memory, devices, scheduling and related tasks. In the past we had to keep a very close eye on how cpu time was charged to the users process verses the systems processes and was "dead time" the idle process, charged to either. From a users point of view, the user did not request to be swapped out, thus the user does not want any of the operating system time for stopping and restarting the users process to be charged to the user. Another historic tidbit, some Unix systems would add one microsecond to the time reported on each system request for the time. Never allowing the same time to be reported twice even if the clock had not updated. This was to ensure that all disk file times were unique and thus programs such as 'make' would be reliable. For more recent SPEC benchmarks, 2006 is suit date, run 2015,2016,2017,2018,2019,2020 see CPU integer benchmarks,SPECint, floating point benchmarks,SPECfp www.spec.org/cpu2006/Docs/ Some times you just have to buy the top of the line and forget benchmarks. Now find a display with 2,560 by 2,048 resolution! (other than the NASA display)

Newegg has an Acer 22 inch HDMI 1920 by 1080 for under $100 in 2013 HDMI replaces VGA connection from computer to display.

Repeating some definitions: CPI Clocks Per Instruction MHz megahertz, millions of cycles per second MIPS Millions of Instructions Per Second = MHz / CPI MOPS Millions of Operations Per Second MFLOPS Millions of Floating point Operations Per Second MIOPS Millions of Integer Operations Per Second (Classical, old, terms. Today would be billions.) Amdahl's Law (many forms, understand the concept) the part of time improved new time = ------------------------- + the part of time not improved factor improved by old time = the part of time improved + the part of time not improved old time speedup = -------- (always bigger over smaller when faster) new time Given: on some program, the CPU takes 9 sec and the disk I/O takes 1 sec What is the speedup using a CPU 9 times faster? 9 sec Answer: new time = ----- + 1 sec = 2 sec 9 old time = 9 + 1 = 10 sec speedup = 10 / 2 = 5 a pure number ------------------------------------------------------------------------------ Amdahl's Law (many forms, understand the concept) new performance speedup = --------------- old performance Given: Performance of M1 is 100 MFLOPS and 200 MIOPS Performance of M2 is 50 MFLOPS and 250 MIOPS On a program using 10% floating point and 90% integer Which is faster? What is the speedup? Answer; .1 * 100 + .9 * 200 = 190 MIPS .1 * 50 + .9 * 250 = 230 MIPS (M2 is faster) speedup = 230/190 = 1.21 ------------------------------------------------------------------------------ old performance new performance = ----------------------------------------------------- fraction of old improved ------------------------ + fraction of old unimproved improvement factor Given: half of a 100 MIPS machine is speeded up by a factor of 3 what is the speedup relative to the original machine? 1 1 Answer: new performance = --------- * 100 MIPS = ---- * 100 MIPS = 150 MIPS 0.5 .666 --- + 0.5 3 1 speedup = 150 / 100 = 1.5 (same as -------------------------------) fraction improved ------------------ + fraction improvement factor unimproved speedup is a pure number, no units. The units must cancel. ------------------------------------------------------------------------------ SPEC Benchmarks The benchmarks change infrequently, for example 2006 - 2016 same The speed seems to increase every year. SPEC Int2006, 9 in C, 3 in C++ SPEC Flt2006, 17 in assorted Fortran, C, C++ SPEC many rules to follow recent int results recent flt results Note number of core available, results seem to be using just one core. ------------------------------------------------------------------------------ CPI is average Clocks Per Instruction. units: clock/inst MHz is frequency, we use millions of clocks per second. units: clock/sec MIPS is millions of instruction per second. units: inst/sec Note: MIPS=MHz/CPI because (clock/sec) / (clock/inst) = 10^6 inst/sec ( 5/4 of people do not understand fractions. ) Computing average CPI, Clocks Per Instruction -------given--------------- ----------compute------------ type clocks %use product RR 3 25% 3 * 25 = 75 RM 4 50% 4 * 50 = 200 MM 5 25% 5 * 25 = 125 ______ ____ 100% 400 sum 400/100 = 4 average CPI -------given--------------- ----------compute------------ type clocks instructions product RR 3 25,000 3 * 25,000 = 75,000 RM 4 50,000 4 * 50,000 = 200,000 MM 5 25,000 5 * 25,000 = 125,000 _______ _______ 100,000 400,000 sum 400,000/100,000 = 4 average CPI Find the faster sequence of instructions Prog1 vs Prog2 -------given--------------------- type clocks A 1 B 2 C 3 instruction counts for A B C Prog1 2 1 2 Prog2 4 1 1 ----------compute------------------------------ Prog1 A 1 2 1 * 2 = 2 B 2 1 2 * 1 = 2 C 3 2 3 * 2 = 6 __ sum 10 clocks Prog2 A 1 4 1 * 4 = 4 B 2 1 2 * 1 = 2 C 3 1 3 * 1 = 3 __ sum 9 clocks more instructions yet faster speedup = 10 clocks / 9 clocks = 1.111 a number (no units) cs411_opcodes.txt different from Computer Organization and Design 1/8/2020 rd is register destination, the result, general register 1 through 31 rs is the first register, A, source, general register 0 through 31 rt is the second register, B, source, general register 0 through 31 --val---- generally a 16 bit number that gets sign extended --adr---- a 16 bit address, gets sign extended and added to (rx) "i" is generally immediate, operand value is in the instruction Opcode Operands Machine code format 6 5 5 5 5 6 number of bits in field nop RR 00 0 0 0 0 00 add rd,rs,rt RR 00 rs rt rd 0 32 sub rd,rs,rt RR 00 rs rt rd 0 34 mul rd,rs,rt RR 00 rs rt rd 0 27 div rd,rs,rt RR 00 rs rt rd 0 24 and rd,rs,rt RR 00 rs rt rd 0 13 or rd,rs,rt RR 00 rs rt rd 0 15 srl rd,rt,shf RR 00 0 rt rd shf 03 sll rd,rt,shf RR 00 0 rt rd shf 02 cmpl rd,rt RR 00 0 rt rd 0 11 j jadr J 02 ------jadr-------- lwim rd,rs,val M 15 rs rd ---val---- addi rd,rs,val M 12 rs rd ---val---- beq rs,rt,adr M 29 rs rt ---adr---- lw rd,adr(rx) M 35 rx rd ---adr---- sw rt,adr(rx) M 43 rx rt ---adr---- instruction bits (binary of 6 5 5 5 5 6 format above) 3 3 2 2 2 2 2 2 2 2 2 2 1 1 1 1 1 1 1 1 1 1 1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0 | | | | | 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 nop 0 0 0 0 0 0 a a a a a b b b b b r r r r r -ignored- 1 0 0 0 0 0 add r,a,b 0 0 0 0 0 0 a a a a a b b b b b r r r r r -ignored- 1 0 0 0 1 0 sub r,a,b 0 0 0 0 0 0 a a a a a b b b b b r r r r r -ignored- 0 1 1 0 1 1 mul r,a,b 0 0 0 0 0 0 a a a a a b b b b b r r r r r -ignored- 0 1 1 0 0 0 div r,a,b 0 0 0 0 0 0 a a a a a b b b b b r r r r r -ignored- 0 0 1 1 0 1 and r,a,b 0 0 0 0 0 0 a a a a a b b b b b r r r r r -ignored- 0 0 1 1 1 1 or r,a,b 0 0 0 0 0 0 0 0 0 0 0 b b b b b r r r r r s s s s s 0 0 0 0 1 1 srl r,b,s 0 0 0 0 0 0 0 0 0 0 0 b b b b b r r r r r s s s s s 0 0 0 0 1 0 sll r,b,s 0 0 0 0 0 0 0 0 0 0 0 b b b b b r r r r r -ignored- 0 0 1 0 1 1 cmpl r,b 0 0 0 0 1 0 -----address to bits (27:2) of PC------------------ j adr 0 0 1 1 1 1 x x x x x r r r r r ---2's complement value-------- lwim r,val(x) 0 0 1 1 0 0 x x x x x r r r r r ---2's complement value-------- addi r,val(x) 0 1 1 1 0 1 a a a a a b b b b b ---2's complement address------ beq a,b,adr 1 0 0 0 1 1 x x x x x r r r r r ---2's complement address------ lw r,adr(x) 1 0 1 0 1 1 x x x x x b b b b b ---2's complement address------ sw b,adr(x) Definitions: nop no operation, no programmer visible registers or memory are changed, except PC gets PC+4 j adr bits 0 through 25 of the instruction are inserted into PC(27:2) probably should zero bits PC(1:0) but should be zero already lw r,adr(x) load word into register r from memory location (register x plus sign extended adr field) sw b,adr(x) store word from register b into memory location (register x plus sign extended adr field) beq a,b,adr branch on equal, if the contents of register a are equal to the contents of register b, add the, shifted by two, sign extended adr to the PC (The PC will have 4 added by then) lwim r,val(x) load immediate, the contents of register x is added to the sign extended value and the result put into register r addi r,val(x) add immediate, the contents of register x is added to the sign extended value and the result added to register r add r,a,b add register a to register b and put result into register r sub r,a,b subtract register b from register a and put result into register r mul r,a,b multiply register a by register b and put result into register r div r,a,b divide register a by register b and put result into register r and r,a,b and register a to register b and put result into register r or r,a,b or register a to register b and put result into register r srl r,b,s shift the contents of register b by s places right and put result in register r sll r,b,s shift the contents of register b by s places left and put result in register r cmpl r,b one's complement of register b goes into register r Also: no instructions are to have side effects or additional "features" General register list (applies to MIPS ISA and project) (note: project op codes may differ from MIPS/SGI) Register notes 0 $0 zero value, not writable 1 $1 2 $2 $v0 return values (convention, not constrained by hardware) 3 $3 $v1 4 $4 $a0 arguments (convention, not constrained by hardware) 5 $5 $a1 6 $6 $a2 7 $7 $a3 8 $8 $t0 temporaries(not saved by software convention over calls) 9 $9 $t1 10 $10 $t2 11 $11 $t3 12 $12 $t4 13 $13 $t5 14 $14 $t6 15 $15 $t7 16 $16 $s0 saved by software convention over calls 17 $17 $s1 18 $18 $s2 19 $19 $s3 20 $20 $s4 21 $21 $s5 22 $22 $s6 23 $23 $s7 24 $24 $t8 more temporaries 25 $25 $t9 26 $26 27 $27 28 $28 $gp global pointer ( more designations by software convention) 29 $29 $sp stack pointer 30 $30 $fp frame pointer 31 $31 $ra return address Remember: From a hardware view registers 1 through 31 are general purpose and identical. The above table is just software conventions. Register zero is always zero! Basic digital logic IA-64 Itanium We will cover multicore and parallel processors later. Amdahls law applies to them also.

HW2 assignment

We now look at instructions in memory, how they got there and

how they execute:

1. Start by using an editor to enter compiler language statements.

The editor writes your source code to a disk file.

2. A compiler reads the source code disk file and produces

assembly language instructions for a specific ISA that

will perform your compiler language statements. The assembly

language is written to a disk file.

3. An assembler reads the assembly language disk file and produces

a relocatable binary version of your program and writes it to

a disk file. This may be a main program or just a function or

subroutine. Typical file name extension is .o or .obj

4. A linkage editor or binder or loader combines the relocatable

binary files into an executable file. Addresses are relocated

and typically all instructions are put sequentially in a code

segment, all constant data in another segment, variables and

arrays in another segment and possibly making other segments.

The addresses in all executable files for a specific computer

start at the same address. These are virtual addresses and the

operating system will place the segments into RAM at other

real memory addresses. Windows file extension .exe

5. A program is executed by having the operating system load the

executable file into RAM and set the program counter to the

address of the first instruction that is to be executed in

the program. All programs might have the same starting address,

yet the operating system has set up the TLB to translate the

virtual instruction and data addresses to physical memory addresses.

The physical addresses are not available to the program or to a

debugger. This is part of the security an operating system

provides to prevent one persons program from affecting another

persons program.

A simple example:

Compiler input int a, b=4, c=7;

a = b + c;

Assembly language fragment (not unique)

lw $2,12($fp) b at 12 offset from frame pointer

lw $3,16($fp) c at 16 offset from frame pointer

add $2,$2,$3 R format instruction

sw $2,8($fp) a at 8 offset from frame pointer

Memory addresses in bytes, integer typically 4 bytes, 32 bits.

Loaded in machine

virtual address content 32-bits 8-hexadecimal digits

00000000 8FC2000C lw $2,12($fp)

00000004 8FC30010 lw $3,16($fp)

00000008 00000000 nop inserted for pipeline

0000000C 00431020 add $2,$2,$3

00000010 AFC20008 sw $2,8,($fp)

$fp has 10000000 (data frame)

10000000 00000000

10000004 00000001

10000008 00000000? a after execution

1000000C 00000004 b

10000010 00000007 c

Instruction field format for add $2,$2,$3

0000 0000 0100 0011 0001 0000 0010 0000 binary for 00431020 hex

vvvv vvss ssst tttt dddd dhhh hhvv vvvv 6,5,5,5,5,6 bit fields

0 | 2 | 3 | 2 | 0 | 32 decimal values of fields

Instruction field format for lw $2,12($fp) $fp is register 30

1000 1111 1100 0010 0000 0000 0000 1100 binary for 8FC2000C hex

vvvv vvxx xxxd dddd aaaa aaaa aaaa aaaa 6,5,5,16 bit fields

35 | 30 | 2 | 12 decimal values of fields

The person writing the assembler chose the format of an assembly

language line. The person designing the ISA chose the format of

the instruction. Why would you expect them to be in the same order?

A very simplified data flow of the add instruction. From the

registers to the ALU and back to the registers.

A very simplified data flow of the add instruction. From the

registers to the ALU and back to the registers.

The VHDL to use the ALU will be given to you as:

ALU: entity WORK.alu_32 port map(inA => EX_A,

inB => EX_aluB,

inst => EX_IR,

result => EX_result);

We will call the upper input "A" and the lower input "B"

and the output "result".

The extra input, EX_IR, not shown on the diagram above

is the instruction the ALU is to perform, add, sub, etc.

The instructions we will use in this course are specifically:

cs411_opcodes.txt

Each student needs to understand what the instructions are

and the use of each field in each instruction.

(Note: a few have bit patterns different from the book and

different from previous semesters in order to prevent copying.)

Our MIPS architecture computer uses five clocks to execute

a load word instruction.

1 0 0 0 1 1 x x x x x r r r r r ---2's complement address------ lw r,adr(x)

1. Fetch the instruction from memory

2. Decode the instruction and read the value of register xxxxx

3. Compute the memory address by adding the sign extended bottom

16 bits of the instruction to the contents of register xxxxx.

4. Fetch the data word from the memory address.

5. Write the data word from memory into the register rrrrr.

The VHDL to use the ALU will be given to you as:

ALU: entity WORK.alu_32 port map(inA => EX_A,

inB => EX_aluB,

inst => EX_IR,

result => EX_result);

We will call the upper input "A" and the lower input "B"

and the output "result".

The extra input, EX_IR, not shown on the diagram above

is the instruction the ALU is to perform, add, sub, etc.

The instructions we will use in this course are specifically:

cs411_opcodes.txt

Each student needs to understand what the instructions are

and the use of each field in each instruction.

(Note: a few have bit patterns different from the book and

different from previous semesters in order to prevent copying.)

Our MIPS architecture computer uses five clocks to execute

a load word instruction.

1 0 0 0 1 1 x x x x x r r r r r ---2's complement address------ lw r,adr(x)

1. Fetch the instruction from memory

2. Decode the instruction and read the value of register xxxxx

3. Compute the memory address by adding the sign extended bottom

16 bits of the instruction to the contents of register xxxxx.

4. Fetch the data word from the memory address.

5. Write the data word from memory into the register rrrrr.

When we cover "pipelining" you will see why five clocks are

used for every instruction, even though some instructions

need less than five.

Computer languages come in many varieties.

The information above applies to languages such

as C, C++, Fortran, Ada and others.

Many languages abstract the concept of binary relocatable

code, in what was originally called "crunch code".

These languages use their own form of intermediate files.

For example Pascal, Java, Python and others.

Other languages directly interpret the users source

files, possibly with some preprocessing.

For example SML, Haskel, Lisp, MatLab, Mathematica and

others.

With a completely new computer architecture, the first

"language" would be an assembly language. From this,

a primitive operating system would be built. Then,

typically an existing C compiler would be modified

for the new computer architecture. An alternative is

to build a cross compiler from C and gas, to

bootstrap existing code to the new architecture.

From then on, "reuse" goes into full effect and

millions of lines of existing software can be

running on the new computer architecture.

For Homework 3

The computer irix.gl.umbc.edu is no longer available.

This was a MIPS architecture using the same instructions

as we are using. The MIPS architecture is studied because

it is a much simpler and easier to understand architecture

than the Intel X86, IA-32.

Thus, to see the instructions in RAM, we will use the gdb

debugger on an Intel X86.

HW3 information

The information in hex.out will have lines similar to:

(gdb) disassemble

Dump of assembler code for function main:

RAM addr offset op code address and register

0x08048384 <main+0>: lea 0x4(%esp),%ecx

0x08048388 <main+4>: and $0xfffffff0,%esp

0x0804838b <main+7>: pushl 0xfffffffc(%ecx)

End of assembler dump.

(gdb) x/60x main

Note: 16 bytes per line, 4 32-bit words

but, these are X86 instructions, not MIPS !

0x8048384 <main>: 0x04244c8d 0xfff0e483 0x8955fc71 0x535657e5

0x8048394 <main+16>: 0x58ec8351 0x4589e089 0xe445c7cc 0x00000064

## ##

<main+19>----| <main+31>---|

0x8048397 0x80483A3

Because the MIPS architecture we are studying is a big endian

machine, we will count bytes from left to right for homework 3.

In hexadecimal, 0x12345678 is stored big end first 12

34

56

78

Little endian 0x12345678 is stored little end first 78

56

34

Each byte, 8 bits, is two hex digits 12

When we cover "pipelining" you will see why five clocks are

used for every instruction, even though some instructions

need less than five.

Computer languages come in many varieties.

The information above applies to languages such

as C, C++, Fortran, Ada and others.

Many languages abstract the concept of binary relocatable

code, in what was originally called "crunch code".

These languages use their own form of intermediate files.

For example Pascal, Java, Python and others.

Other languages directly interpret the users source

files, possibly with some preprocessing.

For example SML, Haskel, Lisp, MatLab, Mathematica and

others.

With a completely new computer architecture, the first

"language" would be an assembly language. From this,

a primitive operating system would be built. Then,

typically an existing C compiler would be modified

for the new computer architecture. An alternative is

to build a cross compiler from C and gas, to

bootstrap existing code to the new architecture.

From then on, "reuse" goes into full effect and

millions of lines of existing software can be

running on the new computer architecture.

For Homework 3

The computer irix.gl.umbc.edu is no longer available.

This was a MIPS architecture using the same instructions

as we are using. The MIPS architecture is studied because

it is a much simpler and easier to understand architecture

than the Intel X86, IA-32.

Thus, to see the instructions in RAM, we will use the gdb

debugger on an Intel X86.

HW3 information

The information in hex.out will have lines similar to:

(gdb) disassemble

Dump of assembler code for function main:

RAM addr offset op code address and register

0x08048384 <main+0>: lea 0x4(%esp),%ecx

0x08048388 <main+4>: and $0xfffffff0,%esp

0x0804838b <main+7>: pushl 0xfffffffc(%ecx)

End of assembler dump.

(gdb) x/60x main

Note: 16 bytes per line, 4 32-bit words

but, these are X86 instructions, not MIPS !

0x8048384 <main>: 0x04244c8d 0xfff0e483 0x8955fc71 0x535657e5

0x8048394 <main+16>: 0x58ec8351 0x4589e089 0xe445c7cc 0x00000064

## ##

<main+19>----| <main+31>---|

0x8048397 0x80483A3

Because the MIPS architecture we are studying is a big endian

machine, we will count bytes from left to right for homework 3.

In hexadecimal, 0x12345678 is stored big end first 12

34

56

78

Little endian 0x12345678 is stored little end first 78

56

34

Each byte, 8 bits, is two hex digits 12

Get paper handout, fill in values for registers and memory

as we discuss the instructions in this lecture.

The program starts with PC set to address zero.

The instructions are defined on cs411_opcodes.txt

part1.asm

part1.abs

part1.abs

address instruction assembly language

00000000 8C010074 lw $1,w1($0)

00000004 8C020078 lw $2,w2($0)

00000008 8C03007C lw $3,w3($0)

0000000C 00000000 nop

00000010 00000000 nop

00000014 00232020 add $4,$1,$3

00000018 00222822 sub $5,$1,$2

0000001C 000133C2 sll $6,$1,15

00000020 00023C03 srl $7,$2,16

00000024 0003400B cmpl $8,$3

00000028 0022480D or $9,$1,$2

0000002C 0023500F and $10,$1,$3

00000030 00435818 div $11,$2,$3

00000034 0062601B mul $12,$3,$2

00000038 AC010080 sw $1,w4($0)

0000003C 300D0074 addi $13,w1

00000040 00000000 nop

00000044 00000000 nop

00000048 8DAE0004 lw $14,4($13)

0000004C 31AF0008 addi $15,8($13)

00000050 3C100010 lwim $16,16

00000054 00000000 nop

00000058 00000000 nop

0000005C ADE30008 sw $3,8($15)

00000060 00000000 nop

00000064 00000000 nop

00000068 00000000 nop

0000006C 00000000 nop

00000070 00000000 nop

00000074 11111111 w1: word 0x11111111

00000078 22222222 w2: word 0x22222222

0000007C 33333333 w3: word 0x33333333

00000080 44444444 w4: word 0x44444444

After the CPU has executed the first instruction:

General Registers RAM memory

initial final

$0 00000000

--------

$1 11111111 00000074 11111111

-------- -------- ____________

$2 00000078 22222222

______________ -------- ____________

$3 0000007c 33333333

______________ -------- ____________

$4 00000080 44444444

______________ -------- ____________

$5 00000084 xxxxxxxx

______________ -------- ____________

$6

______________

$7

______________

$8

______________

$9

______________

$10

______________

$11

______________

$12

______________

This is part of your project: part1.abs

and the result of running that small program part1.chk:

part1.chk

Note the large amount of information printed each clock time.

Note that it takes 5 clock cycles to finish an instruction.

Basic MUX Truth Table and Schematic

How MUX are used to route data

How MUX are used to route data

You can see much of the code for the above in the

starter code for Proj1:

part1_start.vhdl

There are basic design principles for computer architecture

and many apply to broader applications.

Design Principle 1:

Simplicity is best achieved through regularity.

A few building blocks, used systematically, will have

fewer errors, be available sooner and sell for less.

A uniform instruction set allows better compilers.

Design Principle 2:

Smaller is faster:

Smaller feature size means signals can move faster.

Shorter paths, less stages, allow completion sooner.

Design Principle 3:

Good design requires good compromises.

There are no perfect architectures. There are kluges.

Design Principle 4:

Make the common part fast.

Amdahl's law, be sure you are maximizing speedup.

Pentium 4 Hyper threading

Intel Core Duo

AMD quad core, one core shown

$329 for just 1/2 quad core processor

a 4 CPU, 8GB RAM configuration

Now 12-core 16GB RAM, 3 hard drives, 2 DVD writers

Practice safe computing!

Beware Malware, Spyware and Adware:

Do everything you can to keep malware from infecting your systems,

malware authors do all they can to keep their work from being

detected and removed. By looking at the methods that malware uses

to keep itself safe, you can better root it out and remove

it before the damage is done. Downloading attachments is the

primary way malware gets into your system.

HW3 assigned

You can see much of the code for the above in the

starter code for Proj1:

part1_start.vhdl

There are basic design principles for computer architecture

and many apply to broader applications.

Design Principle 1:

Simplicity is best achieved through regularity.

A few building blocks, used systematically, will have

fewer errors, be available sooner and sell for less.

A uniform instruction set allows better compilers.

Design Principle 2:

Smaller is faster:

Smaller feature size means signals can move faster.

Shorter paths, less stages, allow completion sooner.

Design Principle 3:

Good design requires good compromises.

There are no perfect architectures. There are kluges.

Design Principle 4:

Make the common part fast.

Amdahl's law, be sure you are maximizing speedup.

Pentium 4 Hyper threading

Intel Core Duo

AMD quad core, one core shown

$329 for just 1/2 quad core processor

a 4 CPU, 8GB RAM configuration

Now 12-core 16GB RAM, 3 hard drives, 2 DVD writers

Practice safe computing!

Beware Malware, Spyware and Adware:

Do everything you can to keep malware from infecting your systems,

malware authors do all they can to keep their work from being

detected and removed. By looking at the methods that malware uses

to keep itself safe, you can better root it out and remove

it before the damage is done. Downloading attachments is the

primary way malware gets into your system.

HW3 assigned

VHDL is used for structural and functional modeling of digital circuits.

The geometric modeling is handled by other Cadence programs. First, simple VHDL statements for logic gates: logic gates and corresponding VHDL statements VHDL comments start with -- acting like C++ and Java // VHDL like C++ and Java end statements with a semicolon ; VHDL uses "library" and "use" where C++ uses #include Java uses import VHDL uses ".all" where Java uses ".*" VHDL uses names similar to Pascal, case insensitive, var is same as Var, VAR VHDL has a two part basic structure for each circuit that is more than one gate, the "entity" and the "architecture". There needs to be a "library" and "use" for features that are used. The word "port" is used to mean interface. The term "std_logic" is a type used for one bit. The term "std_logic_vector" is a type used for more than one bit. The time from an input changing to when the output may change is optional. "after 1 ps" indicates 1 pico second. "after 2 ns" indicates 2 nano seconds. This circuit is coded as a full adder component in VHDL:

library IEEE; use IEEE.std_logic_1164.all; entity fadd is -- full adder stage, interface port(a : in std_logic; b : in std_logic; cin : in std_logic; s : out std_logic; cout : out std_logic); end entity fadd; architecture circuits of fadd is -- full adder stage, body begin -- circuits of fadd s <= a xor b xor cin after 1 ps; cout <= (a and b) or (a and cin) or (b and cin) after 1 ps; end architecture circuits; -- of fadd Notice that entity fadd is ... end entity fadd; is a statement Notice that architecture circuits of fadd is ... end architecture circuits; is a statement. The "of fadd" connects the architecture to the entity. The arbitrary signal names a, b, cin, s, cout were required to be assigned a type, std_logic in this case, before being used. Typical for many programming languages. Now, use a loop to combine 32 fadd into a 32 bit adder: Note: to use fadd , a long statement must be used a0: entity WORK.fadd port map(a(0), b(0), cin, sum(0), c(0)); A unique label a0 followed by a colon : Then entity WORK.fadd naming the entity to be used in WORK library. Then port map( with actual signals for a, b, cin, s, cout ) Note subscripts for bit numbers in parenthesis, not [] . The first and last stage are slightly different from the 30 stages in the loop. add32.vhdl using the fadd above Another variation of an adder, propagate generate.

add32pg_start.vhdl for HW4 A "main" entity to use the component add32 with test data. Note: just structure of "entity" then big architecture entity tadd32 is -- test bench for add32.vhdl end tadd32; -- no requirement to use "main" architecture circuits of tadd32 is ... tadd32.vhdl for main entity for HW4 The additional file tadd32.run was needed to tell the VHDL simulator how long to run: tadd32.run used to stop simulation output of cadence simulation The cadence output from the write statements in tadd32.vhdl is: tadd32.chk output of tadd32.vhdl The GHDL output from the write statements in tadd32.vhdl is: tadd32.chkg output of tadd32.vhdl The command line commands for using cadence are: run_ncvhdl.bash -v93 -messages -linedebug -cdslib ~/cs411/vhdl2/cds.lib -hdlvar ~/cs411/vhdl2/hdl.var -smartorder add32.vhdl tadd32.vhdl run_ncelab.bash -v93 -messages -access rwc -cdslib ~/cs411/vhdl2/cds.lib -hdlvar ~/cs411/vhdl2/hdl.var tadd32 run_ncsim.bash -input tadd32.run -batch -logfile tadd32.out -messages -cdslib ~/cs411/vhdl2/cds.lib -hdlvar ~/cs411/vhdl2/hdl.var tadd32 Or use make -f Makefile_411 tadd32.out diff -iw tadd32.out tadd32.chk The command line commands for using GHDL are: ghdl -a --ieee=synopsys add32.vhdl ghdl -a --ieee=synopsys tadd32.vhdl ghdl -e --ieee=synopsys tadd32 ghdl -r --ieee=synopsys tadd32 --stop-time=65ns > tadd32.gout diff -iw tadd32.gout tadd32.chkg Or use make -f Makefile_ghdl tadd32.gout output of simulation Use a Makefile for sets of commands. You will be running more than once to get homework and projects correct. I provide a Makefile_411 for cadence and Makefile_ghdl for GHDL. Browse and use as a reference for HW4, HW6, and Project. You must do the setup exactly as stated in HW4 Sample designs and corresponding VHDL code VHDL Language Compact Summary The setup for HW4, HW6 and Project will be covered in the next lecture. You will be using command lines in a terminal window on linux.gl.umbc.edu You are given a cs411.tar file that creates the needed directories for Cadence. Makefile_ghdl sets up Makefile for GHDL. You will be modifying a Makefile for HW4, HW6, and Project parts. The basic VHDL commands are shown in the Makefile's Makefile_411 for Cadence Makefile_ghdl for GHDL

The number system of interest in computer architecture re:

Sign Magnitude - binary magnitude with sign bit

Ones Complement - negative numbers have all bits inverted

Twos Complement - Ones Complement with one added to lsb

All number systems have the sign bit 0 for positive and

1 for negative. The msb is the sign bit and thus the

word length is important.

Number systems, using 4-bit words

Hex Binary Sign Ones Twos

Digit Bits Magnitude Complement Complement

0 0000 0 0 0

1 0001 1 1 1

2 0010 2 2 2

3 0011 3 3 3

4 0100 4 4 4

5 0101 5 5 5

6 0110 6 6 6

7 0111 7 7 7

8 1000 -0 -7 -8 difference starts here

9 1001 -1 -6 -7

A 1010 -2 -5 -6

B 1011 -3 -4 -5

C 1100 -4 -3 -4

D 1101 -5 -2 -3

E 1110 -6 -1 -2

F 1111 -7 -0 -1

to negate: invert invert invert all bits

sign all bits and add one

math -(-N)=N OK OK -(-8)=-8 YUK!

Addition Sign Ones Twos

Magnitude Complement Complement

2 0010 0010 0010

+3 0011 0011 0011

___ ---- ---- ----

+5 0101 0101 0101

OK

4 0100 0100 0100

+5 0101 0101 0101

--- ---- ---- ----

9 1001 1001 1001

-1 -6 -7

overflow gives wrong answer on

fixed length, computer, numbers

Subtraction: negate second operand and add

4 0100 0100 0100

-5 1101 1010 1011

--- ---- ---- ----

-1 1001 1110 1111

-1 -1 -1

works, using correct definition of negate

Sign Magnitude bigger minus smaller, fix sign

Twos Complement, just add. Most computers today

Ones Complement, just add. e.g. Univac computers

It was discovered the "add one" was almost

zero cost, thus most integer arithmetic is

twos complement.

The hardware adder has a carry-in input that implements

the "add one" by making this input a "1".

Basic one bit adder, called a full adder.

Combining four full adders to make a 4-bit adder.

Combining four full adders to make a 4-bit adder.

Combining eight 4-bit adders to make a 32-bit adder.

Combining eight 4-bit adders to make a 32-bit adder.

A quick look at VHDL that implements the above diagrams,

with some optimization, is an add32

Using a multiplexor with 32-bit adder for subtraction.

"sub" is '1' for subtract, '0' for add.

(NC is no connection, use open in VHDL)

A quick look at VHDL that implements the above diagrams,

with some optimization, is an add32

Using a multiplexor with 32-bit adder for subtraction.

"sub" is '1' for subtract, '0' for add.

(NC is no connection, use open in VHDL)

There are many types of adders. "Bit slice" will be covered in the

next lecture on the ALU. First, related to Homework 4 is the

"propagate generate" adder, then the "Square root N" adder for

Computer Engineering majors.

The "Propagate Generate" PG adder has a propagation time

proportional to log_2 N for N bits.

There are many types of adders. "Bit slice" will be covered in the

next lecture on the ALU. First, related to Homework 4 is the

"propagate generate" adder, then the "Square root N" adder for

Computer Engineering majors.

The "Propagate Generate" PG adder has a propagation time

proportional to log_2 N for N bits.

The "add4pg" unit has four full adders and extra circuits,

defined by equations rather than logic gates:

-- add4pg.vhdl entity and architecture

-- for 4 bits of a propagate-generate, pg, adder

library IEEE;

use IEEE.std_logic_1164.all;

entity add4pg is

port(a : in std_logic_vector(3 downto 0);

b : in std_logic_vector(3 downto 0);

cin : in std_logic;

sum : out std_logic_vector(3 downto 0);

p : out std_logic;

g : out std_logic );

end entity add4pg ;

architecture circuits of add4pg is

signal c : std_logic_vector(2 downto 0);

begin -- circuits of add4pg

sum(0) <= a(0) xor b(0) xor cin after 2 ps;

c(0) <= (a(0) and b(0)) or (a(0) and cin) or (b(0) and cin) after 2 ps;

sum(1) <= a(1) xor b(1) xor c(0) after 2 ps;

c(1) <= (a(1) and b(1)) or

(a(1) and a(0) and b(0)) or

(a(1) and a(0) and cin) or

(a(1) and b(0) and cin) or

(b(1) and a(0) and b(0)) or

(b(1) and a(0) and cin) or

(b(1) and b(0) and cin) after 2 ps;

sum(2) <= a(2) xor b(2) xor c(1) after 2 ps;

c(2) <= (a(2) and b(2)) or (a(2) and c(1)) or (b(2) and c(1)) after 2 ps;

sum(3) <= a(3) xor b(3) xor c(2) after 2 ps;

p <= (a(0) or b(0)) and (a(1) or b(1)) and

(a(2) or b(2)) and (a(3) or b(3)) after 2 ps;

g <= (a(3) and b(3)) or ((a(3) or b(3)) and

((a(2) and b(2)) or ((a(2) or b(2)) and

((a(1) and b(1)) or ((a(1) or b(1)) and

((a(0) and b(0)))))))) after 2 ps;

end architecture circuits; -- of add4pg

The "PG4" box is defined by equations and thus no schematic:

-- pg4.vhdl entity and architecture Carry-Lookahead unit

-- pg4 is driven by four add4pg entities

library IEEE;

use IEEE.std_logic_1164.all;

entity pg4 is

port(p0 : in std_logic;

p1 : in std_logic;

p2 : in std_logic;

p3 : in std_logic;

g0 : in std_logic;

g1 : in std_logic;

g2 : in std_logic;

g3 : in std_logic;

cin : in std_logic;

c1 : out std_logic;

c2 : out std_logic;

c3 : out std_logic;

c4 : out std_logic);

end entity pg4 ;

architecture circuits of pg4 is

begin -- circuits of pg4

c1 <= g0 or (p0 and cin) after 2 ps;

c2 <= g1 or (p1 and g0) or (p1 and p0 and cin) after 2 ps;

c3 <= g2 or (p2 and g1) or (p2 and p1 and g0) or

(p2 and p1 and p0 and cin) after 2 ps;

c4 <= g3 or

(p3 and g2) or

(p3 and p2 and g1) or

(p3 and p2 and p1 and g0) or

(p3 and p2 and p1 and p0 and cin) after 2 ps;

end architecture circuits; -- of pg4

The "Carry Select" CS, adder gets increased speed from computing

the possible output with carry in to that stage being both

'0' and '1'. The "Carry Select" adder has a propagation time

proportional to sqrt(N) for N bits.

The "add4pg" unit has four full adders and extra circuits,

defined by equations rather than logic gates:

-- add4pg.vhdl entity and architecture

-- for 4 bits of a propagate-generate, pg, adder

library IEEE;

use IEEE.std_logic_1164.all;

entity add4pg is

port(a : in std_logic_vector(3 downto 0);

b : in std_logic_vector(3 downto 0);

cin : in std_logic;

sum : out std_logic_vector(3 downto 0);

p : out std_logic;

g : out std_logic );

end entity add4pg ;

architecture circuits of add4pg is

signal c : std_logic_vector(2 downto 0);

begin -- circuits of add4pg

sum(0) <= a(0) xor b(0) xor cin after 2 ps;

c(0) <= (a(0) and b(0)) or (a(0) and cin) or (b(0) and cin) after 2 ps;

sum(1) <= a(1) xor b(1) xor c(0) after 2 ps;

c(1) <= (a(1) and b(1)) or

(a(1) and a(0) and b(0)) or

(a(1) and a(0) and cin) or

(a(1) and b(0) and cin) or

(b(1) and a(0) and b(0)) or

(b(1) and a(0) and cin) or

(b(1) and b(0) and cin) after 2 ps;

sum(2) <= a(2) xor b(2) xor c(1) after 2 ps;

c(2) <= (a(2) and b(2)) or (a(2) and c(1)) or (b(2) and c(1)) after 2 ps;

sum(3) <= a(3) xor b(3) xor c(2) after 2 ps;

p <= (a(0) or b(0)) and (a(1) or b(1)) and

(a(2) or b(2)) and (a(3) or b(3)) after 2 ps;

g <= (a(3) and b(3)) or ((a(3) or b(3)) and

((a(2) and b(2)) or ((a(2) or b(2)) and

((a(1) and b(1)) or ((a(1) or b(1)) and

((a(0) and b(0)))))))) after 2 ps;

end architecture circuits; -- of add4pg

The "PG4" box is defined by equations and thus no schematic:

-- pg4.vhdl entity and architecture Carry-Lookahead unit

-- pg4 is driven by four add4pg entities

library IEEE;

use IEEE.std_logic_1164.all;

entity pg4 is

port(p0 : in std_logic;

p1 : in std_logic;

p2 : in std_logic;

p3 : in std_logic;

g0 : in std_logic;

g1 : in std_logic;

g2 : in std_logic;

g3 : in std_logic;

cin : in std_logic;

c1 : out std_logic;

c2 : out std_logic;

c3 : out std_logic;

c4 : out std_logic);

end entity pg4 ;

architecture circuits of pg4 is

begin -- circuits of pg4

c1 <= g0 or (p0 and cin) after 2 ps;

c2 <= g1 or (p1 and g0) or (p1 and p0 and cin) after 2 ps;

c3 <= g2 or (p2 and g1) or (p2 and p1 and g0) or

(p2 and p1 and p0 and cin) after 2 ps;

c4 <= g3 or

(p3 and g2) or

(p3 and p2 and g1) or

(p3 and p2 and p1 and g0) or

(p3 and p2 and p1 and p0 and cin) after 2 ps;

end architecture circuits; -- of pg4

The "Carry Select" CS, adder gets increased speed from computing

the possible output with carry in to that stage being both

'0' and '1'. The "Carry Select" adder has a propagation time

proportional to sqrt(N) for N bits.

The above diagram has only 10 bits drawn.

You need 32 bits. Thus you need additional group of 5,

group of 6, group of 7, and a final group of 4.

1+2+3+4+5+6+7+4=32

If N = 64, log2 N = 6, sqrt(N) = 8 speedup vs complexity (size)

Behavioral VHDL for our add32:

library IEEE;

use IEEE.std_logic_1164.all;

entity add32 is

port(a : in std_logic_vector(31 downto 0);

b : in std_logic_vector(31 downto 0);

cin : in std_logic;

sum : out std_logic_vector(31 downto 0);

cout : out std_logic);

end entity add32; -- same for all implementations

library IEEE;

use IEEE.std_logic_arith.all;

architecture behavior of add32 is

signal temp : std_logic_vector(32 downto 0);

signal vcin : std_logic_vector(32 downto 0) := X"00000000"&'0';

signal va : std_logic_vector(32 downto 0) := X"00000000"&'0';

signal vb : std_logic_vector(32 downto 0) := X"00000000"&'0';

-- 33 bits (32 downto 0) needed to compute cout

begin -- circuits of add32

vcin(0) <= cin;

va(31 downto 0) <= a;

vb(31 downto 0) <= b;

temp <= unsigned(va) + unsigned(vb) + unsigned(vcin); -- 33 bit add

cout <= temp(32) after 6 ps;

sum <= temp(31 downto 0) after 6 ps;

end architecture behavior; -- of add32

Now go to Homework 4 and the setup commands.

Expect errors. Nobody's perfect.

For many errors after typing 'make'

touch add32.vhdl

make |& more # hit space for next page, enter for next line

make >& add32.prt # results, including error go to a file

# use editor to read file, you can search

FIX THE FIRST ERROR !!!!

Yes, you can fix other errors also, but one error can cause

a cascading effect and produce many errors.

Don't panic when there was only one error, you fixed that,

then the next run you get 37 errors. The compiler has stages,

it stops on a stage if there is an error. Fixing that error

lets the compiler move to the next stage and check for other

types of errors.

Don't give up. Don't make wild guesses. Do experiment with

one change at a time. You may actually have to read some

of the handouts :)

Cadence VHDL error message. (actually an extra semicolon)

ncvhdl: 05.40-s011: (c) Copyright 1995-2005 Cadence Design Systems, Inc.

OUTT : out std_logic;);

|

ncvhdl_p: *E,PORNKW (error.vhdl,10|28): identifier expected.

OUTT : out std_logic;);

Then to VHDL resource.

The above diagram has only 10 bits drawn.

You need 32 bits. Thus you need additional group of 5,

group of 6, group of 7, and a final group of 4.

1+2+3+4+5+6+7+4=32

If N = 64, log2 N = 6, sqrt(N) = 8 speedup vs complexity (size)

Behavioral VHDL for our add32:

library IEEE;

use IEEE.std_logic_1164.all;

entity add32 is

port(a : in std_logic_vector(31 downto 0);

b : in std_logic_vector(31 downto 0);

cin : in std_logic;

sum : out std_logic_vector(31 downto 0);

cout : out std_logic);

end entity add32; -- same for all implementations

library IEEE;

use IEEE.std_logic_arith.all;

architecture behavior of add32 is

signal temp : std_logic_vector(32 downto 0);

signal vcin : std_logic_vector(32 downto 0) := X"00000000"&'0';

signal va : std_logic_vector(32 downto 0) := X"00000000"&'0';

signal vb : std_logic_vector(32 downto 0) := X"00000000"&'0';

-- 33 bits (32 downto 0) needed to compute cout

begin -- circuits of add32

vcin(0) <= cin;

va(31 downto 0) <= a;

vb(31 downto 0) <= b;

temp <= unsigned(va) + unsigned(vb) + unsigned(vcin); -- 33 bit add

cout <= temp(32) after 6 ps;

sum <= temp(31 downto 0) after 6 ps;

end architecture behavior; -- of add32

Now go to Homework 4 and the setup commands.

Expect errors. Nobody's perfect.

For many errors after typing 'make'

touch add32.vhdl

make |& more # hit space for next page, enter for next line

make >& add32.prt # results, including error go to a file

# use editor to read file, you can search

FIX THE FIRST ERROR !!!!

Yes, you can fix other errors also, but one error can cause

a cascading effect and produce many errors.

Don't panic when there was only one error, you fixed that,

then the next run you get 37 errors. The compiler has stages,

it stops on a stage if there is an error. Fixing that error

lets the compiler move to the next stage and check for other

types of errors.

Don't give up. Don't make wild guesses. Do experiment with

one change at a time. You may actually have to read some

of the handouts :)

Cadence VHDL error message. (actually an extra semicolon)

ncvhdl: 05.40-s011: (c) Copyright 1995-2005 Cadence Design Systems, Inc.

OUTT : out std_logic;);

|

ncvhdl_p: *E,PORNKW (error.vhdl,10|28): identifier expected.

OUTT : out std_logic;);

Then to VHDL resource.

The Arithmetic Logic Unit is the section of the CPU that actually performs add, subtract, multiply, divide, and, or, floating point and other operations. The choice of which operations are implemented is determined by the Instruction Set Architecture, ISA. Most modern computers separate the integer unit from the floating point unit. Many modern architectures have simple integer, complex integer, and an assortment of floating point units.The ALU gets inputs from registers reg_use.jpg Where did numbers such as 100010 for subop and 000010 for sllop come from ? cs411_opcodes.txt -- alu_start.vhdl library IEEE; use IEEE.std_logic_1164.all; entity alu_32 is port(inA : in std_logic_vector (31 downto 0); inB : in std_logic_vector (31 downto 0); inst : in std_logic_vector (31 downto 0); result : out std_logic_vector (31 downto 0)); end entity alu_32; architecture schematic of alu_32 is signal cin : std_logic := '0'; signal cout : std_logic; begin -- schematic -- -- REPLACE THIS SECTION FOR PROJECT PART 1 -- (add the signals you need above the "begin" -- add logic below the "begin") adder: entity WORK.add32 port map(a => inA, b => inB, -- change cin => cin, -- change sum => result, -- change cout => cout); -- examples of entity instantiations: -- bsh: entity WORK.bshift port map (left => sllop, -- logical => '1', -- shift => inst(10 downto 6), -- input => inB, -- output => bresult); -- r1: entity WORK.equal6 port map (inst => inst(31 downto 26), -- test => "000000", -- equal => rrop); -- s1: entity WORK.equal6 port map (inst => inst(5 downto 0), -- test => "100010", -- 34 -- equal => subop1); -- s1a: subop <= subop1 and rrop; -- S_sel <= sllop_or_srlop; -- for mux32_6 -- much more end architecture schematic; -- of alu_32 Many variations of subop, subop1, subop_and, subopa Your starter part1ce_start.vhdl uses subopa short for subop_and. part1ce_start.vhdl

-- mux32_3.vhdl library IEEE; use IEEE.std_logic_1164.all; entity mux32_3 is port(in0 : in std_logic_vector (31 downto 0); in1 : in std_logic_vector (31 downto 0); in2 : in std_logic_vector (31 downto 0); ct1 : in std_logic; -- pass in1(has priority) ct2 : in std_logic; -- pass in2 result : out std_logic_vector (31 downto 0)); end entity mux32_3; architecture behavior of mux32_3 is begin -- behavior -- no process needed with concurrent statements result <= in1 when ct1='1' else in2 when ct2='1' else in0 after 50 ps; end architecture behavior; -- of mux32_3 -- mux_32_6.vhdl have only zero or one ctl ='1' library IEEE; use IEEE.std_logic_1164.all; entity mux_32_6 is port(in0 : in std_logic_vector (31 downto 0); in1 : in std_logic_vector (31 downto 0); in2 : in std_logic_vector (31 downto 0); in3 : in std_logic_vector (31 downto 0); in4 : in std_logic_vector (31 downto 0); in5 : in std_logic_vector (31 downto 0); ctl1 : in std_logic; ctl2 : in std_logic; ctl3 : in std_logic; ctl4 : in std_logic; ctl5 : in std_logic; result : out std_logic_vector (31 downto 0)); end entity mux_32_6; architecture behavior of mux_32_6 is begin -- behavior -- no process needed with concurrent statements result <= in1 when ctl1='1' else in2 when ctl2='1' else in3 when ctl3='1' else in4 when ctl4='1' else in5 when ctl5='1' else in0 after 10 ps; end architecture behavior; -- of mux_32_6

Note that bshift.vhdl contains two different architectures for the same entity. A behavioral architecture using sequential programming and a circuits architecture using digital logic components. bshift.vhdl An 8-bit version of shift right logical, using single bit signals, three bit shift count, is:

There are many ways to build an ALU. Often the choice is based on mask making and requires a repeated pattern. The "bit slice" method uses the same structure for every bit. One example is:

Note that 'Operation' is two bits, 0 for logical and, 1 for logical or, 2 for add or subtract, and 3 for an operation called set used for comparison. 'Binvert' and 'CarryIn' would be set to '1' for subtract. 'Binvert' and 'a' set to '0' would be complement. The overflow detection is in every stage yet only used in the last stage. The bit slices are wired together to form a simple ALU:

The 'set' operation would give non zero if 'a' < 'b' and zero otherwise. A possible condition status or register value for a "beq" instruction. If overflow was to be detected, the circuit below uses the sign bit of the A and B inputs and the sign bit of the result to detect overflow on twos complement addition.

The ALU fits into the machine architecture as shown below:

32-bit and 64-bit ALU architectures are available. A 64-bit architecture, by definition, has 64-bit integer registers. Many computers have had 64-bit IEEE floating point for many years. The 64-bit machines have been around for a while as the Alpha and PowerPC yet have become popular for the desktop with the Intel and AMD 64-bit machines.

Software has been dragging well behind computer architecture. The chaos started in 1979 with the following "choices."

The full whitepaper www.unix.org/whitepapers/64bit.html My desire is to have the compiler, linker and operating system be ILP64. All my code would work fine. I make no assumptions about word length. I use sizeof(int) sizeof(size_t) etc. when absolutely needed. On my 8GB computer I use a single array of over 4GB thus the subscripts must be 64-bit. The only option, I know of, for gcc is -m64 and that just gives LP64. Yuk! I have to change my source code and use "long" everywhere in place of "int". If you get the idea that I am angry with the compiler vendors, you are correct! Here are sample programs and output to test for 64-bit capability in gcc: Get sizeof on types and variables big.c output from gcc -m64 big.c big.out malloc more than 4GB big_malloc.c output from big_malloc_mac.out Newer Operating Systems and compilers (note 'sizeof' changed to long) Get sizeof on types and variables big12.c output from gcc big12.c big12.out The early 64-bit computers were: DEC Alpha DEC Alpha IBM PowerPC Some history of 64-bit computers:

Java for 64-bit, source compatible Then to VHDL resource, FPGA. get free GHDL

Standard decimal and binary multiplication could look like:

234 01010 multiplicand

x 121 x 00011 x multiplier

------ -------- --------------

234 01010 product

468 01010

234 00000

------ 00000

028314 00000

| ----------

| 0000011110 5-bits times 5-bits gives a 10-bit product,

| in a computer leading zeros are kept.

|

3-digits times 3-digits gives a 6-digit product, yet in

decimal, we do not write the leading zeros.

We have covered how computer adders work and how they are built.

Exactly two numbers are added to produce one sum, thus the binary

multiply above needs to be rewritten as:

01010

x 00011

----------

001010 -- multiplier LSB anded with multiplicand

+ 01010 -- multiplier bit-1 anded with multiplicand

-----

0011110 -- partial sum, bottom bit passed down

+ 00000 -- multiplier bit-2 anded with multiplicand

-----

00011110 -- partial sum, bottom two bits passed down

+ 00000 -- multiplier bit-3 anded with multiplicand

-----

000011110 -- partial sum, bottom three bits passed down

+ 00000 -- multiplier bit-4 anded with multiplicand

-----

0000011110 -- final product, four bits passed down

Thus, by this simple method, with a 5-bit unsigned multiplier, there

are four additions needed. A circuit that uses one adder and performs

serial multiplication follows directly. This design chose to use a

multiplexor rather than an 'and' operation to select the multiplicand

or zero.

How a register works

The VHDL code that represents the above circuit is:

mula <= hi;

mulb <= md when (lo(0)='1') else x"00000000" after 50 ps;

adder:entity WORK.add32 port map(mula, mulb, '0', muls, cout);

hi <= cout & muls(31 downto 1) when mulclk'event and mulclk='1';

lo <= muls(0) & lo(31 downto 1) when mulclk'event and mulclk='1';

The signal "mulclk" runs for the number of clock cycles that

their are bits in the multiplier, 32 for this example. For

simplicity of design, zero is added in the first step. Note that

"cout" is used when loading the "hi" register. The shifting is

accomplished by wire routing.

The VHDL test source code is mul_ser.vhdl

The output from the test is mul_ser.out

P.S. The above was an introduction, never use that method or circuit.

A serial multiplier can be built using only half as many clock cycles.

We use the technique developed by Mr. Booth. Two multiplier bits are

used each clock cycle. Only one add operation is needed each cycle,

yet the augend has several possible values as shown by the

multiplexor in the schematic and the table in the VHDL source code.

The VHDL code that represents the above circuit is:

mula <= hi;

mulb <= md when (lo(0)='1') else x"00000000" after 50 ps;

adder:entity WORK.add32 port map(mula, mulb, '0', muls, cout);

hi <= cout & muls(31 downto 1) when mulclk'event and mulclk='1';

lo <= muls(0) & lo(31 downto 1) when mulclk'event and mulclk='1';

The signal "mulclk" runs for the number of clock cycles that

their are bits in the multiplier, 32 for this example. For

simplicity of design, zero is added in the first step. Note that

"cout" is used when loading the "hi" register. The shifting is

accomplished by wire routing.

The VHDL test source code is mul_ser.vhdl

The output from the test is mul_ser.out

P.S. The above was an introduction, never use that method or circuit.

A serial multiplier can be built using only half as many clock cycles.

We use the technique developed by Mr. Booth. Two multiplier bits are

used each clock cycle. Only one add operation is needed each cycle,

yet the augend has several possible values as shown by the

multiplexor in the schematic and the table in the VHDL source code.

The VHDL test source code is bmul_ser.vhdl

The output from the test is bmul_ser.out

Next, parallel multiplication with a carry-save design.

Note there is no carry propagation except in the last stage.

The VHDL test source code is bmul_ser.vhdl

The output from the test is bmul_ser.out

Next, parallel multiplication with a carry-save design.

Note there is no carry propagation except in the last stage.

Some fancy VHDL using double subscripting and "generate".

pmul4.vhdl

A 32 bit design using an add32csa entity is:

Some fancy VHDL using double subscripting and "generate".

pmul4.vhdl

A 32 bit design using an add32csa entity is:

The VHDL entity for the carry-save multiplier is mul32c.vhdl

The VHDL test source code is mul32c_test.vhdl

The output from the test is mul32c_test.out

We can now combine the Booth multiplication technique to reduce the

number of stages in half, still using the parallel multiply.

The VHDL was written without a diagram, thus no schematic exists, yet.

The VHDL entity for the carry-save multiplier is bmul32.vhdl

The VHDL test source code is bmul32_test.vhdl

The output from the test is bmul32_test.out

Homework 5 is assigned

The VHDL entity for the carry-save multiplier is mul32c.vhdl

The VHDL test source code is mul32c_test.vhdl

The output from the test is mul32c_test.out

We can now combine the Booth multiplication technique to reduce the

number of stages in half, still using the parallel multiply.

The VHDL was written without a diagram, thus no schematic exists, yet.

The VHDL entity for the carry-save multiplier is bmul32.vhdl

The VHDL test source code is bmul32_test.vhdl

The output from the test is bmul32_test.out

Homework 5 is assigned

Hopefully you understand decimal division:

49 quotient

______

divisor 47 / 2345 dividend

188

---

465

423

---

42 remainder

And check division by multiplication:

49 multiplicand is the quotient above

x 47 multiplier is the divisor above

----

2303

+ 42 add the remainder above

----

2345 final sum is the dividend above

A smaller case that is used below in binary:

12 quotient

___

divisor 7 / 85 dividend

7

--

15

14

--

1 remainder

Binary divide, conventional method and non restoring method

These examples are shown in a form that can be directly

implemented in a computer architecture.

The divisor, quotient and remainder are each one word.

The dividend is two words.

The equations dividend = quotient * divisor + remainder

and |remainder| < |divisor|

must be satisfied.

When a choice is possible, choose the sign of the remainder to

be the same as the sign of the dividend.

Save the sign bits of the dividend and divisor, if necessary,

negate the dividend and divisor to make them positive.

Fix up the sign bits of the quotient and dividend after dividing.

Example: dividend = 85 , divisor = 7

Decimal divide 85 / 7 = quotient 12 , remainder 1

Restoring (conventional) binary divide, twos complement 4-bit numbers

1 1 0 0 quotient

________________

0 1 1 1 / 0 1 0 1 0 1 0 1

-0 1 1 1 may subtract by adding twos complement

_______ - 0 1 1 1 is 1 0 0 1

5 - 7 = -2 1 1 1 0

negative, add 7 +0 1 1 1

restored _______

next bit 1 0 1 0

-0 1 1 1

_______

10 - 7 = 3 0 1 1 1

quotient=1, next bit -0 1 1 1

_______

7 - 7 = 0 0 0 0 0 0

quotient=1, next bit -0 1 1 1

_______

0 - 7 = -7 1 0 0 1

negative, add 7 +0 1 1 1

quotient=0 _______

restored, next bit 0 0 0 1

-0 1 1 1

_______

1 - 7 = -6 1 0 1 0

negative, add 7 +0 1 1 1

quotient=0 _______

restored, finished 0 0 0 1 final remainder

(8 cycles using adder)

Clock cycles can be saved by not performing the "restored" operation.

non-restoring binary divide, twos complement 4-bit numbers

note: 7 = 0 1 1 1 -7 = 1 0 0 1

1 1 0 0 quotient

________________

0 1 1 1 / 0 1 0 1 0 1 0 1

pre shift +1 0 0 1 adding twos complement of divisor

_______

10 - 7 = 3 0 0 1 1 1

quotient=1 +1 0 0 1

next bit subtract _______

7 - 7 = 0 0 0 0 0 0

quotient=1 +1 0 0 1

next bit subtract _______

0 - 7 = -7 1 0 0 1 1

quotient=0 +0 1 1 1 adding divisor

next bit add _______

2 + 7 = 9 = -7 1 0 1 0

quotient=0 +0 1 1 1

correction add _______

final remainder 0 0 0 1 remainder

(5 cycles using adder)

Correcting signs:

dividend divisor | quotient remainder

------------------+--------------------

+ + | + + +85 / +7 = +12 R +1

+ - | - + +85 / -7 = -12 R +1

- + | - - -85 / +7 = -12 R -1

- - | + - -85 / -7 = +12 R -1

Humans, not the computer, keeps track of the binary point.

Integers Fractions (fixed point)

qqqq. .qqqq q.qqq

__________ __________ __________

ssss. / dddddddd. .ssss / .dddddddd ss.ss / ddd.ddddd

_____ _____ _______

rrrr. .0000rrrr .0rrrr

qqqq. .qqqq q.qqq

* ssss. * .ssss * ss.ss

_________ ________ _________

tttttttt. .tttttttt ttt.ttttt

+ rrrr. + .0000rrrr + .0rrrr

_________ _________ _________

dddddddd. .dddddddd ddd.ddddd

for multiply, counting positions from the right, the binary point

of the product is at the sum of the positions of the multiplicand

and multiplier.

for divide, counting positions from the right, the binary point

of the quotient is at the difference of the positions of the

dividend and divisor. The binary point of the remainder is in

the same position as the binary point of the dividend.

Overflow occurs when the top half of dividend is greater than or

equal to the divisor, thus division by zero is always overflow.

No schematic or VHDL is provided for restoring division because

it is never used in practice. The serial non restoring division is:

A possible design for a serial divide, does not include remainder correction:

diva <= hi(30 downto 0) & lo(31) after 50 ps; -- shift

divb <= not md when sub_add='1' else md after 50 ps; -- subtract or add

adder:entity WORK.add32 port map(diva, divb, sub_add, divs, cout);

quo <= not divs(31) after 50 ps; -- quotient bit

hi <= divs when divclk'event and divclk='1';

lo <= lo(30 downto 0) & quo when divclk'event and divclk='1';

sub_add <= quo when divclk'event and divclk='1';

The full VHDL code is div_ser.vhdl

with output div_ser.out

Note that the remainder is not corrected by this circuit.

The FFFFFFFA should have the divisor 00000007 added to it,

making the remainder 00000001

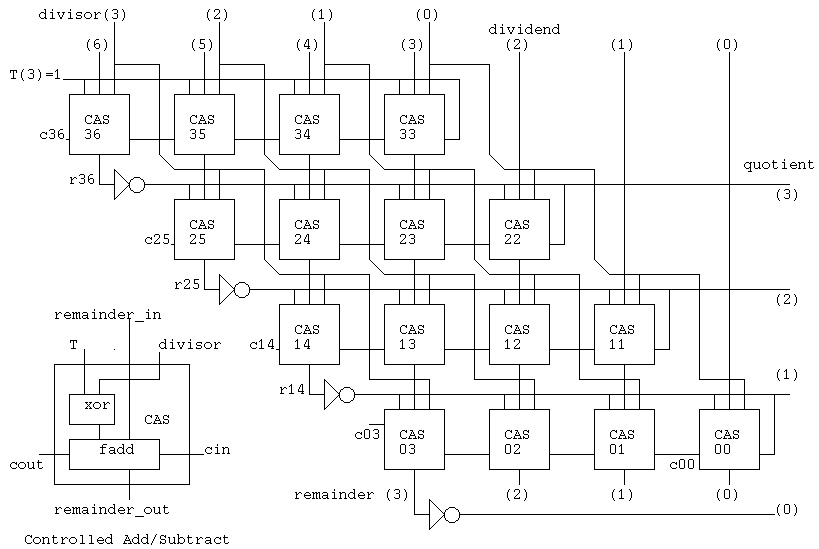

Now that you understand how binary division works and understand

how multiplication can be speeded up using parallel circuits,

we show a parallel division circuit and its simulation.

The full VHDL code is div_ser.vhdl

with output div_ser.out

Note that the remainder is not corrected by this circuit.

The FFFFFFFA should have the divisor 00000007 added to it,

making the remainder 00000001

Now that you understand how binary division works and understand

how multiplication can be speeded up using parallel circuits,

we show a parallel division circuit and its simulation.

divcas4_test.vhdl

divcas4_test.out

Note that the output includes the time.

Observe the first few lines of printout replacing 'U' undefined,

meaning not computed, with zeros or ones. Unfortunately, if VHDL

prints hexadecimal, any state except one is printed as zero.

For part1 project you are given divcas16.vhdl

This divides as 32 bit number by a 16 bit number and

produces a 16 bit quotient and 16 bit remainder.

divcas16.vhdl

It would be nice if I could have a 4-bit radix 2 or radix 4 SRT

division schematic here. Parallel circuits that perform division

may use (-2, -1, 0, 1, 2) values for intermediate signals.

Two or more bits of the quotient may be computed at each stage,

based on a table and a few bits of the divisor and partial

remainder.

SRT Divide, click on slide show .pdf

SRT Divide .pdf local

freepatentsonline.com/5272660.html

Software can be copyrighted. Just doing a physical embodiment makes

you the owner of the copyright. Add Copyright year name to the

document or computer file. If you want your copyright to stand up

in a court of law, you need to file the copyright. Get the latest

information, at one time there was a $40.00 filing fee and the

copyright was good for 28 years, renewable for 67 more years, for

a total of 95 years.

There is a "fair use" clause that allows personal use of parts

of a copyrighted document.

Software and hardware and processes may be patented. A utility

patent is good for 20 years, a design patent is good for 14 years.

The cost of completing the process of getting a patent is variable.

20 years ago the average cost was $5,000.00 and today the average

cost is about $15,000.00. There are companies that can help you,

do-it-yourself, with advertised cost starting from about $1,500.00.

(There may be additional maintenance fees at 3 1/2 years etc.)

((It may take a year or more to get a patent.))

One version of the process to get a patent is:

divcas4_test.vhdl

divcas4_test.out

Note that the output includes the time.

Observe the first few lines of printout replacing 'U' undefined,

meaning not computed, with zeros or ones. Unfortunately, if VHDL

prints hexadecimal, any state except one is printed as zero.

For part1 project you are given divcas16.vhdl

This divides as 32 bit number by a 16 bit number and

produces a 16 bit quotient and 16 bit remainder.

divcas16.vhdl

It would be nice if I could have a 4-bit radix 2 or radix 4 SRT

division schematic here. Parallel circuits that perform division

may use (-2, -1, 0, 1, 2) values for intermediate signals.

Two or more bits of the quotient may be computed at each stage,

based on a table and a few bits of the divisor and partial

remainder.

SRT Divide, click on slide show .pdf

SRT Divide .pdf local

freepatentsonline.com/5272660.html

Software can be copyrighted. Just doing a physical embodiment makes

you the owner of the copyright. Add Copyright year name to the

document or computer file. If you want your copyright to stand up

in a court of law, you need to file the copyright. Get the latest