CS437 Selected Lecture Notes

This is one big WEB page, used for printing

These are not intended to be complete lecture notes.

Complicated figures or tables or formulas are included here

in case they were not clear or not copied correctly in class.

Computer commands, directory names and file names are included.

Specific help may be included here yet not presented in class.

Source code may be included in line or by a link.

Lecture numbers correspond to the syllabus numbering.

Lecture 1, Introduction

Getting Started, solving setup problems

Lecture 2, Mouse Handling

Lecture 3, Color

Lecture 4, Multiple windows and motion

Lecture 5, Menu Design and Implementation

Lecture 6, Getting user input

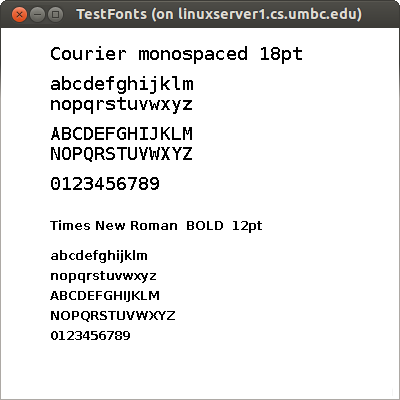

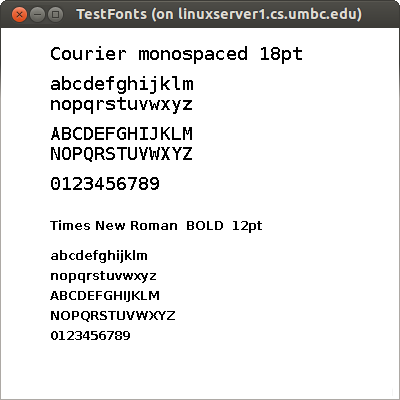

Lecture 7, Text sizes and fonts, international

Lecture 8, Writing and restoring the user's work

Lecture 9a, Painters Algorithm, Display list

Lecture 9, Review 1

Lecture 10, Quiz 1

Lecture 11, Pan, Zoom, Scroll Bars

Lecture 12, Timing

Lecture 13, Motion and movement, scenes

Lecture 14, Curves and surfaces, targets

Lecture 15, Parallelism in your GUI

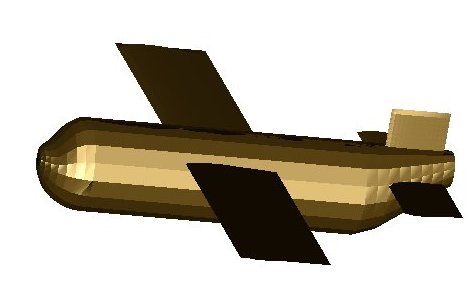

Lecture 16, 3D with motion

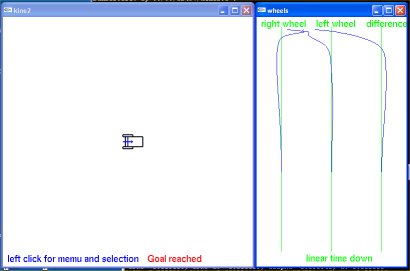

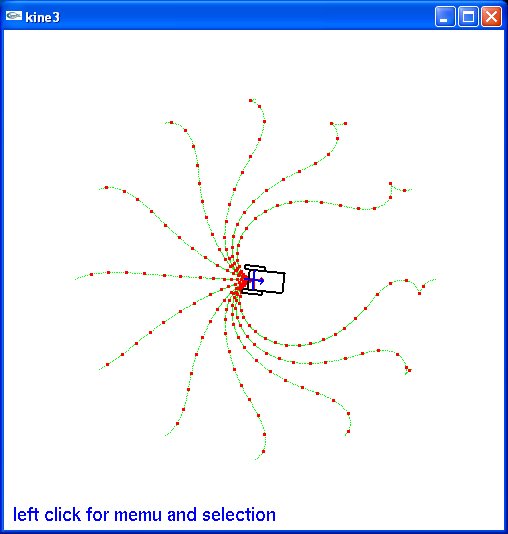

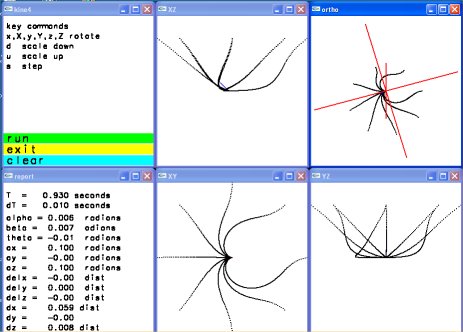

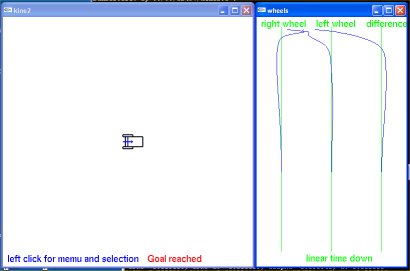

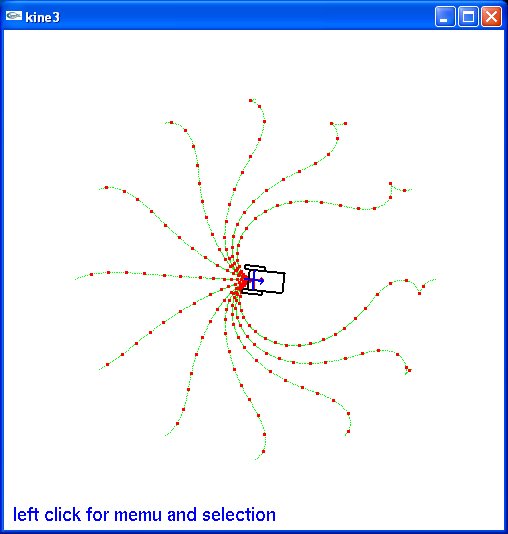

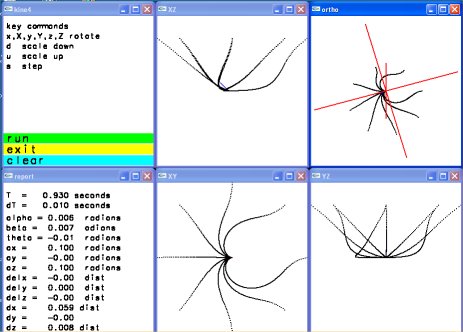

Lecture 17, Kinematics and timing

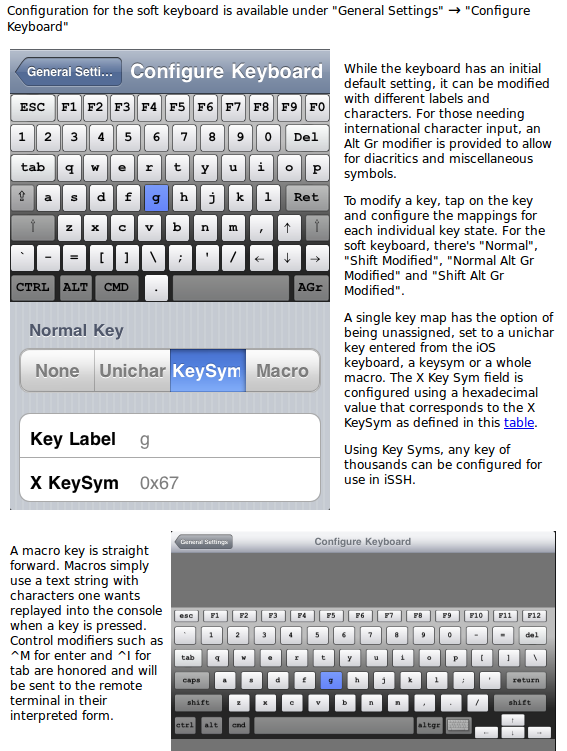

Lecture 18, User Interface for Platform

Lecture 18, Rendering Survey

Lecture 19a, Capturing Screen

Lecture 19, Review 2

Lecture 20, Quiz 2

Lecture 21, Perspective Viewing

Lecture 22, Effective efficient lighting

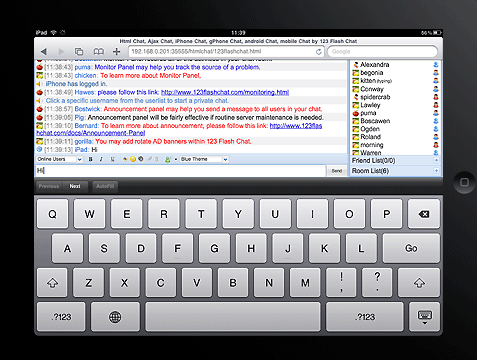

Lecture 23, HTML5, javascript, CSS

Lecture 24, Windowing systems

Lecture 25, 3D with glasses and without

Lecture 26, Texture mapping in 3D, zoom glasses

Lecture 27, Color Scale

Lecture 28, Output Jpeg, PostScript

Lecture 29, Project Demonstrations and Review

Lecture 30, Final Exam

Lecture 31, More graphics math

Other Links

We will cover information on the user interface.

The user interface includes visual and sound output.

There may be physical output when using a game controller.

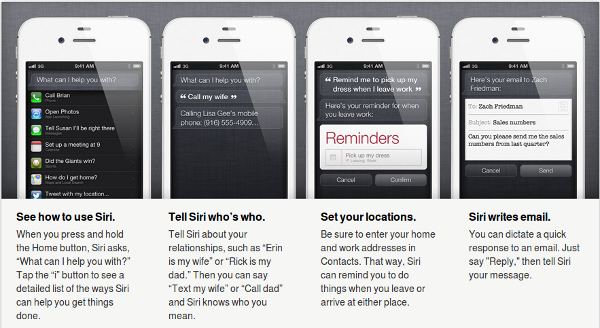

The user interface includes keyboard, mouse, touch, multi-touch

and game controller input. Voice recognition may be available.

The desired output response time, measured from the time of an input,

varies widely with application.

As various applications are covered, the differences in

style, conventions and standards will be presented.

Application of User Interface

1) desktop, laptop, tablet computers

both application and web interface

Windows, Mac OSX, Unix, Linux differences

(Some demonstrations)

Notice how the user interfaces have changed with time.

Many Mac conventions have been adopted by Windows

and some Linux distributions.

Touch screens are becoming available and the

user interfaces are changing, some adopting

user interface similar to smart phones.

2) game consoles

Wii, PlayStation 3, Xbox 360 (PlayStation 4, Xbox One)

game controllers (some samples shown)

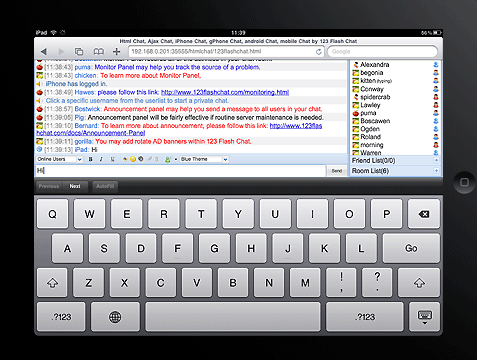

3) cell phones

touch methods, size, speed, resolution

One finger moving in place of scroll bar.

Two-finger rotation in place of mouse motion for rotation.

Take into account "fat fingering" in addition to obvious

finger size and resolution.

4) Automotive, aircraft "glass cockpit"

replacing traditional instruments with a display

I am doing contract software in this area.

My part was the primary flight display that gets input

from the inertial measurement unit and Global Positioning

System, GPS. (Sample demo later when we cover motion)

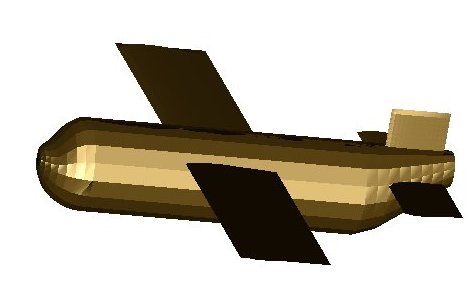

5) RPV, remotely piloted vehicle

flying over Afghanistan from Colorado

This requires the aircraft "glass cockpit" plus

mapping and threat displays. Reaction time to

get aircraft information back to the displays

becomes a critical factor.

6) Internationalization

marketing around the world

Basically, do not put any text into graphics.

All text is pulled from a file or files so that translations

to other languages can be changed in the files rather

than changing many places in the application.

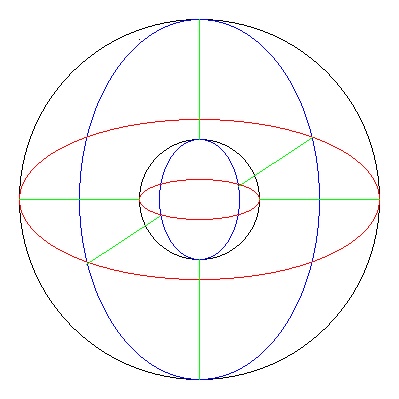

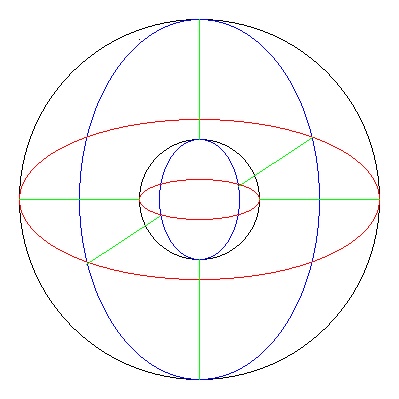

7) real 3D displays

cameras, games, TV, graphics

Real 3D is here both with and without glasses.

Processing and developing real 3D applications

is covered in several lectures.
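Item 6 above (internationalization) says to keep all user-visible text in files rather than in the graphics code. A minimal Python 3 sketch of that idea, assuming one JSON file per language; the file names `messages_<lang>.json`, the class `Messages` and the keys used are hypothetical, chosen only for illustration. A missing translation falls back to the default language and then to the key itself, so a gap shows up on screen instead of crashing the GUI:

```python
import json
import os
import tempfile


def load_catalog(directory, lang):
    """Read messages_<lang>.json from directory; an absent file gives {}."""
    path = os.path.join(directory, f"messages_{lang}.json")
    if not os.path.exists(path):
        return {}
    with open(path, encoding="utf-8") as f:
        return json.load(f)


class Messages:
    """Look up user-visible text by key instead of hard-coding strings."""

    def __init__(self, directory, lang, default_lang="en"):
        self.primary = load_catalog(directory, lang)
        self.fallback = load_catalog(directory, default_lang)

    def text(self, key):
        # Requested language first, then the default language, then the key.
        return self.primary.get(key, self.fallback.get(key, key))


def demo():
    """Write two tiny catalogs to a temporary directory and look up three keys."""
    catalogs = {
        "en": {"quit": "Quit", "open": "Open"},
        "fr": {"quit": "Quitter"},
    }
    with tempfile.TemporaryDirectory() as d:
        for lang, table in catalogs.items():
            path = os.path.join(d, f"messages_{lang}.json")
            with open(path, "w", encoding="utf-8") as f:
                json.dump(table, f, ensure_ascii=False)
        m = Messages(d, "fr")
        return m.text("quit"), m.text("open"), m.text("help")


if __name__ == "__main__":
    print(demo())
```

Adding a language then means shipping one more `messages_<lang>.json` file; the menu, button and dialog code stays untouched.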

This course will provide the student both

knowledge and a basic Graphical User Interface, GUI, program

that the student has written and can be expanded into

various applications the student wants to develop.

This is very broad and includes cell phone apps,

desktop, laptop, tablet applications, games for

either computer or game console, etc.

Building GUI programs is non-trivial yet rewarding.

The student needs to understand the operating system,

the windowing system and "tool kits."

There are many potential employment opportunities for

graduates with computer graphics skills. The film

industry and advertising industry have many types of

positions available. The gaming industry, with some

firms local to the Baltimore area, has various

positions available. Check out Firaxis, Breakaway,

Day 1 Studios, Big Huge Games and others.

Automotive and aircraft companies are using

GUIs increasingly to add new capability and

replace old instrument displays.

Course motto: If it works, use it.

If not, find another way.

You will be dealing with windowing systems and graphical

libraries that are much larger and more complex than

operating systems. I guarantee they will have bugs.

Your grade depends on finding a way around any bugs.

Your program must work in spite of system/library bugs.

The basic prerequisite for this course is to be able to

write working code in some reasonable programming language.

You will probably be writing 1,000 to 10,000 lines of code

in this course. Do not panic. A lot of code is repetitive.

You are expected to know the software development cycle:

Edit <-------+
Compile      |
Run          |
Curse -------+

As an acknowledged expert, Edsger Dijkstra, has stated:

"Top down design and programming is right every time

except the first time." For your rapid learning you

do not want to use the "waterfall model" or even

Barry Boehm's "spiral model", but rather use

"rapid prototyping".

Do not worry about the details, for a while, yet look over

the organization and structure of the same GUI application

written for X Windows Motif, OpenGL, Java and Python.

Remember, PuTTY by itself is useless for graphics. Your desktop or

laptop should have Linux as dual boot in order to use

ssh -Y username@linux.gl.umbc.edu

You will need to make a choice of "platform" for doing

the programming for this course. My lectures will cover:

Microsoft Windows - Java, JavaScript (same code everywhere)

- Python, HTML5 etc (probably same code everywhere)

- OpenGL in C, C++ (same code for Linux and Mac OSX)

Linux, Unix - Java, JavaScript (same code everywhere)

- Python, HTML5 etc (probably same code everywhere)

- X Windows Motif (same code for Mac OSX)

- OpenGL in C, C++ (same code for MS Windows and Mac OSX)

Mac OSX - Java, JavaScript (same code everywhere)

- Python, HTML5 etc (probably same code everywhere)

- X Windows Motif (same code for Linux, Unix)

- Xcode, Cocoa (Mac specific)

- OpenGL in C, C++ (same code for MS Windows and Linux)

etc - The adventurous student may use raw wx or Qt or Tk,

PyQt4, or other language and graphics tool kit.

Microsoft's C# and game software may be used.

HTML5 with JavaScript may be used.

3D graphics may be used.

Cell Phone - You may choose to do an app for your project

Game Console - You may choose to do a game for your project

On Microsoft Windows you need compilers and possibly

some graphics tool kit, or the Java SDK, or Python with graphics,

or an editor and a browser for HTML5. To do 3D, use either

Microsoft Visual Studio or Cygwin.

On Linux, Unix you may need Motif (called Lesstif or OpenMotif) installed.

UMBC linux.gl.umbc.edu has all software installed. Java, Python,

editors and compilers and browsers for JavaScript and 3D.

The Firefox browser has HTML5 and JavaScript on all OS.

On Mac the underlying operating system is Unix. Thus you can

have Java, Python and compilers, installing any of them

that are not already present. You may also use the Mac IDE.

Java has two execution models. "Frame" makes standard applications

that run in a standard window on all platforms. "App" or applet is

much more restrictive and must run in a WEB browser or appletviewer.

Then you have a choice of using just AWT or additionally Swing

or Swing2 and optionally Java3D. Explore "java.sun.com".

For "etc", it is the student's responsibility to set up the

environment and do the homework and project. Just running a

demo project is not acceptable. You must make significant

additions and changes.

HTML5, JavaScript, 3D and more are available in latest

web browsers. Be sure your system is up to date.

GUI Human factors: Make sure it is obvious to the user of your

application how to quit, exit, kill or stop.

Just a quick look at some sample code.

See which will run on your development system

w1.c basic X windows

w1.jpg - screen

w1gl.c - w1.c in OpenGL

w1gl.jpg - screen

W1frame.java - w1.c in Java

W1frame_java.png - screen

W1frame.jpg - screen

W1app.java - W1frame as an applet

hw1s.py - contributed Python2 wx Windows

w1tk.py - simple Python2 tk on GL

w1qt.py - simple Python2 pyqt on GL

w1wx.py - simple Python Windows wx

w1tk.py3 - simple Python3

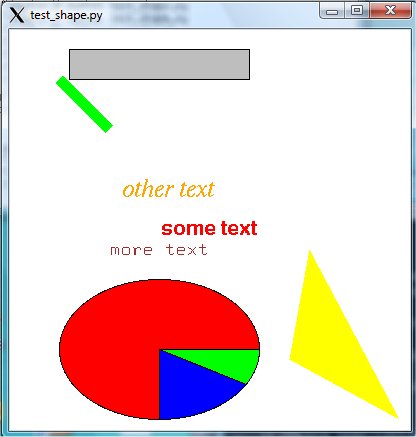

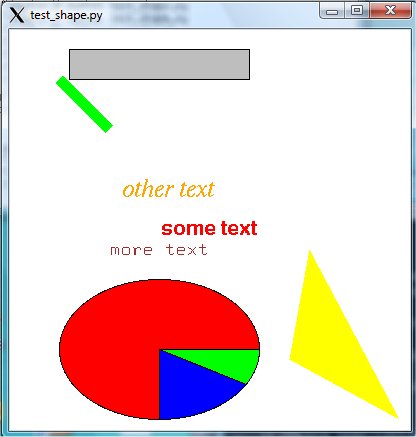

shape_def.py3 source code

shape_def3d.py3 source code


shape_def3d_py3.out vertex,faces

w1.html - HTML5 using javascript

Many w1, w2, w3, w4 files with various languages

To download a file you want, named filename, on linux.gl.umbc.edu use

cp /afs/umbc.edu/users/s/q/squire/pub/download/filename .

or, for .html files,

cp /afs/umbc.edu/users/s/q/squire/pub/www/filename .

app1qt4.py - contributed Python Qt

hw5qt4s.py - contributed Python qt clock

hw2wxs.py - contributed Python wx

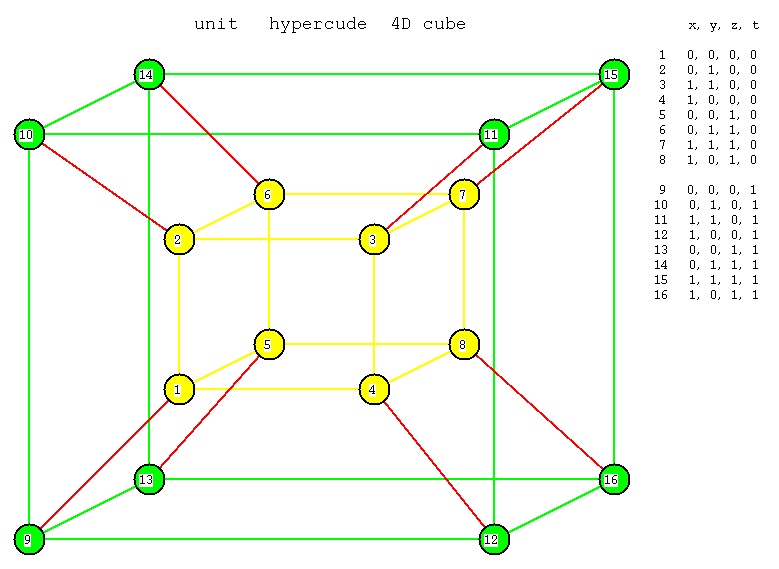

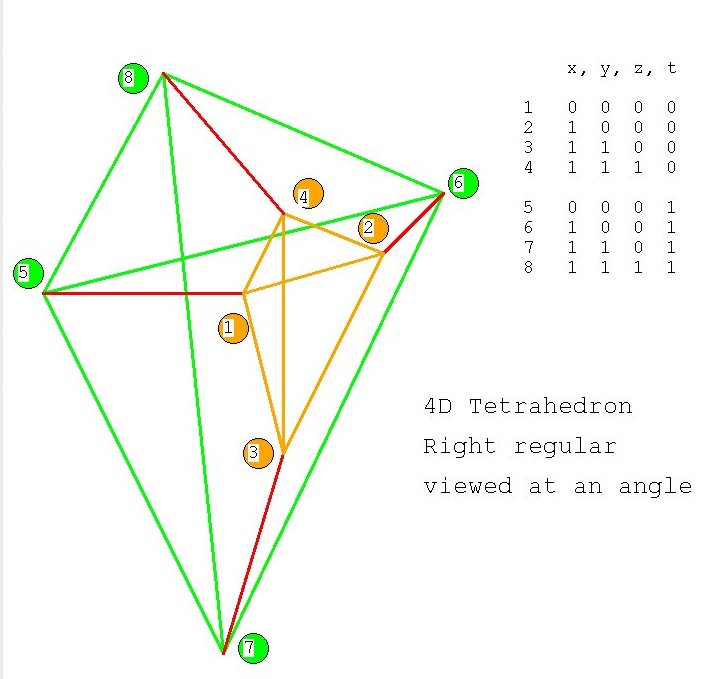

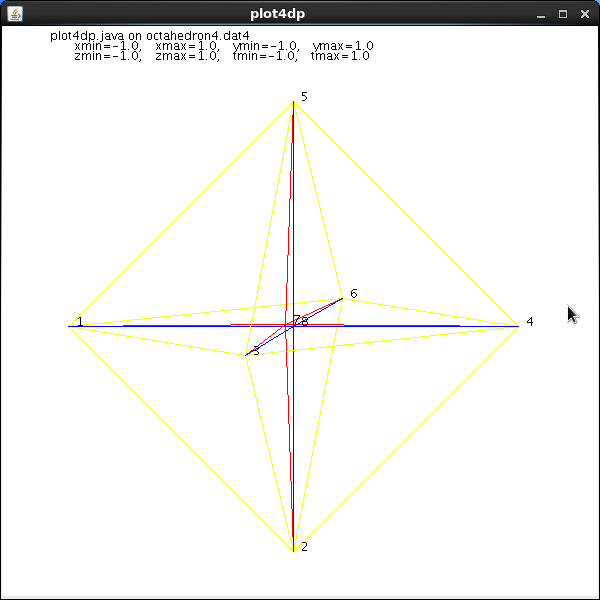

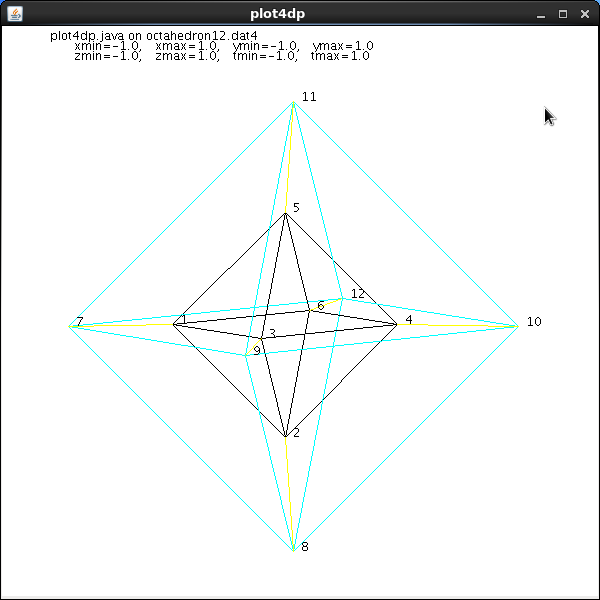

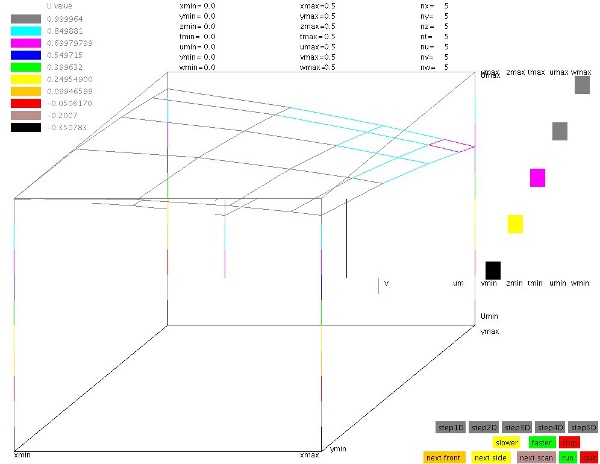

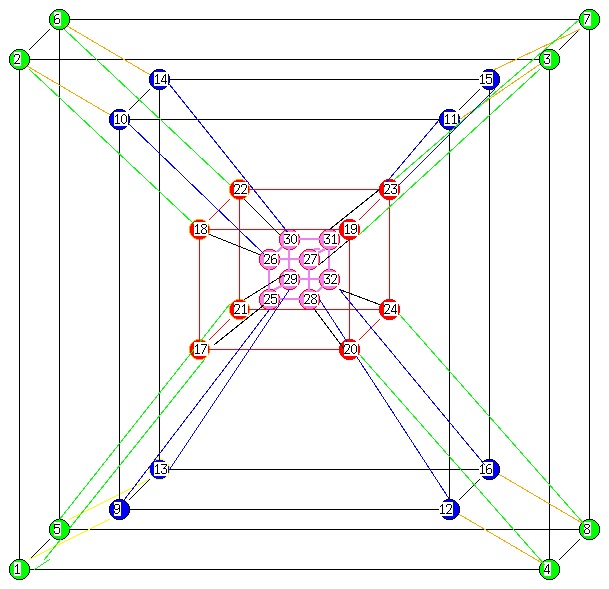

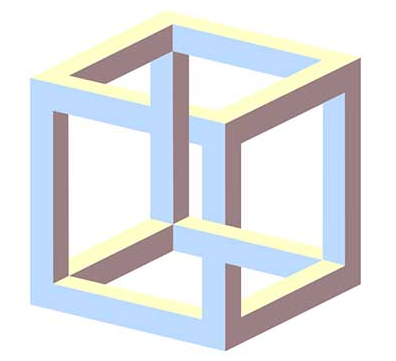

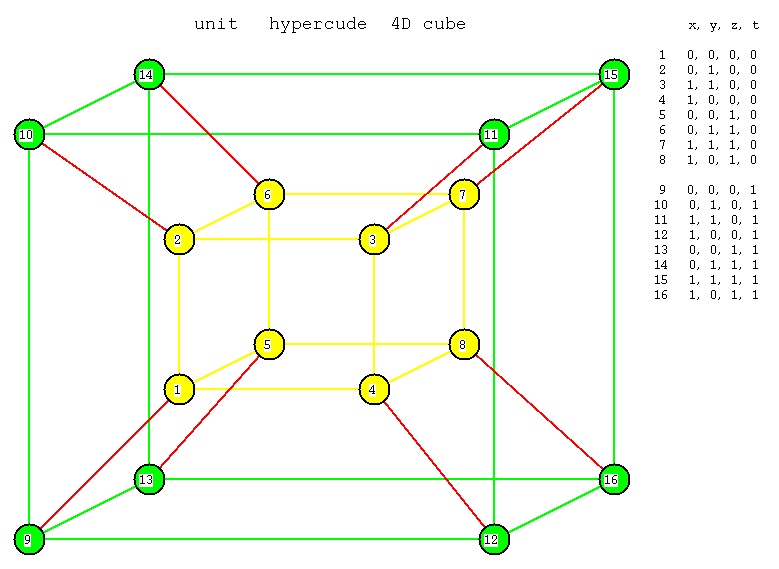

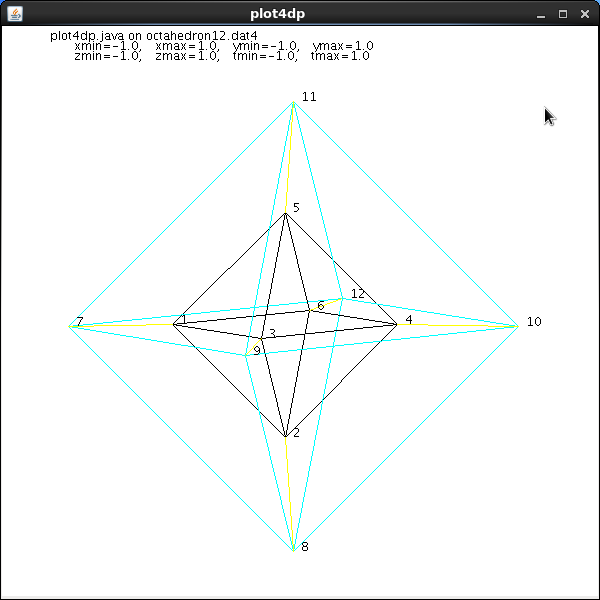

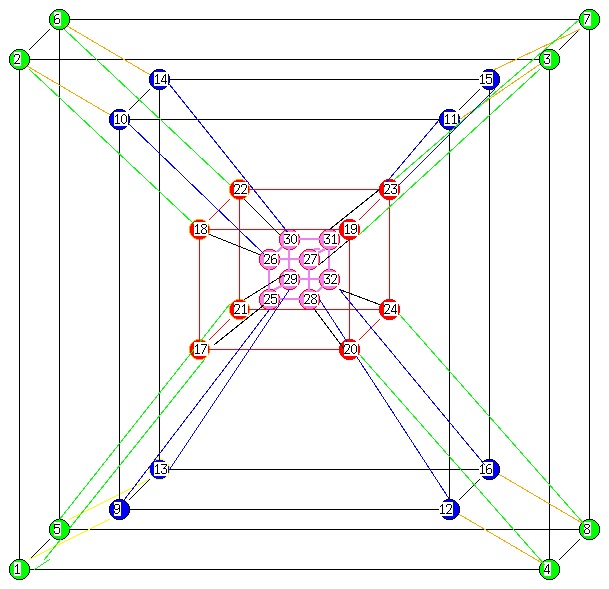

3D HyprCube.java

run 3D HyprCube.html

http://www.cs.umbc.edu/~squire/myapplets/HyprCube.html

Note that:

w1.c, the basic X Windows GUI application can be compiled

and executed on all Unix based operating systems,

including MacOS X

w1gl.c, the OpenGL GUI application can be compiled

and executed on almost all operating systems

that provide windowing (All forms of Unix,

MacOS and Microsoft Windows, etc.)

W1frame.java, the Java GUI application can be compiled

and run on any system that has Java

J2SE 1.6, JDK 6 or later available.

W1app.java, the Java GUI application can be compiled

on any system that has Java J2SE 1.6 or

later available. Then run in almost any

WEB browser. But, the user may not have

Java applets enabled. There are also some

severe restrictions on applets. Also, JavaScript

and Flash may not work well for this course.

Other demonstrations of sample applications may include:

split_cube - visualization, color, movement, inside

f to slow, O to close, c no color, C color helps visualize, R/L click

Actually running split_cube6 x for demonstration

teapots - lighting, move light with arrow keys, beware background

planets - lighting and glowing sun

sky_fly - terrain

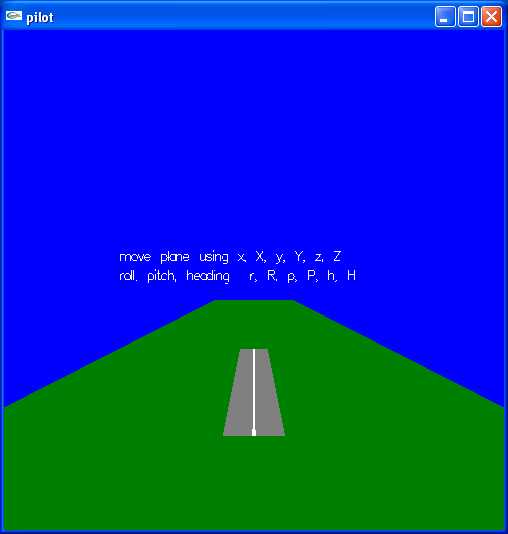

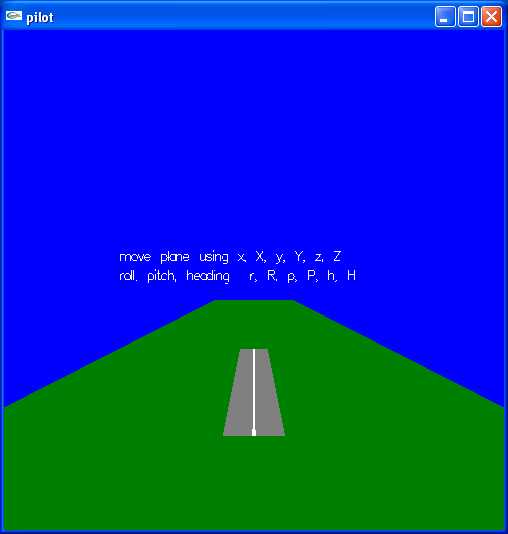

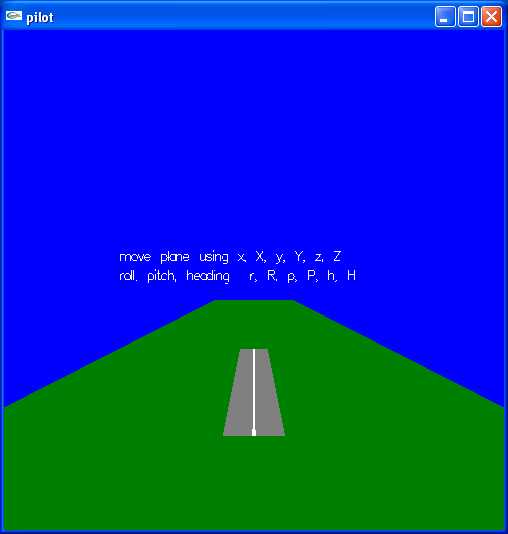

pilot - do your own flight simulator, y,z underground?

springgl - education, teach something for project

spring2gl - build on previous applications

alpha_fade - scene transitions using fading

earth - texture map pictures onto objects

gears4 - modeling and simulation

tenseg2gl - modeling user controls viewing

light_dat - skull.dat , more modeling

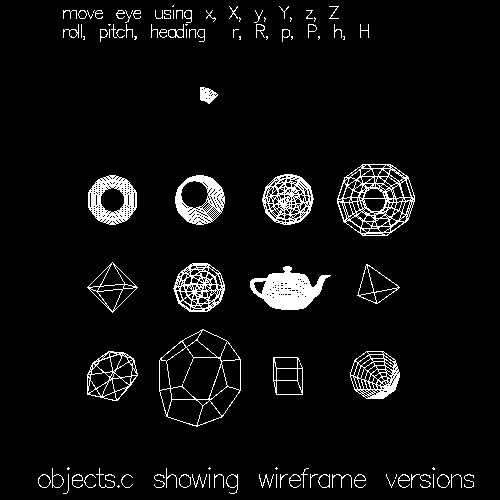

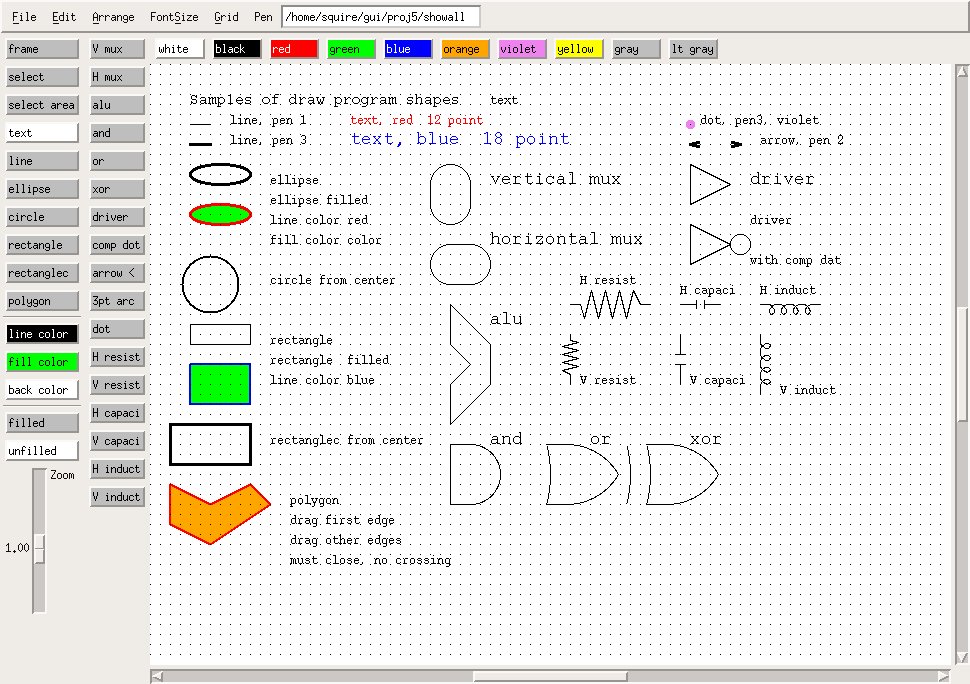

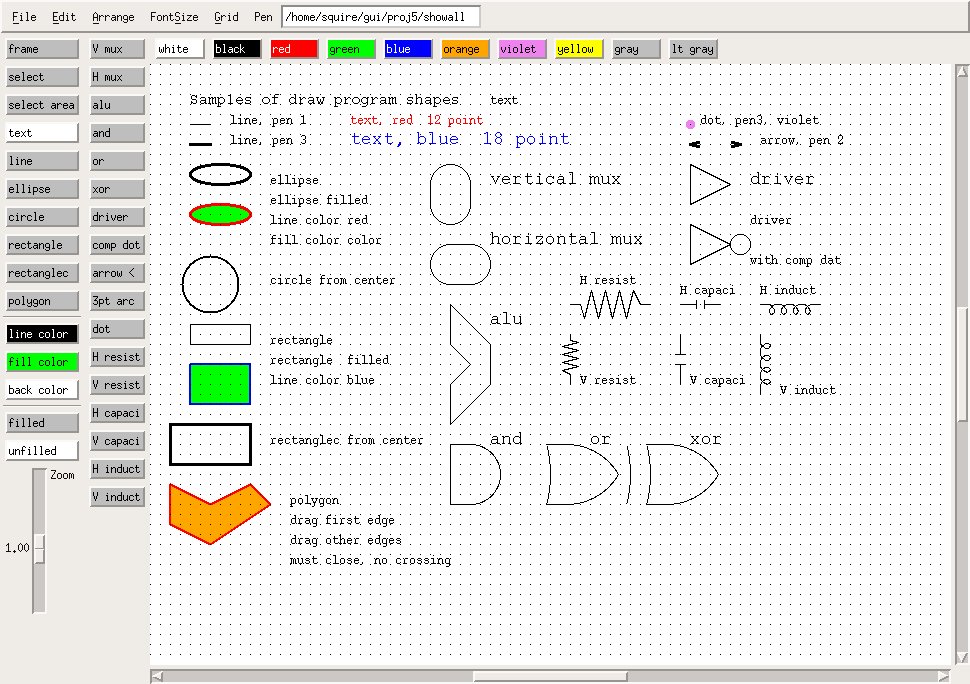

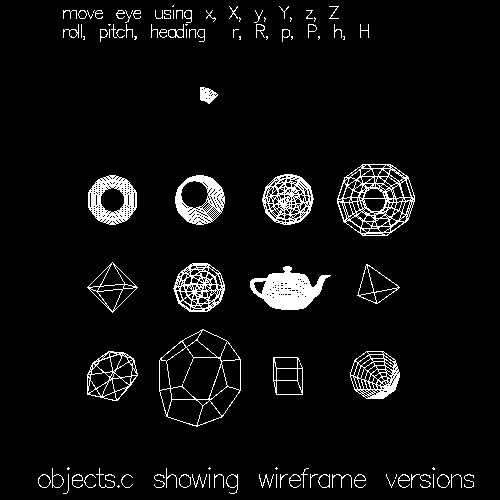

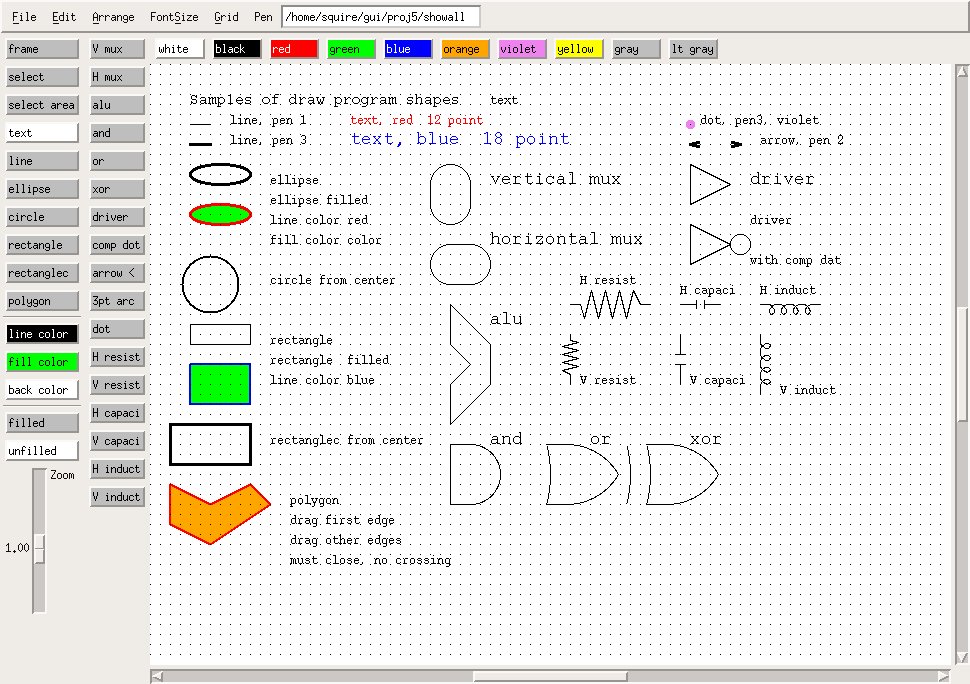

draw - default object oriented graphics (digital logic and circuit symbols added)

pairs2 - card game

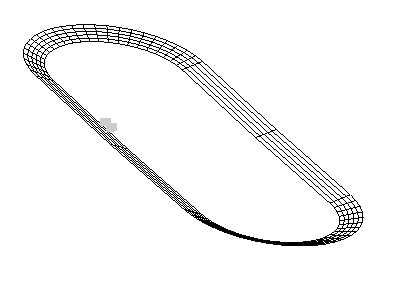

hull_draw - modeling boat hull

mover - independent window control

fractal.c - create scenes (art vs composition)

fractalgl.c - create scenes (art vs composition)

Fractal.java - create scenes (art vs composition)

Now, you need to set up your system for GUI programming.

linux.gl.umbc.edu has everything for Linux X Windows,

OpenGL and java. You may have to download software or

set up links or change directory names on your Linux

or Unix system.

Microsoft Windows needs to have Microsoft Visual Studio

or Cygwin or possibly some free compilers. There are many

versions of Microsoft Visual Studio and thus they are

not covered in this course. The essential component is

"cl.exe" the C and C++ compiler that can be used from

a standard command prompt. If you use Visual Studio

be sure you turn off preference "precompiled header files".

Mac OSX, use either the Mac IDE or download the X environment.

More information is in getting started

That said, here are the Linux/Unix/Mac "Makefile" and the

Microsoft Windows "make.bat" files that compile and execute

the source code shown above.

Makefile1.linux

Makefile_mac_w1

make1.bat make1.bat.txt

In my personal directory, I have some Makefiles and

some make.bat files that include all commands to make

most programs in that directory.

A start of my Makefile and make.bat is shown above.

An alternative to make.bat is to use nmake on Microsoft Windows.

(This is an optional exercise for the student.)

Or, use an IDE such as Visual Studio, Eclipse, etc. etc.

Now, a quick look forward to your project.

Start trying various OS, languages, and toolkits.

Do homework 1 fall

If you have been on WoW (World of Warcraft), played Angry Birds, or visited

other virtual worlds, you might note how they handle the human interface.

opensimulator.org is a

virtual environment builder that may be of interest.

This course is interested in the user interface, both to

develop applications and to use applications.

Human factors, intuitiveness, speed of learning, levels of

expertise are of interest.

Add music or voice to make your interaction more interesting (uses flash):

words and music

copy any of my files that may interest you, on linux.gl.umbc.edu

cp /afs/umbc.edu/users/s/q/squire/pub/download/fileyouwant .

cp /afs/umbc.edu/users/s/q/squire/pub/www/w1.html .

Homework 1 is assigned hw1


A few sample programs in Python 3

w1tk.py3 source code

w2tk.py3 source code

w3tk.py3 source code


w4tk.py3 source code

w5tk.py3 source code

rubber_box.py3 source code


colorw.py3 source code


Each student needs to choose an operating

system to which they have access.

Your version of operating system and toolkit may

be very different from examples below.

Ignore anything that does not apply to your system.

UMBC makes available computers running Microsoft Windows,

Linux, Solaris, MacOS and several others.

Students may configure and use their personal computers.

UMBC offers economical software through my.umbc.edu "business".

The "windowing" system is chosen by default from the operating

system choice. MS Windows, X windows or Macintosh.

In Unix/Linux operating systems the user has a choice of window

manager and possibly a choice of desktop. There may be a graphical

software development environment available. Students may use any

tools they have learned and like. This course cannot hope to

cover all possible development environments. Thus, tested command

line instructions are provided that will work for this course.

Do not expect help from the instructor on other development

environments; they generally have a long learning curve and are

found to be only marginally more productive than command line development.

In the chosen operating system, the student

should choose a programming language, "C", C++,

Java, Python, or another language that has an available interface to OpenGL.

You may start by using a UMBC machine and getting sample files.

From any computer on the Internet that has "ssh" available, log in with

ssh -X linux.gl.umbc.edu (older systems)

ssh -Y linux.gl.umbc.edu (up to date systems)

(then enter your UMBC username and password)

Starter files may be copied to your subdirectory on

GL using commands such as (be sure to type the last space-dot):

cp /afs/umbc.edu/users/s/q/squire/pub/download/w1.c .

cp /afs/umbc.edu/users/s/q/squire/pub/download/w1gl.c .

cp /afs/umbc.edu/users/s/q/squire/pub/download/w1tk.py3 .

cp /afs/umbc.edu/users/s/q/squire/pub/download/W1frame.java .

cp /afs/umbc.edu/users/s/q/squire/pub/download/W1app.java .

cp /afs/umbc.edu/users/s/q/squire/pub/download/W1app.html .

cp /afs/umbc.edu/users/s/q/squire/pub/download/Makefile1.linux .

*** currently on linux.gl.umbc.edu most students also need:

cp /afs/umbc.edu/users/s/q/squire/pub/download/libglut.so.3.7 .

cp /afs/umbc.edu/users/s/q/squire/pub/download/libXm.so.1 .

ln -s libglut.so.3.7 libglut.so.3

ln -s libglut.so.3.7 libglut.so

cp /afs/umbc.edu/users/s/q/squire/pub/download/Makefile_w1 .

mkdir GL

cp /afs/umbc.edu/users/s/q/squire/pub/download/glut.h GL

setenv LD_LIBRARY_PATH .    (csh/tcsh syntax; in bash use export LD_LIBRARY_PATH=.)

make -f Makefile_w1 w1gl

*** the above is needed when GLUT and Motif are not installed

type make -f Makefile1.linux

make -f Makefile1.linux java

The java program runs upon typing the second command.

Type w1 to run the basic X Windows program.

Type w1gl to run the OpenGL program.

If you get a message about a missing .so file, you also need

setenv LD_LIBRARY_PATH .

in order for the ".so" shared object files to be found.

On the old UMBC lab machine running MS Windows 2000, there was a lot more

setup required. Here are the steps I needed to be able to use OpenGL

with Glut. (do NOT type the (stuff) ) (J: may be S:)

((Or you may use WinSCP, it works great for me.))

log on (I was in J:\umbc.edu\users\s\q\squire\home,

you will be in your /afs directory)

md cs437 (a special directory for this course)

cd cs437 (be there)

md GL (needed for GLUT)

cd GL (be there)

copy J:\umbc.edu\users\s\q\squire\pub\download\glut.h

cd .. (you are back in cs437)

copy J:\umbc.edu\users\s\q\squire\pub\download\glut32.lib

copy J:\umbc.edu\users\s\q\squire\pub\download\glut32.dll

copy J:\umbc.edu\users\s\q\squire\pub\download\w1gl.c

copy J:\umbc.edu\users\s\q\squire\pub\download\cl_setup.bat

cl_setup (you are running the .bat file)

cl /GX /ML /I. w1gl.c

w1gl

On Mac OSX you can use Cocoa, the native Mac graphics,

or use "fink" to download X Windows, Motif, possibly OpenGL

if not installed.

Cocoa will look like

#import <Cocoa/Cocoa.h>
#import "your_stuff.h"

int main(int argc, char *argv[])
{
    return NSApplicationMain(argc, (const char **)argv);
}

or use the same command line commands as Linux, SunOS, or

any version of Unix.

X11, that is X Windows (different from native Mac OSX windows), can be

run on any Mac OSX computer. Here is the Makefile I have used for w1, etc.

# Makefile_mac_w1 for CS437
# after you have installed X windows, X11
# e.g. using /sw/bin/fink install
# compile anywhere, execute in an X11, xterm
# (each command line under a target must begin with a tab)
CC=gcc
CFLAGS= -I/sw/include -I/usr/X11R6/include
LIBX= -L/usr/X11R6/lib -L/sw/lib -lXext -lXm -lXt -lXi -lX11 -lm
LIBGL= -L/usr/X11R6/lib -L/sw/lib -lGLw -lGL -lGLU -lglut
LIBS=$(LIBGL) $(LIBX)

all: w1 w2 w1gl w2gl

w1: w1.c
	$(CC) $(CFLAGS) -o w1 w1.c $(LIBS)

w2: w2.c
	$(CC) $(CFLAGS) -o w2 w2.c $(LIBS)

w1gl: w1gl.c
	$(CC) $(CFLAGS) -o w1gl w1gl.c $(LIBS)

w2gl: w2gl.c
	$(CC) $(CFLAGS) -o w2gl w2gl.c $(LIBS)

Follow this link to Solve Setup Problems, Unix-Linux

Follow this link to Solve Setup Problems, Microsoft

Do not expect your system to be set up for GUI programming.

You are now into the expert programmer realm.

You must be able to find out how your specific computer is configured.

Use the command printenv | more to see your environment.

Specifically look at some environment variables:

echo $PATH # direct access to executable programs

echo $INCLUDE # direct access to include files

echo $LIB # direct access to linking libraries

You can modify environment variables for your use. In bash:

export LIB=$LIB:/your-directory-path

or in csh/tcsh:

setenv LIB ${LIB}:/your-directory-path
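Path-like environment variables hold a list of directories joined by a separator: ':' on Unix and Linux, ';' on Microsoft Windows (Python exposes the current system's separator as `os.pathsep`). A small Python 3 sketch, with hypothetical helper names `path_entries` and `append_dir`, that lists such a variable one directory per line (like `echo $PATH`, but readable) and appends a directory only if it is not already there:

```python
import os


def path_entries(value, sep=os.pathsep):
    """Split a PATH-like string into its entries, dropping empty ones.

    Note: on Unix an empty entry in PATH means the current directory,
    so dropping empties is a simplification for display purposes.
    """
    return [p for p in value.split(sep) if p]


def append_dir(value, new_dir, sep=os.pathsep):
    """Return value with new_dir appended at the end, unless already listed."""
    entries = path_entries(value, sep)
    if new_dir not in entries:
        entries.append(new_dir)
    return sep.join(entries)


if __name__ == "__main__":
    # Equivalent of `echo $PATH`, one directory per line.
    for d in path_entries(os.environ.get("PATH", "")):
        print(d)
```

A Python program can change only its own environment and that of programs it starts, not the shell that launched it, so a lasting change still goes in the shell commands or startup file.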

On some systems, X Windows and Motif may not be installed in default

directories. For these, use find /usr -name Xm.h -print

to get the include directory, CFLAGS= -I<path to directory>

CFLAGS= -I/usr/X11R6/include

Use find /usr -name libXm\* -print

to get the link directory, LIBX= -L<path to directory>

LIBX= -L/usr/X11R6/lib -lXm -lXt -lXi -lX11 -lm

Then use the expanded compile and link command in the Makefile

(the command line must begin with a tab character, not spaces):

gcc -o w1 $(CFLAGS) w1.c $(LIBS)

To get X windows manual pages, you may need, in your .bashrc file

MANPATH=/usr/local/man:/usr/X11R6/man

export MANPATH

or in your .cshrc file

setenv MANPATH /usr/local/man:/usr/X11R6/man

OpenGL use requires access to the file GL/gl.h

and libGL.so or libGL.a

For gl.h, use find /usr -name gl.h -print

to get the include directory, CFLAGS= -I<path to directory>

(do not keep the trailing "/GL" in the "path to directory")
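The rule "find gl.h, then drop the trailing GL" can be automated. A Python 3 sketch, assuming you search under one root as `find /usr -name gl.h -print` does; `find_files` and `include_dir_for` are hypothetical helper names, not part of the course files. The trailing GL is dropped because programs write `#include <GL/gl.h>`, so -I must name the directory that contains GL:

```python
import fnmatch
import os
import tempfile


def find_files(root, pattern):
    """Like `find root -name pattern -print`: every matching file path."""
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in fnmatch.filter(filenames, pattern):
            matches.append(os.path.join(dirpath, name))
    return sorted(matches)


def include_dir_for(header_path):
    """Directory to give -I, dropping a trailing GL so <GL/gl.h> resolves."""
    directory = os.path.dirname(header_path)
    if os.path.basename(directory) == "GL":
        directory = os.path.dirname(directory)
    return directory


def demo():
    """Create root/include/GL/gl.h in a temp dir and report what was found."""
    with tempfile.TemporaryDirectory() as root:
        gl_dir = os.path.join(root, "include", "GL")
        os.makedirs(gl_dir)
        open(os.path.join(gl_dir, "gl.h"), "w").close()
        found = find_files(root, "gl.h")
        # Relative paths keep the result independent of the temp directory name.
        return ([os.path.relpath(p, root) for p in found],
                os.path.relpath(include_dir_for(found[0]), root))
```

On a real system, looping over `find_files("/usr", "gl.h")` and printing `"CFLAGS= -I" + include_dir_for(p)` lists the candidate flags; walking all of /usr may take a while.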

CFLAGS= -I/web/www/help/C++/opengl/glut-3.7/include

For libGL, use find /usr -name libGL\* -print

to get the link directory, LIBGL= -L<path to directory>

LIBGL= -L/usr/lib -lGLw -lGL

glut use requires access to the file GL/glut.h

and libglut.so or libglut.a

For glut.h, use find /usr -name glut.h -print

to get the include directory, CFLAGS= -I<path to directory>

(do not keep the trailing "/GL" in the "path to directory")

For libglut, use find /usr -name libglut\* -print

to get the link directory, LIBGL= -L<path to directory>

LIBGL= -L/usr/lib -lglut -lGLw -lGL -lGLU

There may be systems where links may be missing in /usr/lib

On one system, it was necessary, to specifically include the

".so" file

LIBGL= /usr/lib/libglut.so.3 -lGLw -lGL -lGLU

Combine library information using:

LIBS=$(LIBGL) $(LIBX)

Then compile using:

gcc -o w1 $(CFLAGS) w1.c $(LIBS)
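That compile line is just the Makefile variables expanded in order. As an illustration only, here is a hypothetical Python 3 helper (requires Python 3.8+ for `shlex.join`) that assembles the same command as an argument list, the form `subprocess.run(cmd, check=True)` accepts. The libraries are placed after the source file because the GNU linker processes libraries in command-line order:

```python
import shlex


def gcc_command(source, output, include_dirs=(), lib_dirs=(), libs=()):
    """Argument list for: gcc -o output $(CFLAGS) source $(LIBS)."""
    cmd = ["gcc", "-o", output]
    cmd += ["-I" + d for d in include_dirs]   # CFLAGS
    cmd.append(source)
    cmd += ["-L" + d for d in lib_dirs]       # library search directories
    cmd += ["-l" + name for name in libs]     # libraries come after the source
    return cmd


if __name__ == "__main__":
    cmd = gcc_command("w1gl.c", "w1gl",
                      include_dirs=["/usr/X11R6/include"],
                      lib_dirs=["/usr/X11R6/lib"],
                      libs=["glut", "GLU", "GL", "Xm", "Xt", "X11", "m"])
    print(shlex.join(cmd))
```

Printing the command first, then running it, is a quick way to see exactly which -I and -L directories a build is using.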

You may want to use the Perl Script below to set up a UMBC lab

computer running Linux to have a friendly environment:

Be in a UMBC computer lab, booted up in Linux. Be in your cs437 directory.

ssh linux.gl.umbc.edu # log in, cd to your cs437 directory

do the above to get w1.c, w1gl.c and Makefile1

cp /afs/umbc.edu/users/s/q/squire/pub/download/oglsetup.pl.txt .

mv oglsetup.pl.txt oglsetup.pl

./oglsetup.pl

1

this should set up a directory and links, if successful:

./oglsetup.pl

2

Makefile1

this augments Makefile1

^D log off linux.gl.umbc.edu back to lab machine

make

This should compile, without error, w1.c and w1gl.c

w1 # run w1

w1gl # run w1gl; if it does not work, read the Perl script

Do not expect your system to be set up for GUI programming.

You are now into the expert programmer realm.

Use the command set | more to see your environment.

Specifically look at some environment variables:

echo %PATH% # direct access to executable programs

echo %INCLUDE% # direct access to include files

echo %LIB% # direct access to linking libraries

You can modify environment variables for your use using:

set LIB=%LIB%;S:\your-directory-path

On some systems, OpenGL and glut may not be installed in default

directories. If not, just copy the needed files to the required

directories. The assumption is that Microsoft Visual Studio is

installed. This is not free software and must be purchased in

order to have a C and C++ compiler and associated libraries.

The following shows the directories and the necessary files:

(uppercase is the same as lowercase on Microsoft)

(replace Microsoft Visual Studio\VC98 with

Microsoft Visual Studio .NET 2003\VC7\PlatformSDK

Microsoft Visual Studio .NET\VC7\PlatformSDK

Microsoft Visual Studio 9.0\VC

for various versions)

C:\Program Files\Microsoft Visual Studio\VC98\include\GL\gl.h

C:\Program Files\Microsoft Visual Studio\VC98\include\GL\glaux.h

C:\Program Files\Microsoft Visual Studio\VC98\include\GL\glu.h

C:\Program Files\Microsoft Visual Studio\VC98\include\GL\glut.h

C:\Program Files\Microsoft Visual Studio\VC98\lib\opengl32.lib

C:\Program Files\Microsoft Visual Studio\VC98\lib\glu32.lib

C:\Program Files\Microsoft Visual Studio\VC98\lib\glaux.lib

C:\Program Files\Microsoft Visual Studio\VC98\lib\glut32.lib

C:\Windows\System32\opengl32.dll

C:\Windows\System32\glu32.dll

C:\Windows\System32\glut32.dll

You can get these files, if not on your system, from

/afs/umbc.edu/users/s/q/squire/pub/download

basically 7 files glut32 and opengl32 for .lib and .dll

and gl.h glut.h glu.h

If you are not set up for Command Prompt "C" programming, you need

to set up Environment Variables

Mouse your way to Control Panel on your computer

System

Advanced

Environment Variables

You have a choice of "user variables " just for current user

or "system variables" apply to all users

Check or add for appropriate version:

lib ;C:\Program Files\Microsoft Visual Studio\VC98\lib

lib ;C:\Program Files\Microsoft Visual Studio .NET 2003\VC7\platformSDK\lib

lib ;C:\Program Files\Microsoft Visual Studio 9.0\VC\lib

include ;C:\Program Files\Microsoft Visual Studio\VC98\include

include ;C:\Program Files\Microsoft Visual Studio .NET 2003\VC7\platformSDK\include

include ;C:\Program Files\Microsoft Visual Studio 9.0\VC\include

path ;C:\Program Files\Microsoft Visual Studio\VC98\bin

path ;C:\Program Files\Microsoft Visual Studio\VC7\bin

path ;C:\Program Files\Microsoft Visual Studio 9.0\VC\bin

(Concatenate, separating them by a semicolon, ;)

To set your environment variable on GL for a UMBC lab machine:

Right click on "my computer" click on properties,

advanced,

environment variables.

Note: There may be a \Microsoft Visual Studio .net\ (no 2003 )

To find missing or misplaced .dll files

cd \

dir /s mspdb71.dll (this is an example, probably not found)

dir /s mspdb80.dll (for visual studio 9.0)

Then copy the misplaced .dll to \windows\system32

(it is safe to add .dll files to \windows\system32, but do not overwrite existing ones)

Now use a Command Prompt window to compile

cl /GX /ML w1gl.c

(Visual Studio 2005 and later removed /ML and replaced /GX with /EHsc; there use cl /EHsc w1gl.c)

Then execute the program

w1gl

You may use "nmake" on Microsoft, similar but not quite the same

as "make" or "gmake" on Unix-Linux

Note: When in command prompt window, the two commands:

cd \

dir /s opengl32.lib

will tell you if you have OpenGL and where the "lib" is

dir /s cl.exe will tell you the "path" to the compiler

dir /s gl.h will tell you where its "include" directory is.

You will probably have to add glut.h in directory with gl.h

You will probably have to add glut32.lib in directory with opengl32.lib

You will probably have to add glut32.dll in \windows\system32

or in working directory

Setup is a one time effort per machine per operating system.

Windows XP commands are command.help

Microsoft C and C++ compiler options are cl.help

Remember: Microsoft came after Unix and copied much.

Unix command line works in Microsoft command window

prog < data redirection of file 'data' to stdin

prog > reslt redirection of stdout to file 'reslt'

prog | more pipe output through 'more' same as Unix

prog -help often both /option and -option are allowed

"root" and directories are forward slash on Unix

"root" and directories are backward slash on Microsoft

some tools accept both " / " and " \ " on Microsoft, WWW, FTP, etc.

Microsoft 'nmake' is much like Unix 'make' or 'gmake'.

"C", "C++", Java, etc. languages are the same on both.

Microsoft has a case-insensitive file system, thus

use all lower case in programs for compatibility.

e.g. #include <stdio.h> /* include path */

#include "your.h" /* local directory */

fopen("my_file.stuff", "r");

Both take long file names. No more 8.3 restriction.

Both allow spaces but save the headache, use underscore, _.

Both use environment variables and substitution in scripts.

Know and use tools to help yourself be efficient.

You may wish to keep old versions of programs (renamed or in separate

directories) and use "diff" on Unix, "fc" on MS Windows to find

the DIFFerences using a File Compare tool.

An extension of the very basic w1.c is to use the mouse to

select points, then connect the points with lines.

You may download these programs, changing "1" to "2" in

the 'cp' commands in lecture 1.

cp /afs/umbc.edu/users/s/q/squire/pub/download/w2.c

cp /afs/umbc.edu/users/s/q/squire/pub/download/w2gl.c

cp /afs/umbc.edu/users/s/q/squire/pub/download/W2frame.java

cp /afs/umbc.edu/users/s/q/squire/pub/download/w2tk.py3

cp /afs/umbc.edu/users/s/q/squire/pub/download/rubber_box.py3

scp /afs/umbc.edu/users/s/q/squire/pub/download/wxmouse.py : C:\home

Modify the Makefile1.linux by copying the groups of lines and

also changing "1" to "2" in the copied lines.

After running the programs, look through the source code to

see how the mouse is handled (in a number of places!).

3D select will be covered in Lecture 11.

w2.c connect points X windows

w2gl.c - w2.c in OpenGL

W2frame.java - w2.c in Java

W2app.java - W2frame as an applet

w2tk.py python - in Python2 Tk

w2tk.py3 python3 - in Python3 tk

test_mouse.py python2 Tk basic mouse

test_mouse_py.out python2 output

test_mouse.py3 python3 tk basic mouse

test_mouse_py3.out python3 output

wxmouse.py python wx basic mouse on Windows

rubber_box.py3 python3 - in Python3 tk


One common GUI for the user to place objects at a position

with a user chosen size is to draw a "rubber band" rectangle.

This GUI feature uses "mouse motion" and typically has

the user first select the object to be placed, then press

and hold left mouse button down. The start coordinate is recorded

on the button down, the rectangle is displayed stippled (dashed)

while the user moves the mouse, then the end coordinate is

recorded on the button up.
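The rubber band logic itself is independent of the toolkit. Below is a minimal sketch, assuming hypothetical handler names `on_button_down`, `on_motion` and `on_button_up` that would be called from whatever mouse callbacks Xlib, GLUT or Java provides. Drawing is left out; the struct only records the two corners, and the rectangle is normalized so it works whichever direction the user drags.

```c
#include <stdbool.h>

/* Hypothetical toolkit-independent rubber band state. */
typedef struct {
    bool active;        /* true while the left button is held down */
    int x0, y0;         /* corner recorded on button down */
    int x1, y1;         /* current (or final) corner */
} RubberBand;

typedef struct { int left, top, width, height; } Rect;

/* Button down: record the start coordinate. */
void on_button_down(RubberBand *rb, int x, int y) {
    rb->active = true;
    rb->x0 = rb->x1 = x;
    rb->y0 = rb->y1 = y;
}

/* Mouse motion: update the moving corner; the caller would redraw
   the dashed (stippled) outline of rubber_rect() here. */
void on_motion(RubberBand *rb, int x, int y) {
    if (!rb->active) return;
    rb->x1 = x;
    rb->y1 = y;
}

/* Normalized rectangle, valid whichever direction the user dragged. */
Rect rubber_rect(const RubberBand *rb) {
    Rect r;
    r.left   = rb->x0 < rb->x1 ? rb->x0 : rb->x1;
    r.top    = rb->y0 < rb->y1 ? rb->y0 : rb->y1;
    r.width  = rb->x0 < rb->x1 ? rb->x1 - rb->x0 : rb->x0 - rb->x1;
    r.height = rb->y0 < rb->y1 ? rb->y1 - rb->y0 : rb->y0 - rb->y1;
    return r;
}

/* Button up: record the end coordinate and return the final rectangle. */
Rect on_button_up(RubberBand *rb, int x, int y) {
    on_motion(rb, x, y);
    rb->active = false;
    return rubber_rect(rb);
}
```

Dragging from (50,40) up and left, then releasing at (20,60), still yields a rectangle with left 20, top 40, width 30 and height 20.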

Most systems provide a three button mouse with the buttons

labeled left, middle and right or 1, 2 and 3. Any of the buttons

may be used for any action, yet users expect the left button to

be used for the most common actions.

First the code is shown for just showing the rubber band

rectangle.

rubber.c Xlib code

rubbergl.c GL code

Rubber.java Java code

Next the code is augmented to draw rectangles and do

selections. Now the code leaves a red rectangle when the

mouse button comes up. Note: "select" is also available.

With multiple rectangles on the scene, left click in one

rectangle, then another. Note that the selected "object" is

changed to green color.
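Selecting with a left click can be sketched as a hit test over the list of rectangles: the click point is tested against each one, and the last one drawn (the one on top) wins. `Shape` and `select_at` are hypothetical names; a real program would then redraw the selected rectangle in green.

```c
#include <stddef.h>

typedef struct { int left, top, width, height; } Shape;

/* True if (x, y) lies inside r (edges inclusive). */
static int contains(const Shape *r, int x, int y) {
    return x >= r->left && x <= r->left + r->width &&
           y >= r->top  && y <= r->top + r->height;
}

/* Index of the topmost shape under (x, y), or -1 for none.
   Shapes later in the array are drawn later, so they are on top. */
int select_at(const Shape *shapes, size_t n, int x, int y) {
    for (size_t i = n; i > 0; i--)
        if (contains(&shapes[i - 1], x, y))
            return (int)(i - 1);
    return -1;
}
```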

rubber1.c Motif code

rubber1gl.c GL code

Rubber1.java Java code

An option is to have a grid and snap to grid.

The grid is always on in this example, yet should be under menu

control (grid spacing, snap, hide, etc. as shown in "draw" demo.)

I consider a grid essential on a mouse input GUI.
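Snap to grid is a rounding operation on each mouse coordinate. A minimal sketch, assuming integer pixel coordinates and a positive grid spacing (the name `snap` is hypothetical):

```c
/* Round v to the nearest multiple of spacing (spacing > 0).
   Also correct for negative coordinates; halves round upward. */
int snap(int v, int spacing) {
    int r = v % spacing;          /* C remainder has the sign of v */
    if (r < 0) r += spacing;      /* now 0 <= r < spacing */
    return (2 * r < spacing) ? v - r : v - r + spacing;
}
```

Applying `snap` to both x and y on button down and on button up makes the rubber band rectangle land exactly on grid points.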

rubber2.c Motif code

rubber2gl.c GL code

Rubber2.java Java code

Visual Effects, visual understanding

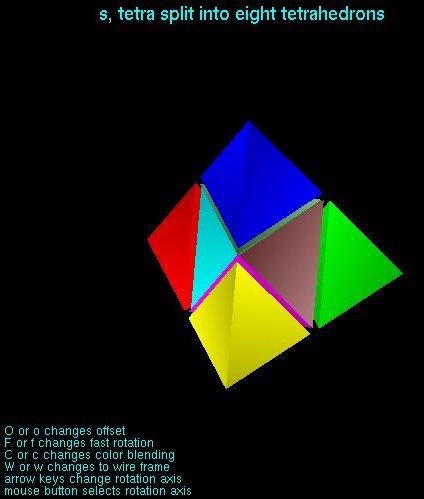

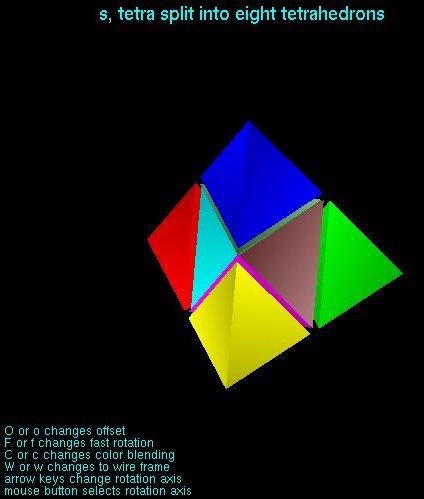

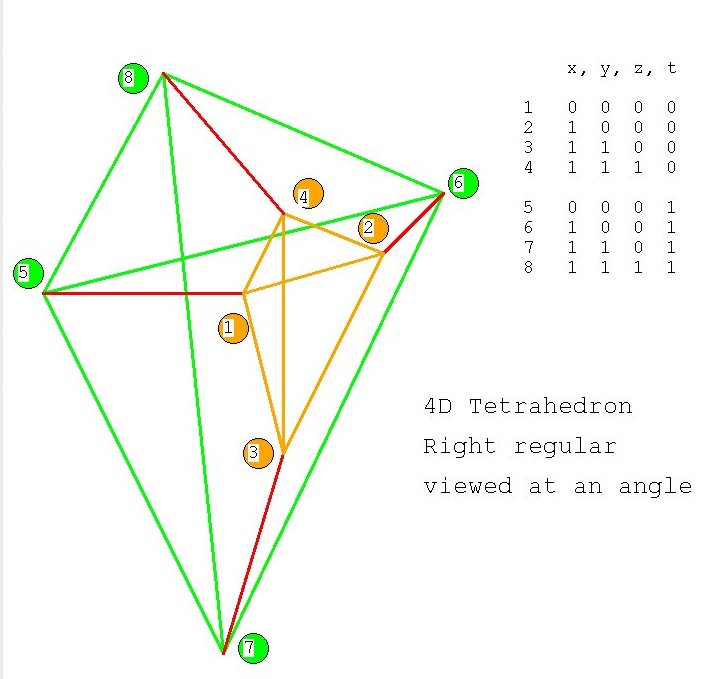

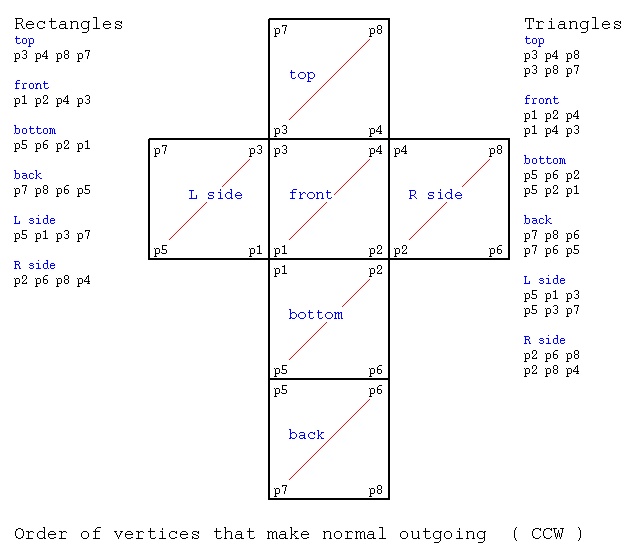

The program split_cube.c shows

a solid cube that is made up of five (5) tetrahedrons.

This would be hard to visualize without some considerations:

1) In order to see how the cube is constructed, an offset is provided.

("O" for larger offset, "o" for smaller offset, down to zero)

Note: at very small or zero offset, it is hard to understand how

the cube is built.

2) In order to see how the cube is constructed, the viewer may

change the axis of rotation (from the present orientation).

(Mouse press left, mouse press right, switches axis of rotation.

If there is a middle mouse button, that also switches the axis

of rotation.)

Note: In this example, almost every axis of rotation provides

a lot of information.

3) In order to see how the cube is constructed, the colors of

adjacent faces are made unequal. This is accomplished by

slightly changing the color of the vertices of the triangles

that make up the faces of the tetrahedrons.

("C" for larger contrast, "c" for smaller contrast, down to zero)

Note: at very small or zero color contrast, it is hard to

understand the shape of the rotating objects.

4) In order to see how the cube is constructed, the speed

of rotation must be reasonable for the viewer.

A static image does not convey all the information about

how the cube is constructed.

("F" for faster rotation, "f" for slower, down to zero)

Note: at very small or zero rotation, it is hard to

understand the shape of the rotating objects.

5) In order to see how the cube is constructed, a wireframe

can be displayed for the viewer.

The wireframe shows edges of polyhedrons. In this case,

the five tetrahedrons, each with unique color edges.

("W" for wireframe, "w" for solid)

Note: the edges merge and only one color is

displayed when the offset goes to zero.

Experiment with rotations (speed and direction), color shade,

offsets, and wireframe vs solid. Consider what information

your viewers need from your application. Provide the appropriate

user interface.
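One standard way to cut a unit cube into five tetrahedra uses a central tetrahedron on four alternate corners plus one tetrahedron at each of the other four corners. Whether split_cube.c uses exactly this split is an assumption. The sketch lists the pieces, checks that their volumes add to the cube's volume of 1 (central piece 1/3, each corner piece 1/6), and computes a hypothetical "exploded" offset that moves each piece away from the cube center through its centroid, one possible reading of the "O"/"o" offset control.

```c
/* Cube corner i has coordinates (bit0, bit1, bit2) of i. */
static void corner(int i, double p[3]) {
    p[0] = i & 1; p[1] = (i >> 1) & 1; p[2] = (i >> 2) & 1;
}

/* Five tetrahedra as corner indices: one central, four at corners. */
static const int TETRA[5][4] = {
    {0, 3, 5, 6},   /* central tetrahedron, volume 1/3 */
    {1, 0, 3, 5},   /* corner (1,0,0) */
    {2, 0, 3, 6},   /* corner (0,1,0) */
    {4, 0, 5, 6},   /* corner (0,0,1) */
    {7, 3, 5, 6},   /* corner (1,1,1) */
};

/* Volume of tetrahedron t: |det(b-a, c-a, d-a)| / 6. */
double tetra_volume(int t) {
    double a[3], b[3], c[3], d[3], u[3], v[3], w[3];
    corner(TETRA[t][0], a); corner(TETRA[t][1], b);
    corner(TETRA[t][2], c); corner(TETRA[t][3], d);
    for (int k = 0; k < 3; k++) {
        u[k] = b[k] - a[k]; v[k] = c[k] - a[k]; w[k] = d[k] - a[k];
    }
    double det = u[0] * (v[1] * w[2] - v[2] * w[1])
               - u[1] * (v[0] * w[2] - v[2] * w[0])
               + u[2] * (v[0] * w[1] - v[1] * w[0]);
    return (det < 0 ? -det : det) / 6.0;
}

/* Translation for tetrahedron t: from the cube center (0.5,0.5,0.5)
   toward the piece's centroid, scaled by `offset`. */
void tetra_offset(int t, double offset, double out[3]) {
    double p[3], cen[3] = {0, 0, 0};
    for (int j = 0; j < 4; j++) {
        corner(TETRA[t][j], p);
        for (int k = 0; k < 3; k++) cen[k] += p[k] / 4.0;
    }
    for (int k = 0; k < 3; k++) out[k] = (cen[k] - 0.5) * offset;
}
```

With this split the central piece stays put as the offset grows and the four corner pieces move out toward their corners.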

Then try split_cube6.c run from

the command line with split_cube6 -x

Unrecognizable, thus slow it down with f's. Change rotation with mouse

and open it up with uppercase O's. Deepen colors with uppercase C.

Two dimensional static pictures do not have the visualization

capability of user movable and colorable objects.

Similarly, for a tetrahedron:

split_tetra2.c

A classic demonstration that measures frames per second

is gears.c. Compile and run

this demonstration. Note use of either letter keys

'x' 'y' 'z' or arrow keys.
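Frames per second is simply frames counted divided by elapsed time. A minimal sketch that reports once per interval, assuming the caller passes the current time in seconds from whatever clock is available (for example `glutGet(GLUT_ELAPSED_TIME) / 1000.0` in GLUT, which returns milliseconds). `FpsCounter` and its functions are hypothetical names, not taken from gears.c.

```c
typedef struct {
    double start;     /* time the current interval began, seconds */
    int frames;       /* frames drawn in the current interval */
    double fps;       /* last reported frames per second */
} FpsCounter;

void fps_init(FpsCounter *c, double now) {
    c->start = now; c->frames = 0; c->fps = 0.0;
}

/* Call once per drawn frame. Returns 1 when a new fps value is ready,
   i.e. at least `interval` seconds have passed since the last report. */
int fps_frame(FpsCounter *c, double now, double interval) {
    c->frames++;
    double elapsed = now - c->start;
    if (elapsed < interval) return 0;
    c->fps = c->frames / elapsed;
    c->start = now;
    c->frames = 0;
    return 1;
}
```

Averaging over an interval (say one second) keeps the displayed number readable instead of jumping every frame.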


Human Factors considerations

These are very loose time estimates and there is significant

variation from person to person, yet the concepts are worth

covering.

Human beings are very slow compared to computers in many

situations. But, human beings get very impatient if the

computer does not respond in a timely manner.

What is timely?

A person sees an event and must take action. Here is

the approximate time line:

1/10 second to "see" or recognize the event.

1/10 second to make a decision to take action

1/10 second to physically move a finger (e.g. press a key)

Thus, the fastest a person can respond to a "message" on a

computer screen is three tenths of a second.

A person presses a key and expects a response from the computer.

The person needs at least 1/10 second to "see" that there is

a response. Another 1/10 second to "understand" the response.

There seems to be some dead time between the key press and

expecting to "see" the response. Experiments have been conducted

and found, on average, that a computer response within one-half

second did not slow down most users. A few users could tell the

difference between two tenths of a second response and three

tenths of a second response. On a modern computer with multiple

pipelines and a 3GHz clock, about one billion instructions can

be executed in one tenth of a second.

There is a tradeoff that the GUI programmer has to make.

For rapid response activities, low quality images may be

needed and may be acceptable. For activities where the user

is creating, more quality may be needed and slower response

may be acceptable. For example, OpenGL lines are limited to

square ends while basic X Windows and Microsoft Windows allow

options for round ends, or for extending each end by one-half the

line width, in order to provide a smooth sequence of connected lines.

Consider a fast typist. Assume a person who can type 50 words

per minute. The definition of a word is five characters and a space.

Thus, 300 key presses per minute or 5 key presses per second.

But, that only allows two 1/10 second time periods per character.

Thus, the typist is multiplexing, reading ahead, selecting keys,

and pressing keys overlapped in time.
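The typing arithmetic above can be written out as two small functions, using the text's definition of a word as five characters plus a space (six key presses). The function names are hypothetical.

```c
/* Key presses per second at `wpm` words per minute,
   with six key presses (five characters and a space) per word. */
double keys_per_second(double wpm) {
    return wpm * 6.0 / 60.0;
}

/* How many 1/10 second periods are available per key press. */
double tenth_second_periods_per_key(double wpm) {
    return 10.0 / keys_per_second(wpm);   /* (1 / rate) / 0.1 */
}
```

At 50 words per minute this gives 5 key presses per second and only two 1/10 second periods per key, fewer than the three (see, decide, press) a single isolated response needs, which is why the typist must overlap them.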

Color is in the category of "in the eyes of the beholder".

There is a good reason why American traffic lights have

red on top, yellow in middle and green on bottom as a standard.

There are many forms of "color blind" and thus the standard

position with each color emitting light is the "event" that

a driver senses. For GUI programming, file menu on the left and

help menu on the right is a de facto standard for the same

reason. Users are more efficient, and happy, when they spend

less time hunting for what they need.

Common color issues are red appearing as grey, green and

blue indistinguishable, etc. The GUI programmer can avoid

these concerns by using intensity to create contrast.
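One way to check that two colors stay distinguishable to a viewer who cannot rely on hue is to compare their luminance. This sketch uses the 0.299/0.587/0.114 gray scale weights given later in these notes; the threshold passed in is an assumption to tune, not a standard value.

```c
/* Luminance of an RGB color with components in 0.0 .. 1.0. */
double luma(double r, double g, double b) {
    return 0.299 * r + 0.587 * g + 0.114 * b;
}

/* Nonzero if the two colors differ in brightness by at least
   min_diff, so they can be told apart without relying on hue. */
int enough_contrast(const double c1[3], const double c2[3], double min_diff) {
    double d = luma(c1[0], c1[1], c1[2]) - luma(c2[0], c2[1], c2[2]);
    if (d < 0) d = -d;
    return d >= min_diff;
}
```

Pure red (luminance about 0.30) and a half-intensity green (about 0.29) are nearly the same brightness, so they fail a 0.2 threshold even though they look very different to most viewers.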

Rerun split_cube using "c" held down, then "C" held down, repeat,

to see the visual effect.

User interface speed comparing MAC OSX and Windows XP was measured

and reported in UIF_Report.pdf

The term "User Interface Friction" means friction that slows

down the user. This varies with user capability.

I call it fluff vs. function.

Let the user know what will happen

Give the user feedback

Online there are many helpful hints on user interface design.

I like JJ Garrett's wisdom as given in his nine pillars of

successful web teams: It is competent people in each of these

nine areas that are more important than roles, job descriptions,

tools or process. Then, in his elements of user experience,

he asks: "What do you expect to happen if you click there?"

"Think visually." Does the user get positive feedback to know

the expected action happened? Consider a person setting a

new alarm clock for the first time. Is it really set? Might I

miss my important meeting tomorrow morning?

For students using Microsoft Windows, on linux.gl.umbc.edu

download from

/afs/umbc.edu/users/s/q/squire/pub/download

glut32.lib

glut32.dll

opengl32.lib

opengl32.dll

glut.h

opengl.h

Then copy these files to your Windows laptop in cs437 folder.

Create a sub folder named GL. Into that folder

download and copy gl.h glu.h

You need a "C" compiler, e.g. Visual Studio.

(my executables still ran in Windows 10)

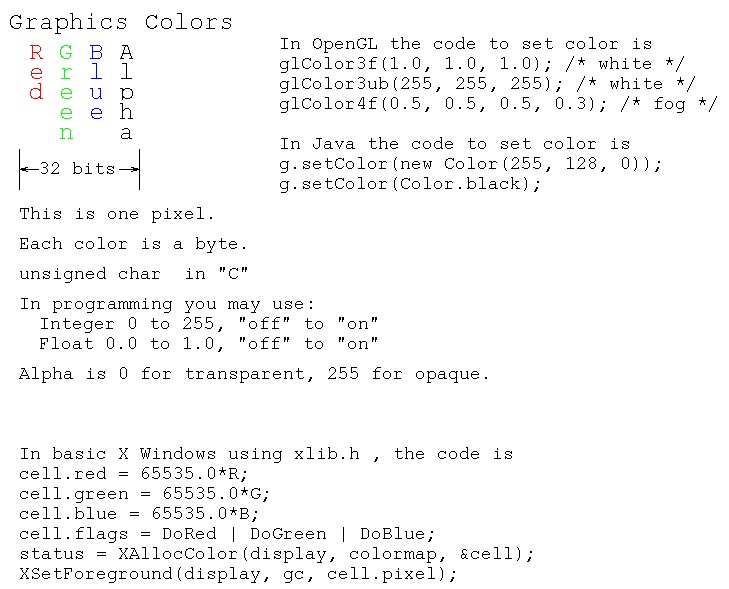

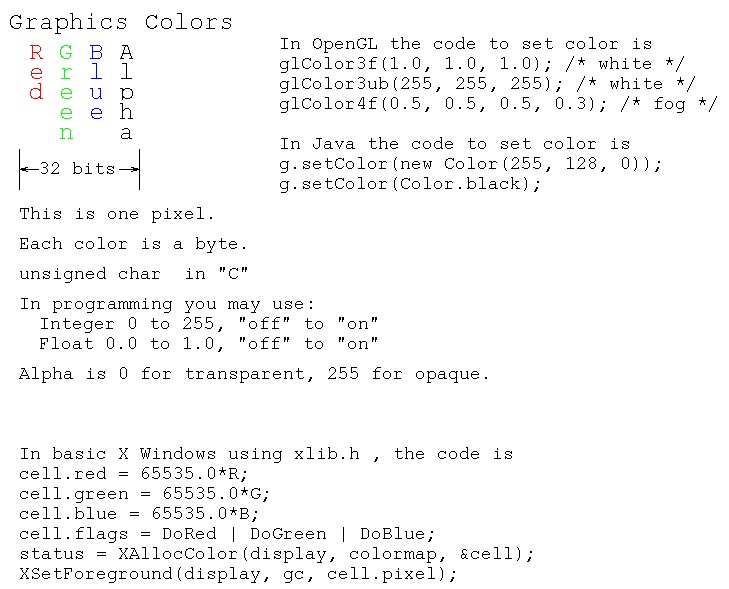

With pigment paint, the "primary colors" are red, blue and yellow.

With electronic displays the "primary colors" are red, green and blue.

The program Jcolor.java uses the built in names for colors and

lists the numeric values that correspond to the red, green and blue

color components. The output of Jcolor.java is:

Notice that each color has a group of three numbers that represent

the amount of red, green and blue, hereafter referred to as RGB.

RGB = 0,0,0 is black, no color.

RGB = 255, 255, 255 is white, all color.

RGB = 255,0,0 is red.

RGB = 0,255,0 is green.

RGB = 0,0,255 is blue.

A more complicated Java program, consisting of three files that need

to be compiled, gives the user "sliders" to choose a color.

The Red, Green and Blue components can be selected independently.

MyColorChooser.java

DrawPanel.java

PaletteFrame.java

in Python3

color_chooser.py3 source code

an optional Java applet is:

PaletteApp.java

PaletteApp.html

A sample of PaletteFrame output is:


In programming there is usually an alternative floating point

RGB with the color components in the range 0.0 to 1.0, equivalent

to 0 to 255.

A fourth component, "Alpha" (A), the opacity, can be present, making

the RGBA of a pixel. For alpha, 0 is fully transparent and 1.0 (or 255)

is fully opaque.
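Converting between the two conventions is a scale by 255; rounding on the way back keeps values from drifting down after repeated round trips. A minimal sketch with hypothetical function names, also packing RGBA into one 32-bit word with R in the most significant byte, which is only one of several byte orders in use (ARGB and BGRA are also common).

```c
#include <stdint.h>

/* 0 .. 255 component to 0.0 .. 1.0 */
float byte_to_float(uint8_t v) { return v / 255.0f; }

/* 0.0 .. 1.0 component to 0 .. 255, clamped and rounded */
uint8_t float_to_byte(float f) {
    if (f <= 0.0f) return 0;
    if (f >= 1.0f) return 255;
    return (uint8_t)(f * 255.0f + 0.5f);
}

/* Pack into one 32-bit RGBA word, R in the most significant byte. */
uint32_t pack_rgba(uint8_t r, uint8_t g, uint8_t b, uint8_t a) {
    return ((uint32_t)r << 24) | ((uint32_t)g << 16) |
           ((uint32_t)b << 8) | a;
}
```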


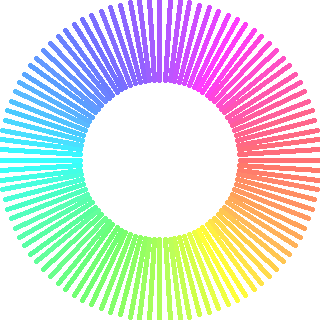

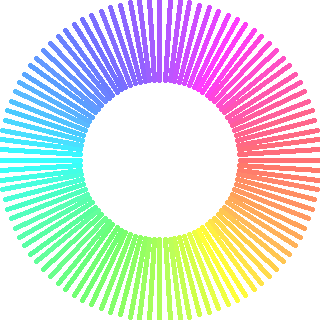

A sample of X Windows coding of a colorwheel is colorw.c, which uses calculated values for colors. The output is


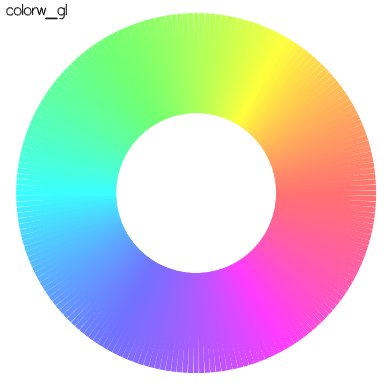

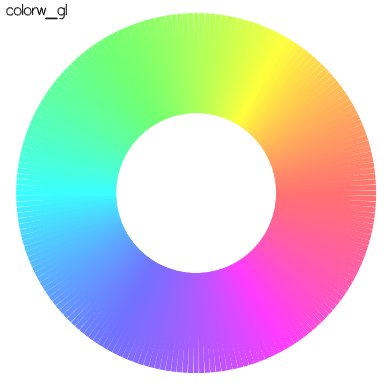

A sample of OpenGL coding of a colorwheel is colorw_gl.c

Note: calculated values for colors. The output is


A sample of python coding of a colorwheel is colorw.py

Note: calculated values for colors. The output is

in Python3

colorw.py3 source code
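A color wheel can be computed by sweeping hue around the circle at full saturation and brightness. Whether colorw.c, colorw_gl.c and colorw.py calculate their colors exactly this way is an assumption; the sketch below is the common HSV-to-RGB mapping restricted to saturation = value = 1, with hue in degrees (0 red, 120 green, 240 blue).

```c
/* Fully saturated, full brightness color at `hue` degrees.
   Components are written to rgb[] in the range 0.0 .. 1.0. */
void hue_to_rgb(double hue, double rgb[3]) {
    while (hue < 0.0)    hue += 360.0;
    while (hue >= 360.0) hue -= 360.0;
    int sector = (int)(hue / 60.0);      /* 0 .. 5 */
    if (sector > 5) sector = 5;          /* guard against rounding */
    double f = hue / 60.0 - sector;      /* 0.0 .. 1.0 within sector */
    double up = f, down = 1.0 - f;
    switch (sector) {
    case 0:  rgb[0] = 1;    rgb[1] = up;   rgb[2] = 0;    break; /* red -> yellow */
    case 1:  rgb[0] = down; rgb[1] = 1;    rgb[2] = 0;    break; /* yellow -> green */
    case 2:  rgb[0] = 0;    rgb[1] = 1;    rgb[2] = up;   break; /* green -> cyan */
    case 3:  rgb[0] = 0;    rgb[1] = down; rgb[2] = 1;    break; /* cyan -> blue */
    case 4:  rgb[0] = up;   rgb[1] = 0;    rgb[2] = 1;    break; /* blue -> magenta */
    default: rgb[0] = 1;    rgb[1] = 0;    rgb[2] = down; break; /* magenta -> red */
    }
}
```

To draw the wheel, each point at angle phi (in degrees) from the center gets `hue_to_rgb(phi, ...)`.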


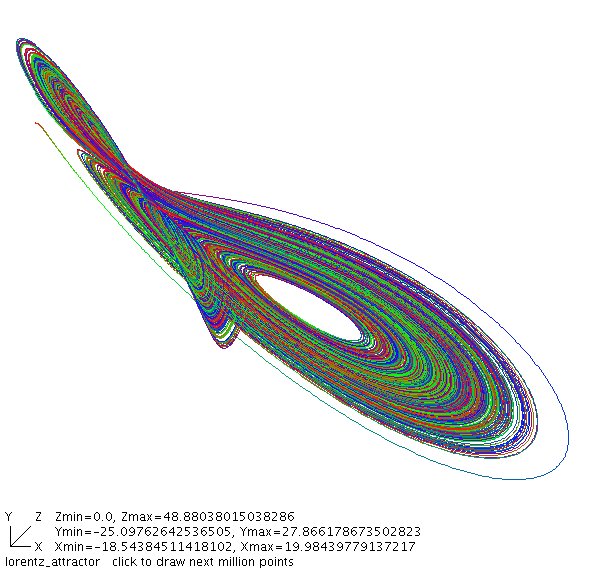

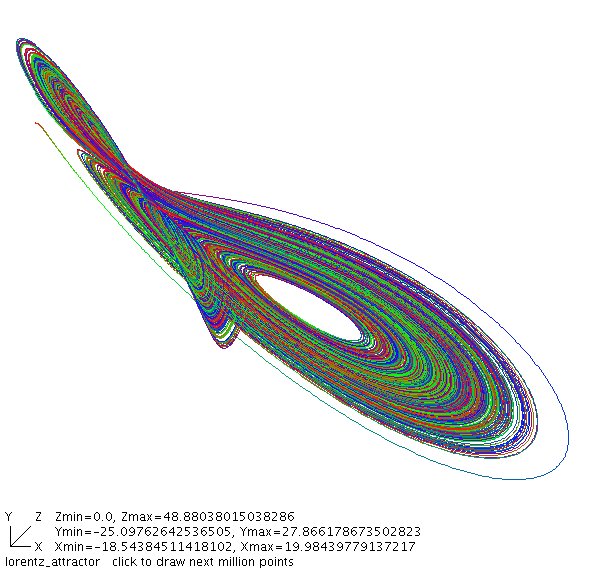

A sample of Java coding of dynamic changing colors is lorentz_attractor.java

Note: different calculated values for colors.

Execute code.

The first output is


X Windows defines many more color names than Java;

these are available in rgb.txt

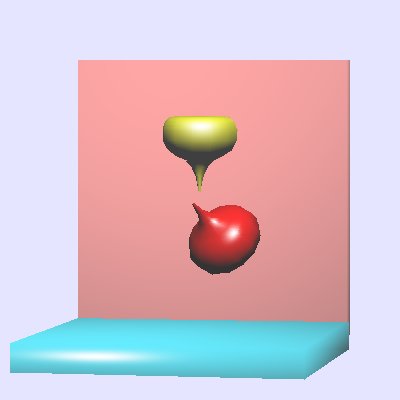

Colors are used in combination with lighting to fool the eye into

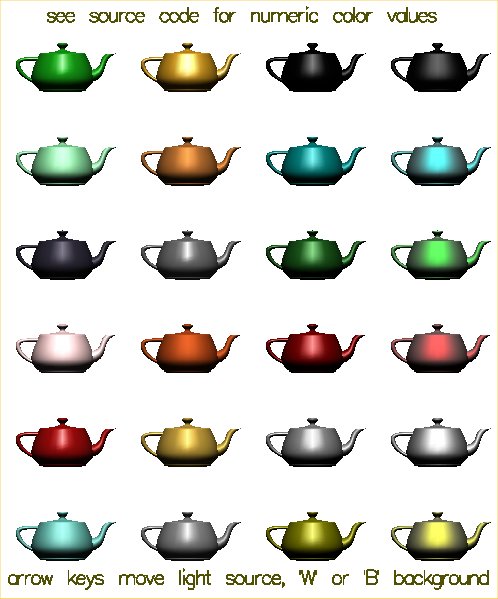

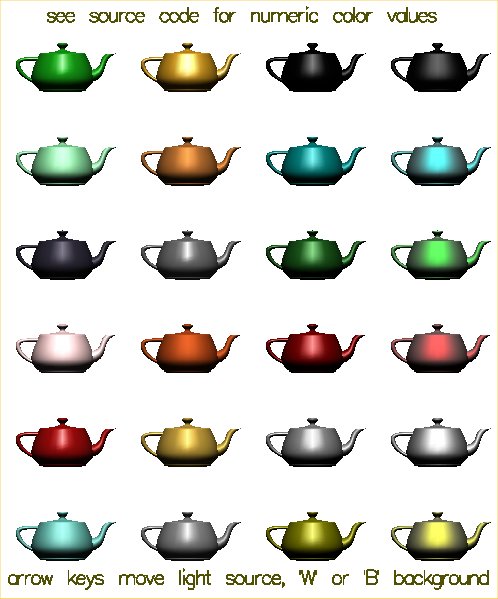

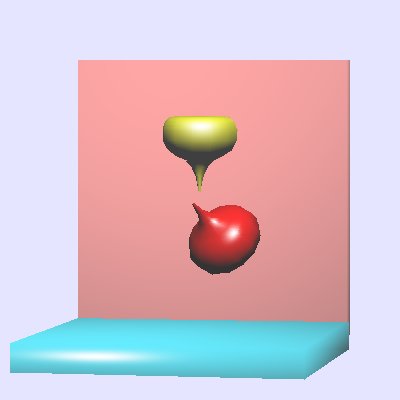

seeing various textures. teapots.c renders the

Utah Teapot with various colors and surfaces to provide the image.

10 values are used for each surface: Ambient RGB, Diffuse RGB,

Specular RBG and shine. See numeric values below renderTrapot.


There are many formats for graphics files. Two of the most commonly used

on the WWW are .gif and .jpg, Gif and Jpeg image files. Most graphics

formats can be converted to most other graphics formats. A common program

used for modifying images and changing formats is Paint Shop Pro. A free

version of this program may be downloaded from the WWW for MS Windows.

A similar program for Linux is Gimp which comes with many Linux

distributions and may also be freely downloaded.

Images may be scanned, captured from the WWW and created using a graphics

editor. In order to use graphics in your application program, you need

to be able to read the specific file format. Two demonstration programs

alpha_fade.c and alpha_fade2.c

are provided with respective files gifread.c and

jpegread.c

These demonstration programs read four .gif or .jpg files and also

demonstrate the use of "Alpha" to fade from one image to the next.
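A fade blends each pixel of the outgoing image with the incoming one, weighted by alpha. A minimal sketch on 8-bit pixel bytes, assuming both images have the same size; `fade_step` is a hypothetical name, and the demonstration programs may instead let OpenGL do the blending (`glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)` computes the same weighted sum).

```c
#include <stddef.h>
#include <stdint.h>

/* out = alpha * to + (1 - alpha) * from, per byte, alpha in 0.0 .. 1.0.
   Stepping alpha from 0 to 1 over successive frames fades `from` into `to`. */
void fade_step(const uint8_t *from, const uint8_t *to, uint8_t *out,
               size_t nbytes, double alpha) {
    if (alpha < 0.0) alpha = 0.0;
    if (alpha > 1.0) alpha = 1.0;
    for (size_t i = 0; i < nbytes; i++)
        out[i] = (uint8_t)(alpha * to[i] + (1.0 - alpha) * from[i] + 0.5);
}
```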

An example deck of cards as .gif files with an OpenGL display program

is in the directory download/cards_gif

The program card_gl.c that uses gif.h and

gifread.c displays and shuffles the deck to display card_gl.jpg

An example deck of cards as .xbm files with an OpenGL display program

is in the directory download/cards_xbm

The program cards_gl.c that uses

xbmread.c displays and shuffles the deck to display cards_gl.jpg
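Shuffling a deck is usually done with the Fisher-Yates algorithm, which makes every ordering equally likely given a good random source. Whether card_gl.c and cards_gl.c shuffle this way is not shown here. The sketch uses the C library `rand()`, which is adequate for a card display demo (it has a slight modulo bias) but not for anything where fairness really matters.

```c
#include <stdlib.h>

/* Fill deck with 0 .. n-1, then shuffle in place (Fisher-Yates).
   Card number k would then select the image file for that card. */
void shuffle_deck(int *deck, int n) {
    for (int i = 0; i < n; i++) deck[i] = i;
    for (int i = n - 1; i > 0; i--) {
        int j = rand() % (i + 1);      /* 0 <= j <= i */
        int tmp = deck[i];
        deck[i] = deck[j];
        deck[j] = tmp;
    }
}
```

Call `srand(time(NULL))` once at startup (with `<time.h>`) so each run deals a different order.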

An example Java program to display .gif .jpg and .png files is

ImageDisplay.java

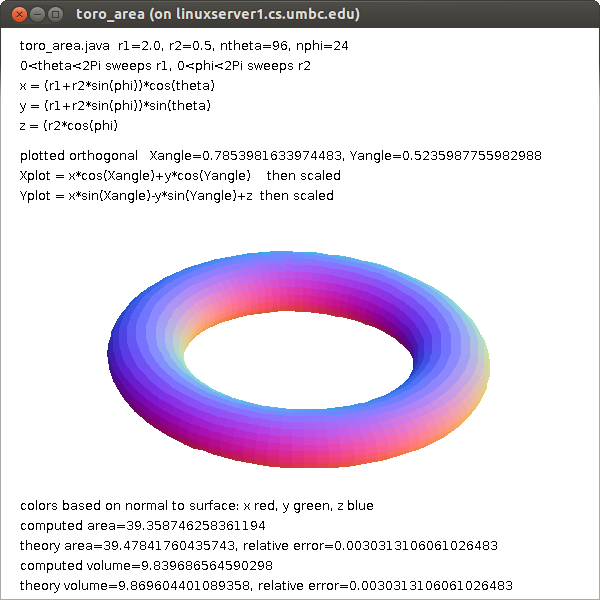

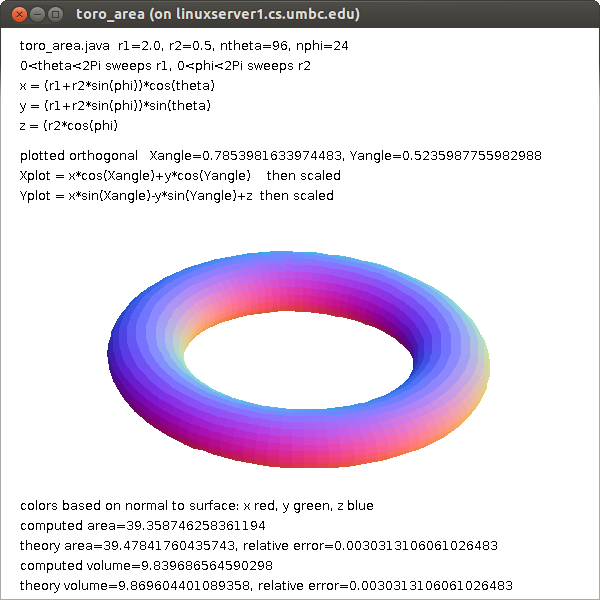

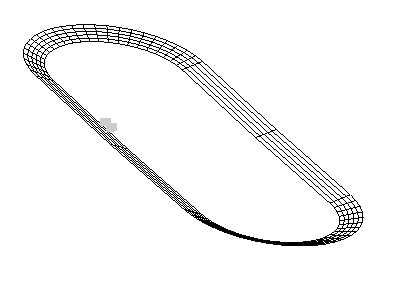

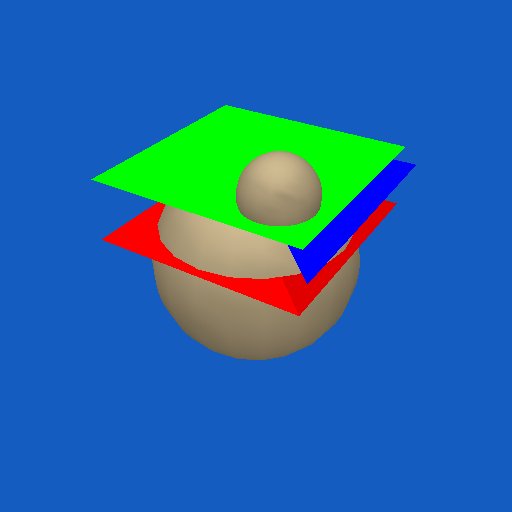

An example of using color on a 3D rendered object, toroid

toro_area.java


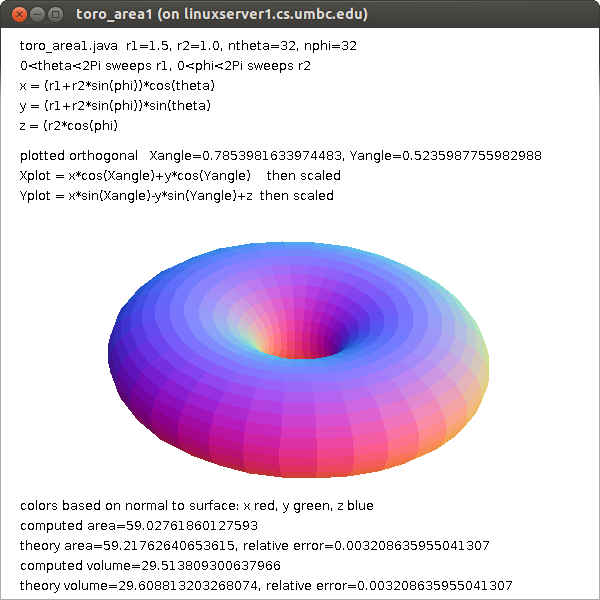


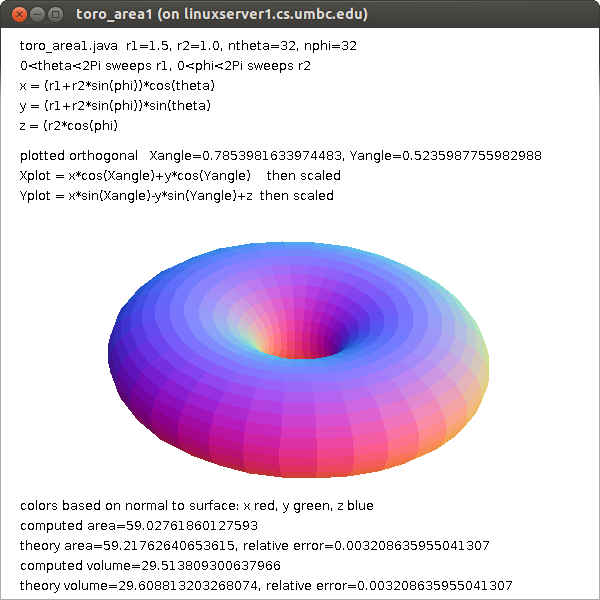

toro_area1.java fewer points


Many other graphics formats can be read. Some have code available on the WWW,

but for some you may have to write your own code to read the format. The basic

structure of graphic image files is a header with information about the

image such as height and width in pixels. Then there is generally a

Color Table, often coded as "ct". The basic idea is to have a set of colors,

a set of RGB's, stored in a table and then use one unsigned byte for

each pixel. The value in the unsigned byte is an index into the Color Table.

The terminology is that a color table with RGB components of eight bits

has 24 bits for each color or 2^24, over 16 million, possible colors.

The Color Table may have a maximum of 256 entries, called the palette,

for this particular image. An unsigned byte can index from 0 to 255, thus

selecting one of the 256 colors in the palette for each pixel.
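Expanding an indexed (palette) image into RGBA pixels is one table lookup per pixel. A minimal sketch, assuming a decoder has already produced the palette as 256 RGB triples and one index byte per pixel; the names are hypothetical and alpha is set to opaque.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct { uint8_t r, g, b; } PaletteEntry;

/* Convert npix palette indices into RGBA bytes (4 per pixel). */
void expand_indexed(const uint8_t *index, size_t npix,
                    const PaletteEntry palette[256], uint8_t *rgba) {
    for (size_t i = 0; i < npix; i++) {
        PaletteEntry c = palette[index[i]];   /* unsigned byte: 0 .. 255 */
        rgba[4 * i + 0] = c.r;
        rgba[4 * i + 1] = c.g;
        rgba[4 * i + 2] = c.b;
        rgba[4 * i + 3] = 255;                /* opaque */
    }
}
```

The file stores one byte per pixel plus at most 768 bytes of palette, while the expanded image in RAM needs four bytes per pixel.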

Some graphics image formats allow compression such that the original

image is not exactly reproduced yet can look acceptable. This saves on

disk space and computer input/output time yet uses more CPU time.

But, in your application program, each pixel is usually stored as

a 32 bit word, RGBA. Note that OpenGL texture mapping files are stored

just as they would appear in RAM in your application. X Windows

bitmap files, such as d13.xbm, are actually "C" header files, text

with the bits encoded as hexadecimal. The .xbm files can be read at execution time

or included with "#include". For use in OpenGL, use xbmread.c as

tested in xbm_to_gl.c

Each pixel in the .xbm file is on or off. The user specifies the

foreground and background color.
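An .xbm file is C text like the hypothetical `arrow` bitmap below: width and height as #defines and the bits as a byte array. Each row is padded to a whole number of bytes, and the leftmost pixel of each byte is its least significant bit. The sketch expands the bits to RGB using caller-chosen foreground and background colors; it illustrates the format and is not xbmread.c.

```c
#include <stdint.h>

/* A tiny bitmap in .xbm syntax (hypothetical example data). */
#define arrow_width 8
#define arrow_height 4
static unsigned char arrow_bits[] = { 0x01, 0x03, 0x07, 0x55 };

/* Expand width x height XBM bits into RGB bytes (3 per pixel):
   a set bit gets fg, a clear bit gets bg. */
void xbm_to_rgb(const unsigned char *bits, int width, int height,
                const uint8_t fg[3], const uint8_t bg[3], uint8_t *rgb) {
    int bytes_per_row = (width + 7) / 8;     /* rows are byte padded */
    for (int y = 0; y < height; y++)
        for (int x = 0; x < width; x++) {
            int on = (bits[y * bytes_per_row + x / 8] >> (x % 8)) & 1;
            const uint8_t *c = on ? fg : bg;
            uint8_t *p = rgb + 3 * (y * width + x);
            p[0] = c[0]; p[1] = c[1]; p[2] = c[2];
        }
}
```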

Just basic colors are not enough to get good looking graphics.

Shading across each, usually small, polygon provides the finishing

touch.

The subject of "lighting" will be covered in a future lecture.

Gouraud shading interpolates the colors at the vertices across the polygon.

Phong specular shading interpolates the normal vector at the vertices

across the polygon. More will be discussed on lighting in a later lecture.
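Gouraud shading can be sketched as barycentric interpolation: a point inside the triangle gets the vertex colors weighted by its barycentric coordinates. This is a minimal illustration with hypothetical names, 2D screen coordinates and a non-degenerate triangle; in OpenGL the same interpolation is done for you with `glShadeModel(GL_SMOOTH)`.

```c
typedef struct { double x, y; double rgb[3]; } Vertex;

/* Color at (px, py) inside triangle a, b, c by linear interpolation
   of the vertex colors (Gouraud shading). */
void gouraud_color(const Vertex *a, const Vertex *b, const Vertex *c,
                   double px, double py, double out[3]) {
    double area = (b->x - a->x) * (c->y - a->y) - (c->x - a->x) * (b->y - a->y);
    /* barycentric weight of each vertex: area of the sub-triangle
       opposite that vertex, divided by the whole (signed) area */
    double wa = ((b->x - px) * (c->y - py) - (c->x - px) * (b->y - py)) / area;
    double wb = ((c->x - px) * (a->y - py) - (a->x - px) * (c->y - py)) / area;
    double wc = 1.0 - wa - wb;
    for (int k = 0; k < 3; k++)
        out[k] = wa * a->rgb[k] + wb * b->rgb[k] + wc * c->rgb[k];
}
```

At a vertex the result is that vertex's color, and at the centroid it is the average of the three.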

If you have a color image and need to get a gray scale image,

the standard conversion is to set each of R, G and B to the value

0.299 * R + 0.587 * G + 0.114 * B

Remember 1.0 is white and 0.0 is black. When R equals G equals B then

you have a shade of gray.
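Applied to an 8-bit RGB image, the conversion writes the same luminance value into all three components. A minimal sketch with a hypothetical function name, rounding to the nearest integer:

```c
#include <stddef.h>
#include <stdint.h>

/* In place: replace each RGB pixel (3 bytes) by its gray level,
   0.299 R + 0.587 G + 0.114 B, stored in R, G and B alike. */
void to_gray(uint8_t *rgb, size_t npix) {
    for (size_t i = 0; i < npix; i++) {
        uint8_t *p = rgb + 3 * i;
        double y = 0.299 * p[0] + 0.587 * p[1] + 0.114 * p[2];
        uint8_t g = (uint8_t)(y + 0.5);
        p[0] = p[1] = p[2] = g;
    }
}
```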

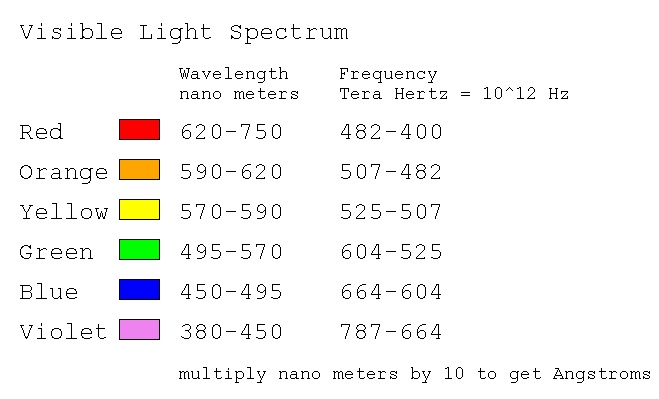

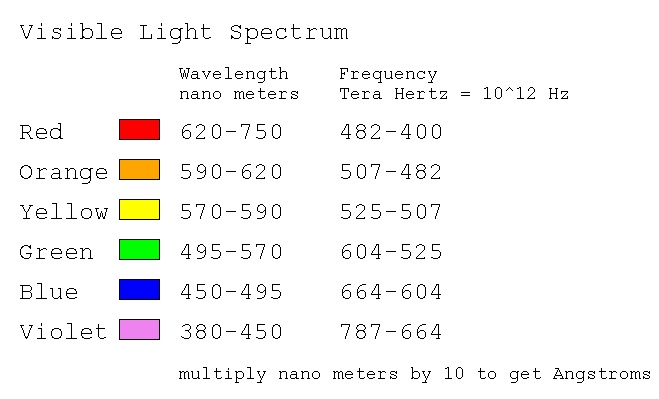

The "visible" spectrum, that which can be seen by average people,

is roughly given by wavelength in nm = nanometer or 10^(-9) meter.


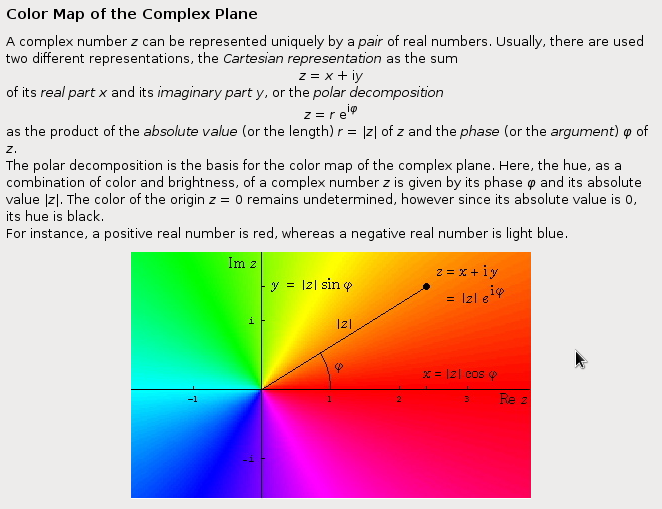

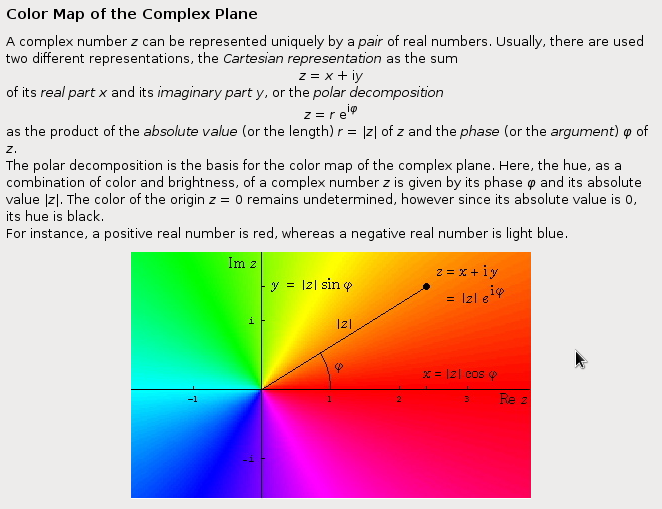

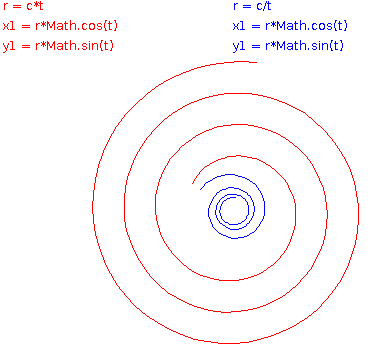

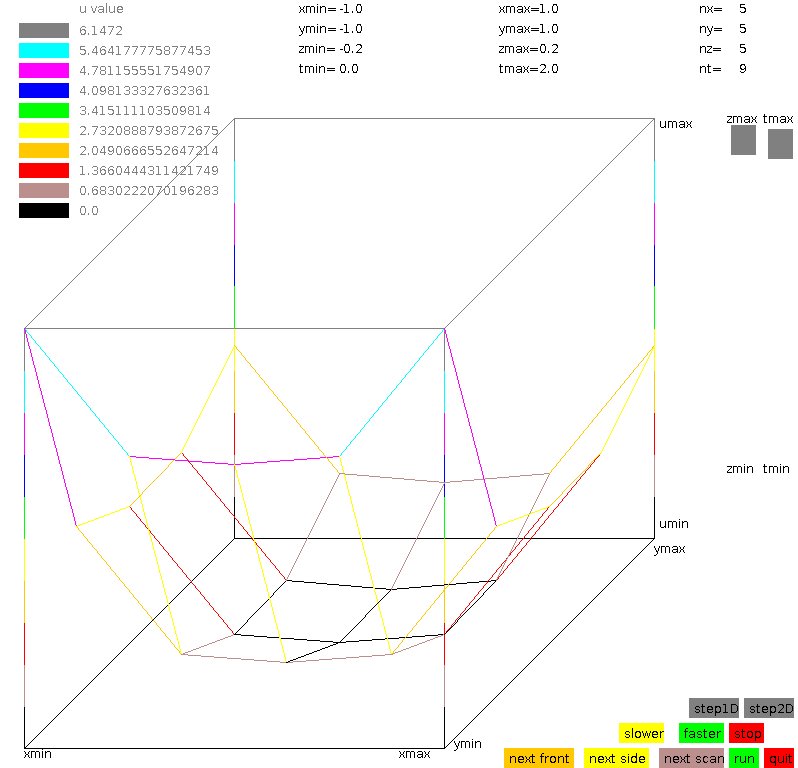

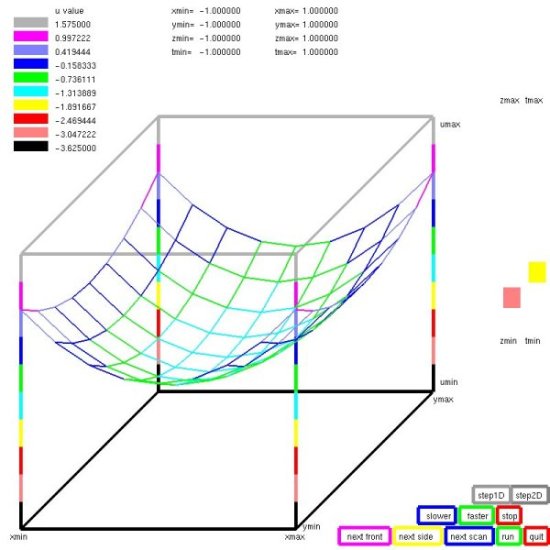

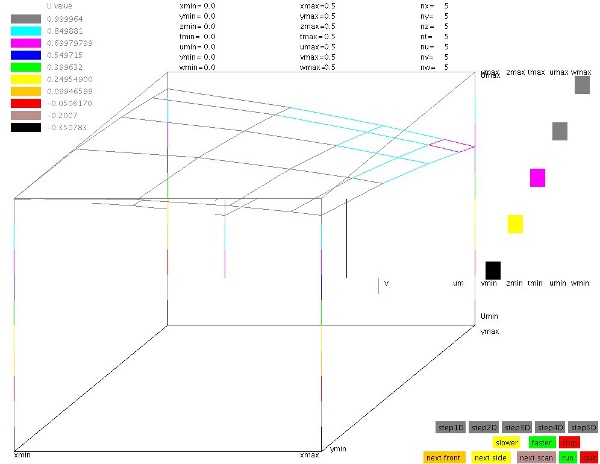

Color can be used in place of numeric data. This may require

the observer to have some understanding of the color coding.

Here is one sample of representing complex numbers with color:


Notice that the colors are close to the color wheels above

in angle phi. I would have made the intensity change as

a function of |z|.

The RGB color space is called an "additive color space."

The CMYK, Cyan, Magenta, Yellow, black, color space is used for

printing and is called a "subtractive color space."

An approximate conversion, because every ink is unique, is

C1 = 1.0 - R

M1 = 1.0 - G

Y1 = 1.0 - B

K = min(C1, M1, Y1)

C = C1 - K

M = M1 - K

Y = Y1 - K
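The conversion as a C function, with components in 0.0 to 1.0. It keeps the simple form given here; a commonly quoted variant also divides C, M and Y by (1 - K), and real ink profiles differ further.

```c
/* Approximate RGB -> CMYK: complement, then pull the common
   gray part out into black (K). */
void rgb_to_cmyk(double r, double g, double b,
                 double *c, double *m, double *y, double *k) {
    double c1 = 1.0 - r, m1 = 1.0 - g, y1 = 1.0 - b;
    double kk = c1;                    /* K = min(C1, M1, Y1) */
    if (m1 < kk) kk = m1;
    if (y1 < kk) kk = y1;
    *c = c1 - kk;
    *m = m1 - kk;
    *y = y1 - kk;
    *k = kk;
}
```

Pure red becomes magenta plus yellow ink with no black, and pure black uses only the K ink.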

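TV uses a YIQ (luminance, in-phase, quadrature) color space.

The matrix conversion is

$$
\begin{pmatrix} Y \\ I \\ Q \end{pmatrix} =
\begin{pmatrix}
0.299 & 0.587 & 0.114 \\
0.596 & -0.275 & -0.321 \\
0.212 & -0.523 & 0.311
\end{pmatrix}
\begin{pmatrix} R \\ G \\ B \end{pmatrix}
$$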

Notice that Y, luminance, is the gray scale formula, for black and

white TV. The IQ provide the color for color TV.

The CMYK and YIQ are smaller color spaces than RGB; some RGB

combinations are not representable.
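The YIQ conversion is a 3x3 matrix multiply. This sketch uses the commonly published coefficients, with Q row 0.212, -0.523, 0.311. The I and Q rows each sum to zero, so white (R = G = B = 1) gives Y = 1 and I = Q = 0, since a gray has no color.

```c
/* RGB (0.0 .. 1.0) to YIQ by matrix multiplication. */
void rgb_to_yiq(const double rgb[3], double yiq[3]) {
    static const double M[3][3] = {
        {0.299,  0.587,  0.114},   /* Y: luminance (gray scale) */
        {0.596, -0.275, -0.321},   /* I: in-phase */
        {0.212, -0.523,  0.311},   /* Q: quadrature */
    };
    for (int i = 0; i < 3; i++)
        yiq[i] = M[i][0] * rgb[0] + M[i][1] * rgb[1] + M[i][2] * rgb[2];
}
```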


Sound and action

Action and music enhance interest

Composition may add beauty

For adding sound into your project, search Google.

A sample for java is ClipPlayer.java

and driver program ClipPlayerTest.java .

Record your own sound clips with a microphone and possibly

freely downloadable software. Also, Python and other tool kits:

sound.py plays sound files, needs WX

rocky4.wav test file

kirk.wav test file

ok.wav test file

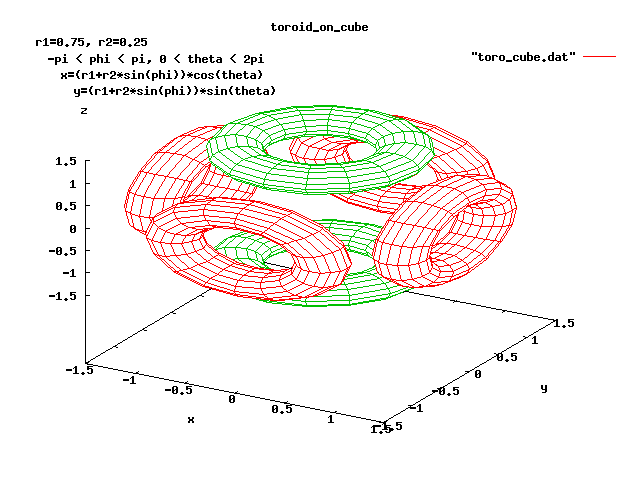

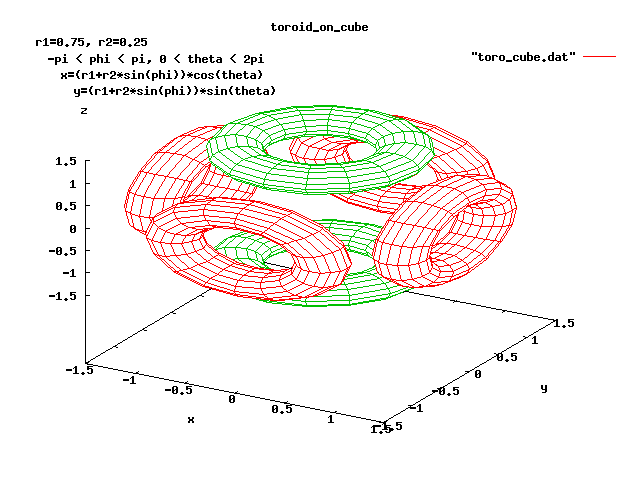

Using copies of your work

Once you have a shape you like, you may make copies.

toro_cube.c uses gnuplot and gimp

toro_cube.sh run gnuplot

toro_cube.plot run gnuplot control

toro_cube_c.out: toro_cube.c # makefile

gcc -o toro_cube toro_cube.c -lm

./toro_cube > toro_cube_c.out

rm -f toro_cube

./toro_cube.sh # on toro_cube.dat using gnuplot

./gimp toro_cube.png

w3 sample code

W3frame.java source code

after Press to change color


w3tk.py3 source code


tkmouse.py3 source code


Motion can be useful and impressive.

If your program must do a lot of computation for each movement,

you will need to "double buffer". With double buffering your

program is building the next screen in RAM while the previous

screen is seen by the user. Then the buffers are swapped and the

user sees the new screen and your program builds the next

screen in the other RAM buffer.
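The idea can be sketched without any graphics library: two pixel buffers, one shown while the other is drawn, exchanged by swapping an index rather than copying pixels. The names and the tiny 4x3 size are hypothetical; in GLUT the equivalent is requesting `GLUT_DOUBLE` in `glutInitDisplayMode` and calling `glutSwapBuffers()` after drawing each frame.

```c
#include <stdint.h>
#include <string.h>

#define W 4
#define H 3

typedef struct {
    uint32_t pixels[2][W * H];
    int front;                  /* index of the buffer being displayed */
} DoubleBuffer;

/* The buffer the program draws the next frame into. */
uint32_t *back_buffer(DoubleBuffer *db) { return db->pixels[1 - db->front]; }

/* The buffer the user currently sees. */
const uint32_t *front_buffer(const DoubleBuffer *db) { return db->pixels[db->front]; }

/* After the next frame is complete, exchange the roles of the buffers. */
void swap_buffers(DoubleBuffer *db) { db->front = 1 - db->front; }

/* Example frame: clear the back buffer and light one moving pixel. */
void draw_frame(DoubleBuffer *db, int t) {
    uint32_t *p = back_buffer(db);
    memset(p, 0, sizeof db->pixels[0]);
    p[t % (W * H)] = 0xFF0000FFu;       /* red, opaque (RGBA) */
}
```

The user never sees a half-drawn frame, because drawing only touches the back buffer until the swap.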

Examples to be demonstrated:

2D

single_double.c - buffers in OpenGL (motion)

(also multiple windows)

You may see flicker during redraw if not double buffered.

3D

split_cube.c - speed control (motion)

split_cube6.c - speed control (motion)

robot2.c - connected limbs movement (manual motion, mouse vs key)

robot3.c - connected limbs movement (data driven motion)

robot3 robot3.dat

pilot.c - game, exercise (motion)

planets.c - education, more on lighting later (motion)

SphereMotion.java - moving 3D lights (motion)

trackball.c - user control of view

skyfly - game, training, demo (motion)

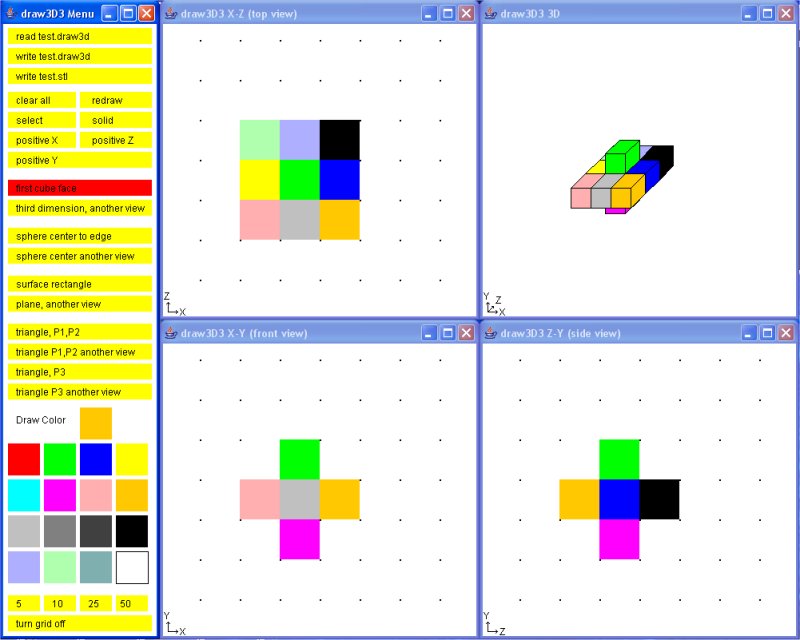

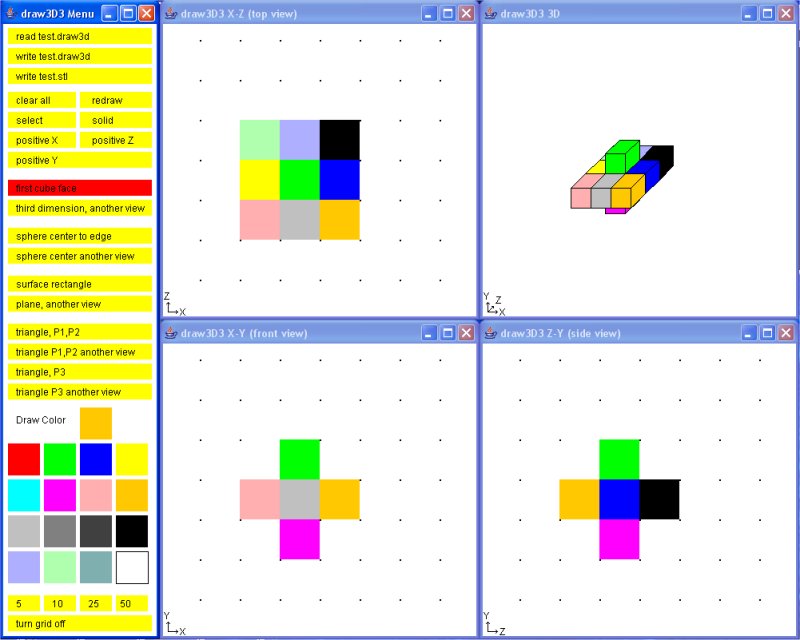

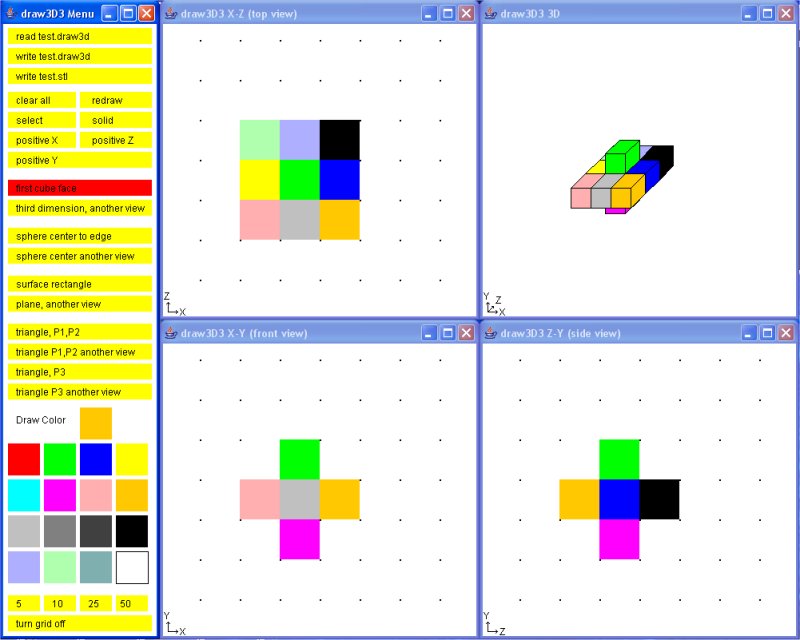

draw3D1.java - evolving 3D data entry (multiple windows)

threads, manual menu

draw3D2.java - evolving

solid and wireframe, flipping, read/write

draw3D3.java - evolving

test.draw3d test data

RunThread.java Four windows, possible multi core

four_windows.c - display multiple windows in OpenGL four_windows.gif

Techniques for developing interactive graphics applications

robot.c I considered not much to talk about. robot.jpg

dynamic.c, a follow-on in some way of robot.c, was hard to read. dynamic.jpg

robot2.c was an interesting exercise for me to develop. robot2.jpg

My approach was to copy dynamic.c to robot2.c and make the following

changes, in order, compiling (fixing) and running (fixing) each change.

I could not tell the lower leg from the upper leg, thus I changed the

colors for various body parts. Since this was a 'lighting' scene,

it was a matter of changing the emitted light to white and covering

the various limbs with material of various colors.

Now that I could see the motion better, I wanted to make the robot

bend, not just turn. Yuk! The code used numbers, 1, 2, 3 ... rather

than named numbers for the angles. Thus I went through and changed

all references, menu, angle[?] and a few others to names, #define's.

This really helped me understand the code because I had to look

at every section.
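The change from bare numbers to names can be sketched like this. The joint names, `angle[]` and `step` are hypothetical, chosen to echo the description rather than copied from robot2.c; an enum gives the same effect as a list of #define's, and its last entry keeps the joint count up to date automatically.

```c
/* Named indices instead of angle[1], angle[2], ... */
enum Joint {
    HEAD_TURN, HEAD_BEND, TORSO_TURN, TORSO_BEND,
    LEFT_HIP, LEFT_KNEE, RIGHT_HIP, RIGHT_KNEE,
    NUM_JOINTS                      /* always last: number of joints */
};

static double angle[NUM_JOINTS];    /* degrees, indexed by Joint */
static double step = 5.0;           /* motion per click, menu adjustable */

/* One menu or key action: move a joint by +step or -step. */
void move_joint(enum Joint j, int direction) {
    angle[j] += direction * step;
}

/* Menu items "more motion per click" / "less motion per click". */
void scale_step(double factor) { step *= factor; }
```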

With menu and angles and rotations named, it was easy to add two

menu items, one to increase motion per click, another to decrease

motion per click.

Now it was easy to add bend to the torso because I had seen that

the head could both rotate and bend, just cut-and-paste with some

name changing.

When I lifted both legs, the robot did not lower itself, unreal.

Thus I added keyboard function for 'x', 'X', 'y' and 'Y' so the

robot could be moved.

Future ideas are to "fix" the upper limbs (shoulder and hip) to both

rotate up and down and sideways like real limbs. Then add "hands"

with some kind of grip. Texture map the face. Change cylinders

to ellipsoids. Be able to read and save a script of a sequence

of motions. Oh! But if I did that, students could not use it

as a project.

P.S. somewhere along the way I added + and - so the "repeat" function

of the keyboard would do what the mouse clicks would do, only faster.

Thus a 'move' function came about, which now should be stripped

of the cases and all of it executed every time.

robot2.c is an example of why there are many lines in an

interactive program. Much code is repeated yet is not suitable

for putting in loops. I expect this program would become more

unreadable and unmaintainable using loops.

A possible project is to implement a "record" mode where a user

moves the robot's limbs to make the robot walk, run, dance, jump etc.

Then a "play" mode where the robot performs the recorded motions.

robot3.c Then, finally, it was time to add data driven motion.

A typical data structure for each move might have:

sequence number

delta time for move

mode (just move, interpolate, repeat sequence)

x coordinate

y coordinate

z coordinate

number of joints to move

joint angle

joint angle

...

or an optional repeat sequence

sequence number

delta time for move

mode repeat sequence

from sequence number

to sequence number

robot3.dat is my first implementation

If the "record" kept an ASCII text file, the user could edit

the action and potentially have a computer program generate

the motions.
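One way to hold a move in memory and read it back from an editable ASCII line is sketched below. The line layout (sequence, delta time, mode letter, x, y, z, number of joints, then the joint angles; or sequence, delta time, R, from, to for a repeat) and the mode letters M, I and R are a hypothetical format that follows the field list above. It is not necessarily the layout of robot3.dat.

```c
#include <stdio.h>

#define MAX_JOINTS 16

typedef struct {
    int seq;                  /* sequence number */
    double dt;                /* delta time for move, seconds */
    char mode;                /* 'M' move, 'I' interpolate, 'R' repeat */
    double x, y, z;           /* position (unused for 'R') */
    int njoints;              /* number of joint angles that follow */
    double joint[MAX_JOINTS];
    int from_seq, to_seq;     /* only for 'R' */
} Move;

/* Parse one line, e.g. "3 0.5 M 0 0 1 2 45 -30" or "9 0 R 1 8".
   Returns 1 on success, 0 on a malformed line. */
int parse_move(const char *line, Move *mv) {
    int used = 0;
    if (sscanf(line, "%d %lf %c%n", &mv->seq, &mv->dt, &mv->mode, &used) != 3)
        return 0;
    line += used;
    if (mv->mode == 'R')
        return sscanf(line, "%d %d", &mv->from_seq, &mv->to_seq) == 2;
    if (sscanf(line, "%lf %lf %lf %d%n", &mv->x, &mv->y, &mv->z,
               &mv->njoints, &used) != 4)
        return 0;
    if (mv->njoints < 0 || mv->njoints > MAX_JOINTS) return 0;
    line += used;
    for (int i = 0; i < mv->njoints; i++) {
        if (sscanf(line, "%lf%n", &mv->joint[i], &used) != 1) return 0;
        line += used;
    }
    return 1;
}
```

Because each move is one plain text line, the user can edit a recording in any text editor, and another program could generate the lines.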

User interface buttons similar to those found on VCR or DVD

recorders would seem appropriate.

The robot could be replaced by a more human figure, an animal

or some pseudo figure like a car, truck or machine that could

do uncharacteristic actions, e.g. cartoon characters.

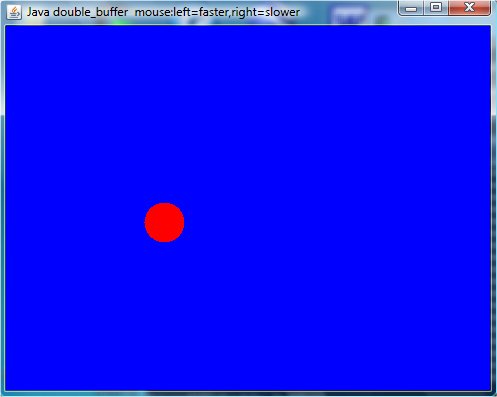

Double buffering in Java takes some effort. The code below

shows a reasonably small example that could be copied if your

project is in Java and has any fast moving objects.

double_buffer.java

Compile and run this program, click left mouse many times to

get a very fast moving red ball.

An application of the above double_buffer.java is Springdb.java. Compare it to the basic Spring.java.

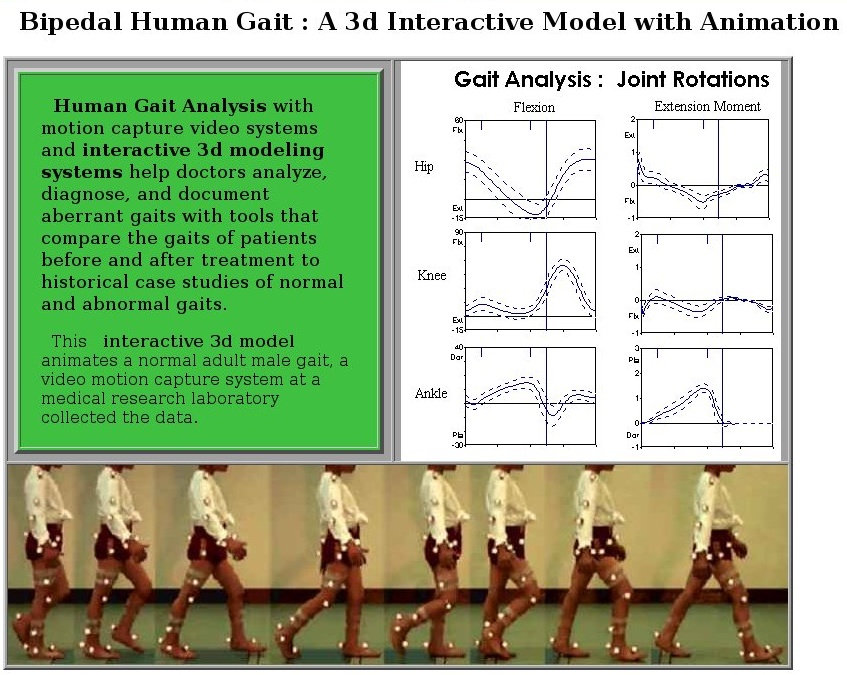

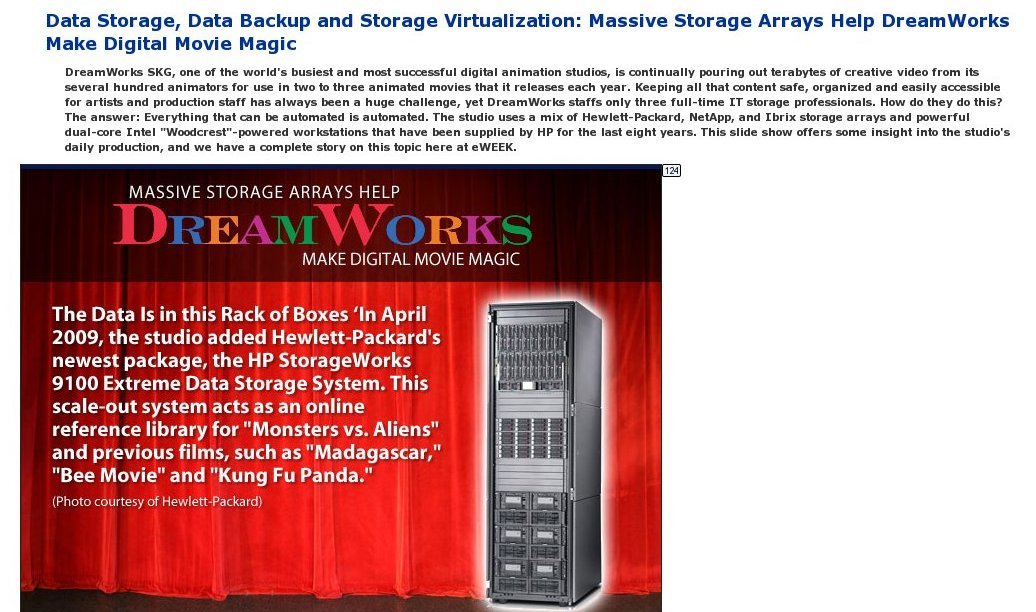

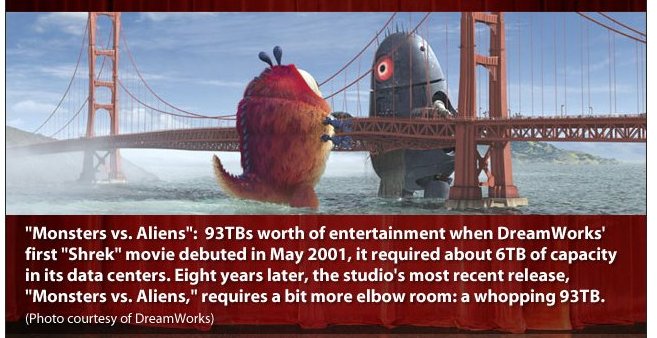

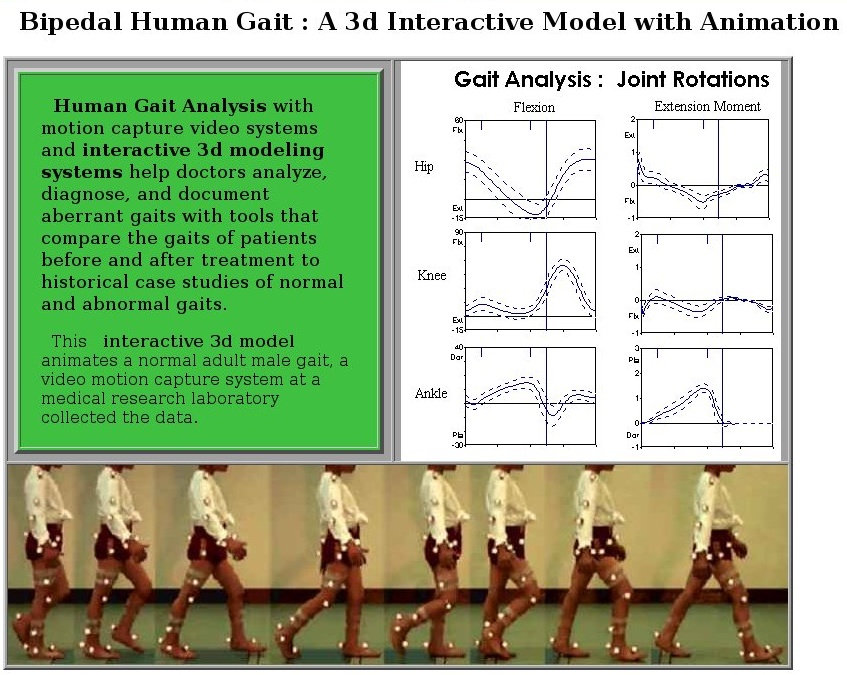

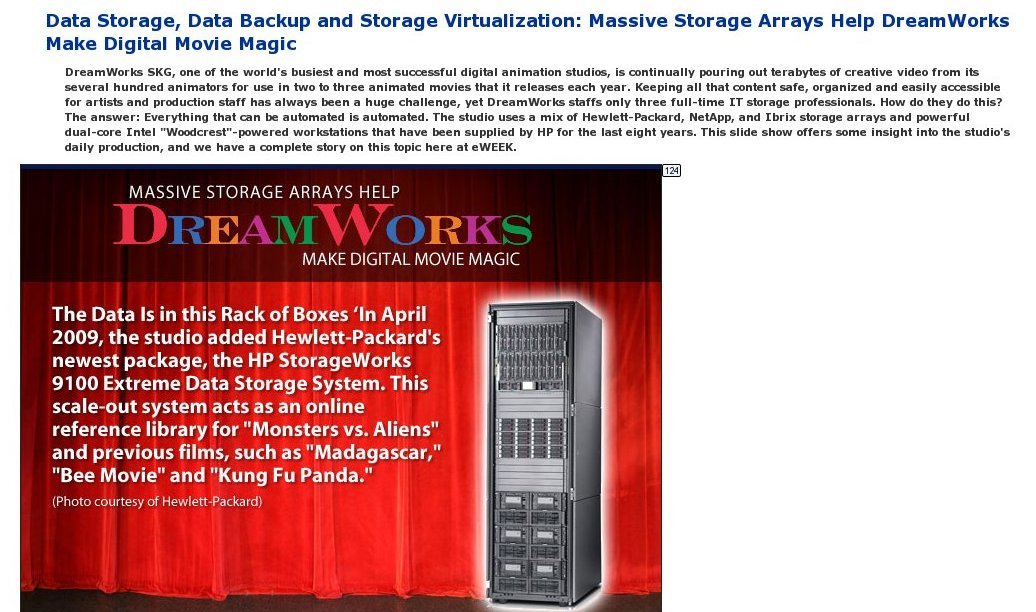

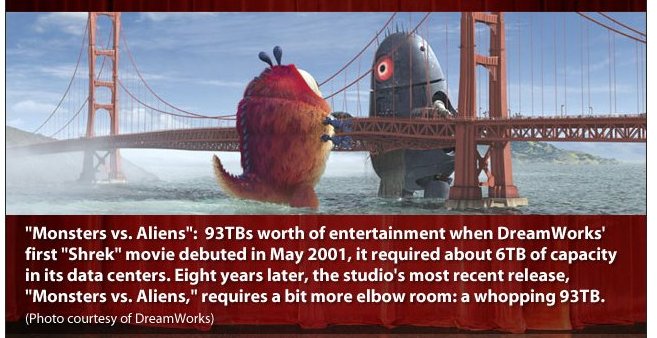

Professional movie makers use sophisticated software that has many motions preprogrammed. A technique for getting realistic motion is to dress a person in clothing that has colored dots placed at "control points" on the body. The person is then recorded doing the desired actions, the coordinates of the dots are extracted at each time step, and the coordinates are entered into a data file for future use in animating figures.

Computer-animated movies made with such professional software include "Toy Story", "Madagascar", "Over the Hedge", "The Tale of Despereaux" and "Bolt", to name just a few.

Many "trailers" are online for viewing:

www.apple.com/trailers/disney/the_incredibles/trailer2_large.html

www.apple.com/trailers/disney

www.apple.com/trailers/dreamworks

The "Menu Bar" with drop-down menus is the most common menu style today. You could write your own menus, yet you will probably want to use the large amount of code provided by a GUI tool kit.

This lecture will cover the details often hidden by most GUI tool kits. You may need to understand how menus are created in case you have to work around a bug or problem in the tool kit you are using.

The richest toolkit for menus is Motif (Linux/Unix/macOS).

Close behind are the proprietary Microsoft Windows C++ classes.

Next comes Java Swing/Swing2.

The weakest we will look at is OpenGL with GLUT, yet it becomes strong when combined with FLTK, the Fast Light ToolKit, from www.fltk.org.

De facto standardization makes some design issues obvious: "File" is on the left of the "Menu Bar", and "Help" is on the right, or rightmost, of the "Menu Bar".

Using de facto standard names helps average users. Using names that mean something to you is best for an application you write for your own use.

Example programs to be covered are:

In X Windows using Motif, observe windows, widgets and buttons being created, callbacks assigned, and functions to handle the callbacks. The executable is w4.

w4a.c

In OpenGL with GLUT, a mouse click is used to pop up the menu. Note that GLUT requires submenus to be created before the main menu, the opposite order from Motif or Java.

w4gl.c

I was not able to capture a screenshot with the menu open.

In Java using Swing, observe menu creation, then menu item creation, action listeners, and functions to handle actions.

W4frame.java

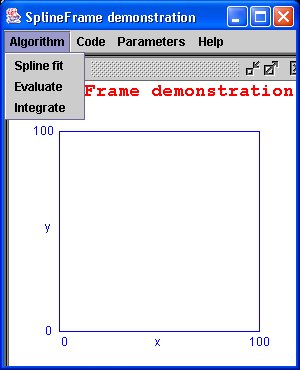

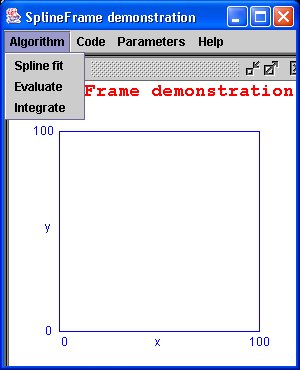

For the more complex example, the Spline Tutorial, download and compile the Java files listed under "Spline example in Java" (note the package myjava; and import myjava.*; lines, and fix them to suit).

I have this in my 'myjava' directory and use java myjava.SplineFrame

In Python using tk, a simple menu and callbacks.

w4tk.py3

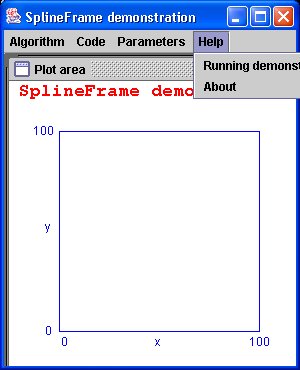

Note that other windows are opened for tutorial information.

The 'parameter' pull down menu uses radio buttons.

The mouse is used for both menu selection and graphics.

In Python using tk, a rotating dot; it must be run to be observed. This is an example of moving an object.

w5tk.py3 source code

Spline example in Java, reading files and math

Spline.java

SplineFrame.java

Then you need the *.txt files that are read at execution time:

SplineHelp.txt

SplineAbout.txt

SplineAlgorithm.txt

SplineEvaluate.txt

SplineIntegrate.txt

Clicking on menu bar 'Algorithm' (no 'File' items needed)

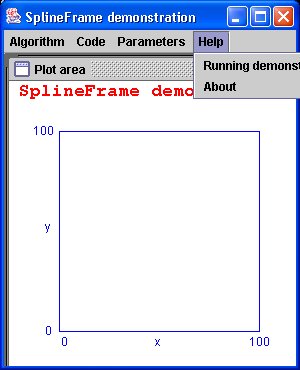

Clicking on menu bar 'Help' (note that the pull-down menu can go outside the main window in Java).

To run the demo, I put my flash drive in the USB port and enter these commands:

F:

setup # runs setup.bat to set path and classpath

cd myjava # where this demo is located

java myjava.SplineFrame # I used 'package'

The lecture outline was:

- Show demos.
- Quickly survey the code.
- Explain how the menu bar and other features are created.
- Explain how menus are created in menu bars.
- Explain how menu items are created in menus.
- Explain how callbacks are coded to act when a menu item is selected.
- Show where to put code that responds to a menu item selection:
  - in-line code, if short and simple;
  - a function call, for long or complex actions.
- Very repetitive, much to remember: copy, cut and paste to suit.

HW2 is assigned
