##Chapter 13 - Cloud Computing

###Introduction

Cloud computing brings together many of the distributed-systems issues we have covered in this book, such as web services, virtualization, and other implementation details. It can be broadly defined as the delivery of shared, metered services over the Internet. The idea is that instead of developing and deploying their own information systems, companies contract with a service provider to do so. In this way they get the following benefits:

- A high speed of deployment according to the service model (discussed below).

- Lower cost, since there is no up-front capital investment and resource use is metered by the provider (like your water bill).

- Providers are expected to keep up with fast changing technologies.

- Elastic capacity is inherent in the provider's infrastructure, so an organization that needs little capacity most of the year but a lot at Christmas pays only for what it uses.

The largest disadvantage is the loss of control over one's infrastructure, since it is outsourced to the service provider. Other disadvantages include vendor lock-in and vendor outages. The name cloud computing comes from the long-standing practice of drawing a cloud to represent a network when you do not want to get into any details about how the network is configured. For example, in Figure 13.1, we are interested in the client and server but not the network.

Figure 13.1. The cloud.

####Server Virtualization

Server virtualization is a method of running multiple independent virtual operating systems on a single physical computer. It allows better use of physical hardware and dynamic scalability, since virtual servers can be created or deleted much like files. Most server-class hardware is under-used, so co-locating multiple servers on one physical box improves utilization. Recall that a server is a process on a computer that listens for and responds to requests, and that most computers are multi-processing machines. Informal terminology often refers to a server as the box, but that is not strictly correct. For example, an organization might replace 10 physical server boxes with 2 physical boxes and run all 10 virtual servers on those 2 boxes. Administration is improved since bringing up another server can be accomplished by creating another virtual server and load-balancing it in software. Virtual servers are implemented in software, so they are much like files: you can copy a virtual machine to create another one, and you can back up a server at an exact point in processing by saving it. There are four types of virtual machines (VMs):

- Application-level VMs

- Operating System-level VMs

- Full-virtualization VMs

- Para-virtualization VMs

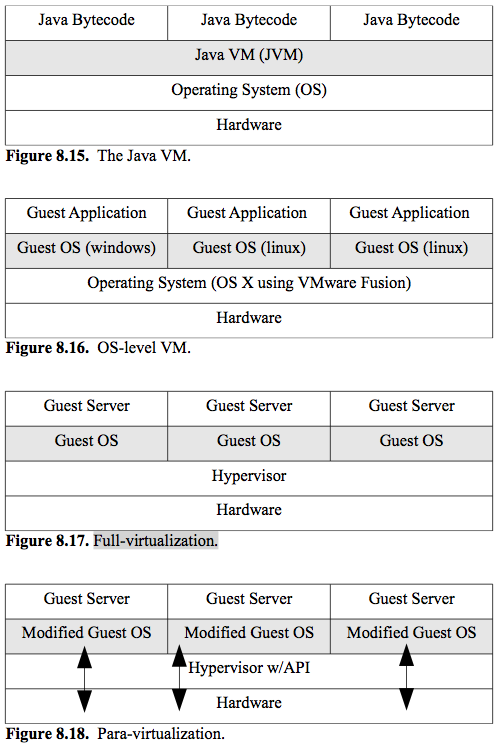

An application-level VM virtualizes the running computer program separately from the machine code that a typical compiler would produce.

The most common example of application virtualization is Java. For comparison, a C language compiler takes a program written in C and compiles it to machine code for a particular operating system and chip set. After the program is compiled, it will only run on the machine type it was compiled for. Java compilers, by contrast, produce bytecode instead of machine code. The bytecode program runs only on a VM created for Java, so once a Java VM exists for a machine type, that machine can run the bytecode. This makes compiled Java programs portable - they can run on any Java VM on any platform. Figure 8.15 shows the structure of the Java VM.
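The idea of running portable bytecode on a VM can be illustrated with a minimal sketch. The three-instruction set below (`PUSH`, `ADD`, `MUL`) is invented for this illustration and is not real Java bytecode; the point is only that the same "compiled" program runs wherever the interpreter runs.

```python
# A toy stack-based virtual machine. The opcodes are hypothetical and
# chosen only to illustrate how a VM interprets bytecode.

def run(bytecode):
    """Interpret a list of (opcode, operand) pairs and return the final stack top."""
    stack = []
    for op, arg in bytecode:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()

# "Compiled" form of the expression (2 + 3) * 4.
program = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
print(run(program))  # 20
```

The `program` list plays the role of the bytecode file: it contains no machine-specific instructions, so only the interpreter has to be ported to a new platform.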

Operating system-level VMs allow a host OS to run multiple virtual guest OSs. This is commonly used for desktop virtualization, where the usual motivation is to run an application written for another OS without needing another computer. For example, I have a Macintosh but sometimes need to run Windows. Figure 8.16 shows the OS-level VM.

Full virtualization is the first of these VM technologies suited to servers. It introduces the concept of a hypervisor, which acts as an interface between the hardware and all guest OSs. One can think of the hypervisor as a very thin OS that is optimized for hosting guest VMs. This is the technology used in current server-class VM software, and it is much more efficient than OS-level VMs. Figure 8.17 shows full-virtualization.

Para-virtualization is a variant of full-virtualization that requires the guest OSs to be modified in minor ways so that they run more efficiently on the hypervisor. The hypervisor offers an API that allows guest OSs to directly access the hardware, which can lead to performance increases. An example of a para-virtualization product is the open-source Xen project. Figure 8.18 shows para-virtualization.

Virtualization can also be used to abstract logical storage from physical storage on disk, as we will see later in this chapter. It is also a major enabler of cloud computing, which the rest of this chapter covers.
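The consolidation example from earlier in this section (10 under-used servers moved onto 2 physical boxes) can be sketched as a simple packing problem. This is a minimal sketch, assuming each virtual server's load is known as a percentage of one box's CPU; the load figures and the first-fit-decreasing heuristic are assumptions for illustration, not a description of how any particular hypervisor places VMs.

```python
def consolidate(vm_loads, host_capacity):
    """Place each VM on the first host with room, largest VMs first."""
    hosts = []  # each host is a list of the VM loads placed on it
    for load in sorted(vm_loads, reverse=True):
        if load > host_capacity:
            raise ValueError("VM does not fit on any host")
        for host in hosts:
            if sum(host) + load <= host_capacity:
                host.append(load)
                break
        else:
            # No existing host had room, so bring up another physical box.
            hosts.append([load])
    return hosts

# Hypothetical CPU use (percent of one box) for ten lightly loaded servers.
vm_loads = [15, 10, 20, 25, 10, 15, 20, 30, 25, 20]
hosts = consolidate(vm_loads, host_capacity=100)
print(len(hosts))  # 2
```

With these numbers the ten servers use 190 percent of one box in total, so two boxes are the minimum and the heuristic reaches it.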

####Service Models for Cloud Computing

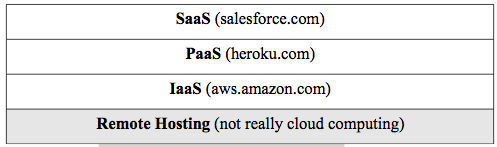

There are three basic service models for cloud computing:

- Software as a service (SaaS)

- Platform as a service (PaaS)

- Infrastructure as a service (IaaS)


SaaS offers the highest speed of deployment and the least control. One simply buys the entire service from the provider on a subscription basis. The customer typically accesses the application through a web browser and pays the monthly bill. An example of such a service is salesforce.com. Their slogan is "No hardware. No software. No headaches." Their application offers all the services that a sales manager and sales team would need and can eliminate the need for an in-house IT department, especially for small to medium-sized businesses.

The use is metered and the providers can adapt to the elastic demand by using virtualization to rapidly bring up resources to meet demand peaks.

Furthermore, a US-wide or world-wide company can take advantage of the CDN services that cloud computing providers have.

PaaS allows customers to create their own applications using the provider's development framework. For example, a company would develop its own software but would be restricted to a specific framework for which the provider had optimized its infrastructure. Examples include Heroku.com or Engineyard.com for Ruby on Rails and Google App Engine (http://code.google.com/appengine) for Java and Python frameworks. Users do not have to worry about how to configure and provision the application runtimes, work that takes time away from development-related tasks, and it is expensive to have teams devoted solely to deployment issues.

IaaS offers the most control. Customers have control over deployed instances. For example, they would control the operating system of a particular virtual machine deployed on shared hardware in the provider's infrastructure. The customer can still take advantage of elastic demand by ordering new instances of virtual servers. Amazon offers these kinds of services (http://aws.amazon.com/). For example, the Amazon Elastic Compute Cloud (EC2) web site states:

"Amazon EC2's simple web service interface allows you to obtain and configure capacity with minimal friction. It provides you with complete control of your computing resources and lets you run on Amazon's proven computing environment. Amazon EC2 reduces the time required to obtain and boot new server instances to minutes, allowing you to quickly scale capacity, both up and down, as your computing requirements change.

Amazon EC2 changes the economics of computing by allowing you to pay only for capacity that you actually use."

Since the servers are virtualized, they can be created, provisioned, and load balanced dynamically. Recall from our discussion of virtualization that server images can be copied like files. This is an extension of the older (non-cloud) practice of remote hosting, in which the customer controls an actual physical server machine connected to the provider's network infrastructure and the hardware is not shared.
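Elastic provisioning of virtual servers can be sketched as a small calculation. This is a minimal sketch, assuming load is measured in requests per second and each instance handles a fixed number of them; the function name, the capacity of 200 requests per second, and the load figures are all hypothetical, and no provider API is involved.

```python
import math

def instances_needed(requests_per_second, capacity_per_instance, minimum=1):
    """Return how many virtual servers are required to carry the given load."""
    return max(minimum, math.ceil(requests_per_second / capacity_per_instance))

# Hypothetical load samples, one per hour.
hourly_load = [120, 80, 450, 900, 300]
plan = [instances_needed(load, capacity_per_instance=200) for load in hourly_load]
print(plan)  # [1, 1, 3, 5, 2]
```

The customer pays for 12 instance-hours in this example instead of running 5 instances for all 5 hours, which is the point of scaling "both up and down" in the quotation above.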

Amazon has virtualized the storage for EC2 instances with the Amazon Elastic Block Store (EBS), which gives customers block-level storage for EC2 instances. The customer can create volumes from 1 GB to 1 TB and use as many volumes as needed. These volumes can be used like any hard drive.

Storage can also be outsourced to a cloud. For example, Amazon Simple Storage Service (S3) allows customers with their own private servers to write, read, and delete objects containing from 1 byte to 5 terabytes of data each over the Internet. The number of objects you can store is unlimited, and storage is metered by use. Many customers use this for elastic backup capacity.
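The write, read, and delete operations on objects described above can be modeled with a toy in-memory store. This is only a sketch of the object-storage idea: the class and method names are hypothetical and are not the S3 API, and treating 5 terabytes as 5 * 10**12 bytes is an assumption.

```python
class ObjectStore:
    """A toy in-memory object store with a usage meter (not a real cloud API)."""

    MAX_OBJECT_BYTES = 5 * 10**12  # upper bound quoted in the text, assumed decimal

    def __init__(self):
        self._objects = {}  # maps a key (name) to the object's bytes

    def put(self, key, data):
        if not 1 <= len(data) <= self.MAX_OBJECT_BYTES:
            raise ValueError("object size must be between 1 byte and 5 terabytes")
        self._objects[key] = data

    def get(self, key):
        return self._objects[key]

    def delete(self, key):
        self._objects.pop(key, None)

    def bytes_stored(self):
        """The quantity a provider would meter for billing."""
        return sum(len(data) for data in self._objects.values())

store = ObjectStore()
store.put("backups/monday.tar", b"x" * 1000)
store.put("backups/tuesday.tar", b"y" * 2500)
print(store.bytes_stored())  # 3500
store.delete("backups/monday.tar")
print(store.bytes_stored())  # 2500
```

Objects are addressed by key rather than by block or file path, which is what distinguishes this style of storage from the EBS volumes described earlier.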

Figure 13.2 shows the relationship between these service models, from the least control (top) to the most control (bottom).

Figure 13.2. Service models for cloud computing.

The largest disadvantages of cloud computing are the loss of control over infrastructure and concerns about security. This has led to several deployment models, some of which relax the shared, multi-tenancy requirement of cloud computing:

- Public clouds

- Private clouds

- Hybrid clouds

Public clouds have the definition we have already given. Private clouds are created for just one organization in response to concerns about flexibility and security. Organizations get some of the benefits of cloud computing by offering a cloud computing service interface internally but, of course, get none of the benefits related to shared use. Many experts do not consider this to be cloud computing at all.

A hybrid cloud combines private information systems with true cloud computing. Organizations move less sensitive applications to the cloud and build interfaces between the cloud-based applications and their own. For example, a company may use salesforce.com to outsource its sales and customer-management system while keeping other systems in-house. Another model for hybrid clouds is cloud bursting. In cloud bursting, service providers offer rapid provisioning of virtual machines, and companies distribute (load balance) their load across their private cloud and the contracted public cloud. This is usually used to meet increased computing demand at peak loads.
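The cloud bursting idea can be sketched in a few lines. This is a minimal sketch, assuming load and capacity are measured in the same abstract units and that the private data center is always filled first; the function name and the numbers are hypothetical.

```python
def burst(load, private_capacity):
    """Split a load between the private data center and the public cloud."""
    private = min(load, private_capacity)
    public = load - private  # only the overflow goes to the contracted provider
    return private, public

PRIVATE_CAPACITY = 100  # assumed capacity of the organization's own servers

for load in [60, 100, 180]:
    private, public = burst(load, PRIVATE_CAPACITY)
    print(load, private, public)
# 60 60 0
# 100 100 0
# 180 100 80
```

Only the third case, where demand exceeds private capacity, incurs any public cloud cost.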

###The Economics of Cloud Computing

This section uses figures from the paper "Above the Clouds" by Armbrust et al.; the reference is listed in the Chapter 13 references in the syllabus.

There are two important issues:

- Shifting the risk of investment to the Cloud provider.

- Infrastructure cost can be lower.

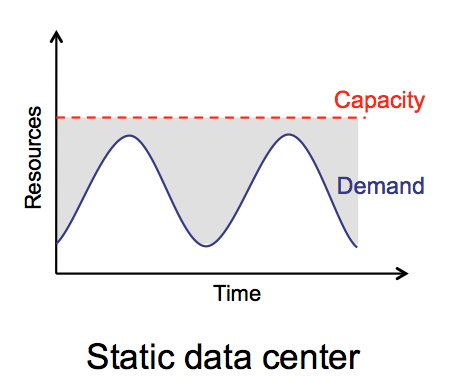

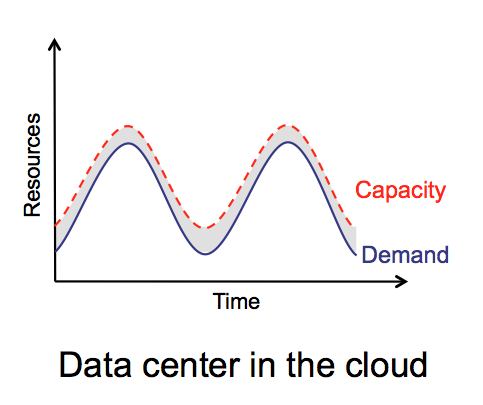

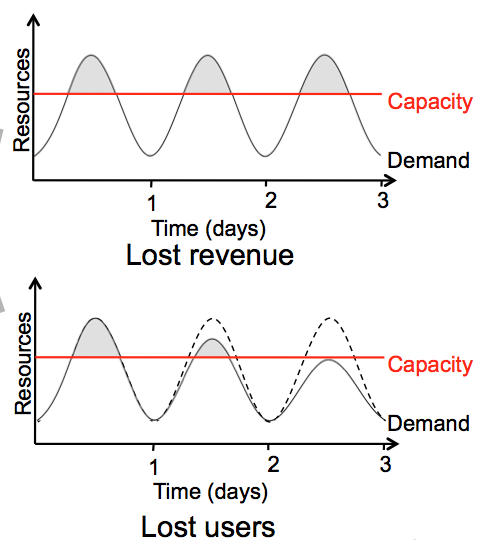

Elastic demand can be difficult to handle for organizations with their own data centers. They usually over-provision with excess capacity to avoid losing revenue or users due to bad service. Figures 13.3 through 13.5 show this.

Infrastructure costs are considerable. Consider buying all the hardware and network connections. Then add highly technical personnel. And finally consider building space and heating/cooling. Furthermore, hardware changes very quickly and one has to make constant investments to keep up with the state of the art. Cloud providers can usually offer all this at a lower cost than an organization could achieve by building it itself, due to economies of scale. Cloud providers spread their investment over many organizational customers that share the infrastructure.

Figure 13.3. Over-provisioning costs too much.

Figure 13.4. Cloud computing can have elastic capacity.

Figure 13.5. Under-provisioning can lose revenue or users.
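The trade-off shown in Figures 13.3 through 13.5 can be worked through with a small calculation. This is a sketch under stated assumptions: the demand series is invented, and the cost per server per period is taken to be the same for owned and rented servers, which is a simplification.

```python
# Hypothetical demand, in servers needed per period, with one seasonal peak.
demand = [40, 50, 45, 60, 200, 55]
COST_PER_SERVER_PERIOD = 1  # assumed unit cost, identical for both models

def fixed_cost(capacity, periods):
    """Cost of owning a fixed number of servers for every period."""
    return capacity * periods * COST_PER_SERVER_PERIOD

def elastic_cost(demand):
    """Cost when paying only for the servers actually used in each period."""
    return sum(demand) * COST_PER_SERVER_PERIOD

def unserved_demand(demand, capacity):
    """Server-periods of demand that a fixed capacity cannot serve."""
    return sum(max(0, d - capacity) for d in demand)

peak = max(demand)
print(fixed_cost(peak, len(demand)))  # 1200: provisioned for the peak
print(elastic_cost(demand))           # 450: pay for what is used
print(fixed_cost(60, len(demand)))    # 360: provisioned below the peak
print(unserved_demand(demand, 60))    # 140: demand lost during the peak
```

Provisioning for the peak costs 1200 units while only 450 are used, whereas provisioning for 60 servers is cheap but leaves 140 server-periods of demand unserved. Under these assumptions the elastic model avoids both problems.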

###Chapter 13 Exercises

No exercises for Chapter 13!